YouTube is failing to remove videos spreading baseless conspiracies about widespread voter fraud, more than a week after announcing it would take down such content, according to a Tech Transparency Project (TTP) investigation that raises questions about YouTube’s willingness to enforce its new policy.

Google-owned YouTube said in a Dec. 9 blog post that it would “start removing any piece of content uploaded today (or anytime after) that misleads people by alleging that widespread fraud or errors changed the outcome of the 2020 U.S. Presidential election.” The new policy marked a change of course for YouTube, which had previously allowed videos peddling false claims of voter fraud to proliferate.

But a week after YouTube’s announcement, TTP easily found examples of new videos—some with the words “voter fraud” in their title—circulating on YouTube, often racking up thousands of views. In some cases, the videos are being used as fundraising vehicles for the channels or organizations that posted them.

The findings highlight YouTube’s continuing role as a vector for misinformation despite its public pledges to do better. YouTube often escapes the scrutiny directed at Facebook and Twitter over misleading and dangerous content on social media, even as evidence mounts that YouTube is a powerful engine of radicalization.

The continuing presence of false voter fraud claims on YouTube is likely to feed efforts by President Trump and his allies to sow doubt about Joe Biden’s electoral victory and fuel the far-right conspiracy theories that have taken hold among large swaths of the American public.

‘Shredded ballots’

YouTube, which is used by more than 70% of Americans, has met with widespread criticism for allowing election misinformation during and after the 2020 campaign. A study from the research project Transparency.tube found that videos supporting the idea of election fraud received more than 137 million views the week of Nov. 3.

YouTube defended its approach on Dec. 9, saying it had taken down “thousands of harmful and misleading elections-related videos” since September. But the company also signaled a shift in enforcement. Citing the so-called safe harbor deadline, the completion date for state-level challenges to election recounts and audits, YouTube said it would begin removing videos that falsely allege “widespread fraud or errors” swung the election.

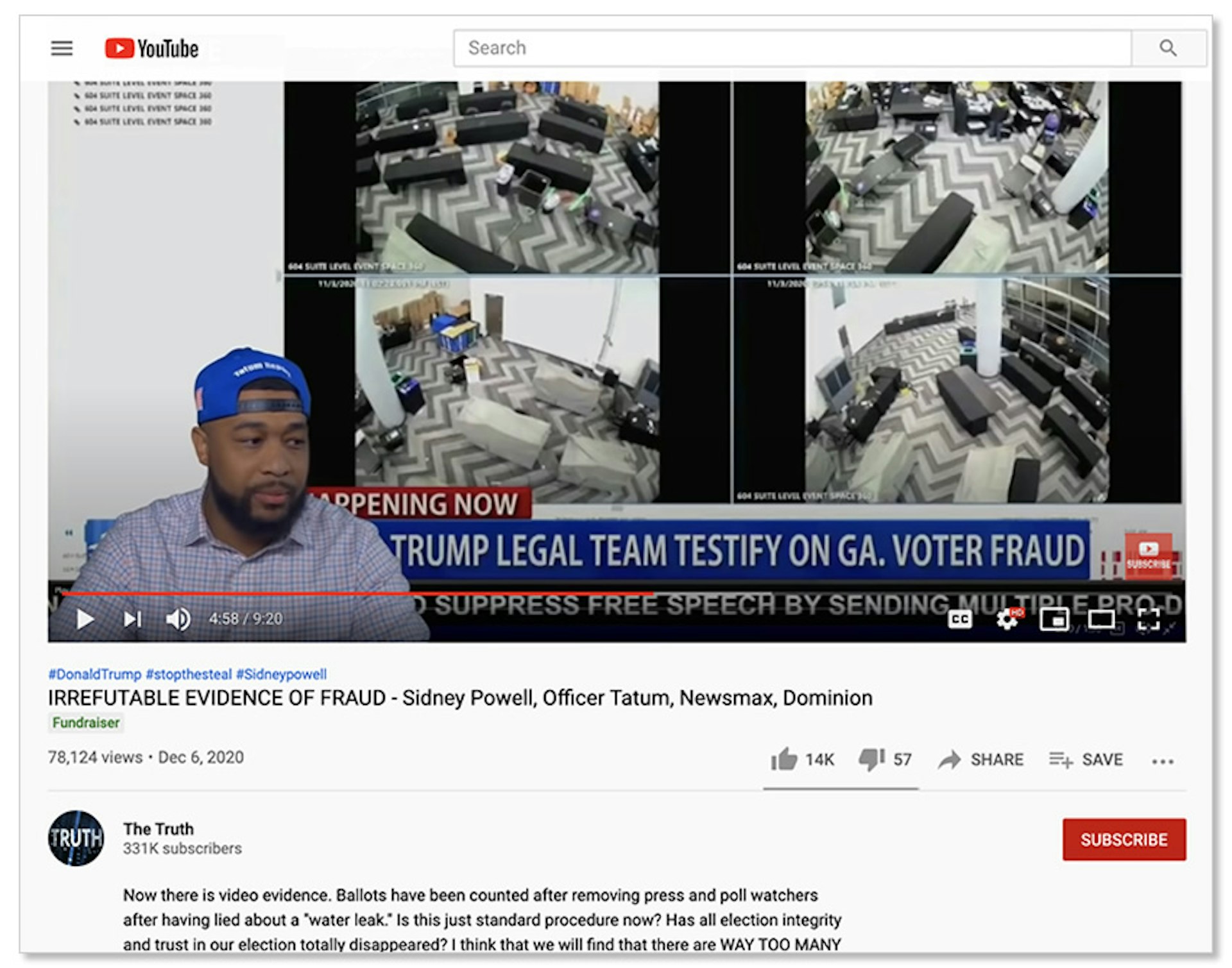

Despite that policy change, TTP found that baseless voter fraud videos continue to pop up on YouTube, some of them with significant reach. For example, one posted on Dec. 10—the day after YouTube’s policy announcement—is titled “IRREFUTABLE EVIDENCE OF FRAUD - Sidney Powell, Officer Tatum, Newsmax, Dominion.” The video, which featured a series of clips from right-wing cable channels One America News Network and Newsmax, reached 44,000 views in six days.

In one interview clip in the video, former Trump campaign lawyer Sidney Powell alleges “massive evidence of the shaving of votes and the flipping of votes from Trump to Biden” in Georgia voting machines and claims she has a “huge bag of shredded ballots” in her office. The video was posted by the YouTube channel “The Truth,” which has around 331,000 subscribers, making it a potentially significant spreader of misinformation. The channel, which launched in 2007, initially posted scenic outdoor videos and drone footage but transitioned in the past year to running far-right content and conspiracy theories, drawing a bigger audience. Its most popular video, about President Trump’s border wall, generated 4.7 million views.

Notably, The Truth’s voter fraud video uses One America News Network footage. YouTube suspended OAN last month for posting Covid-19 misinformation.

Merchandise sales

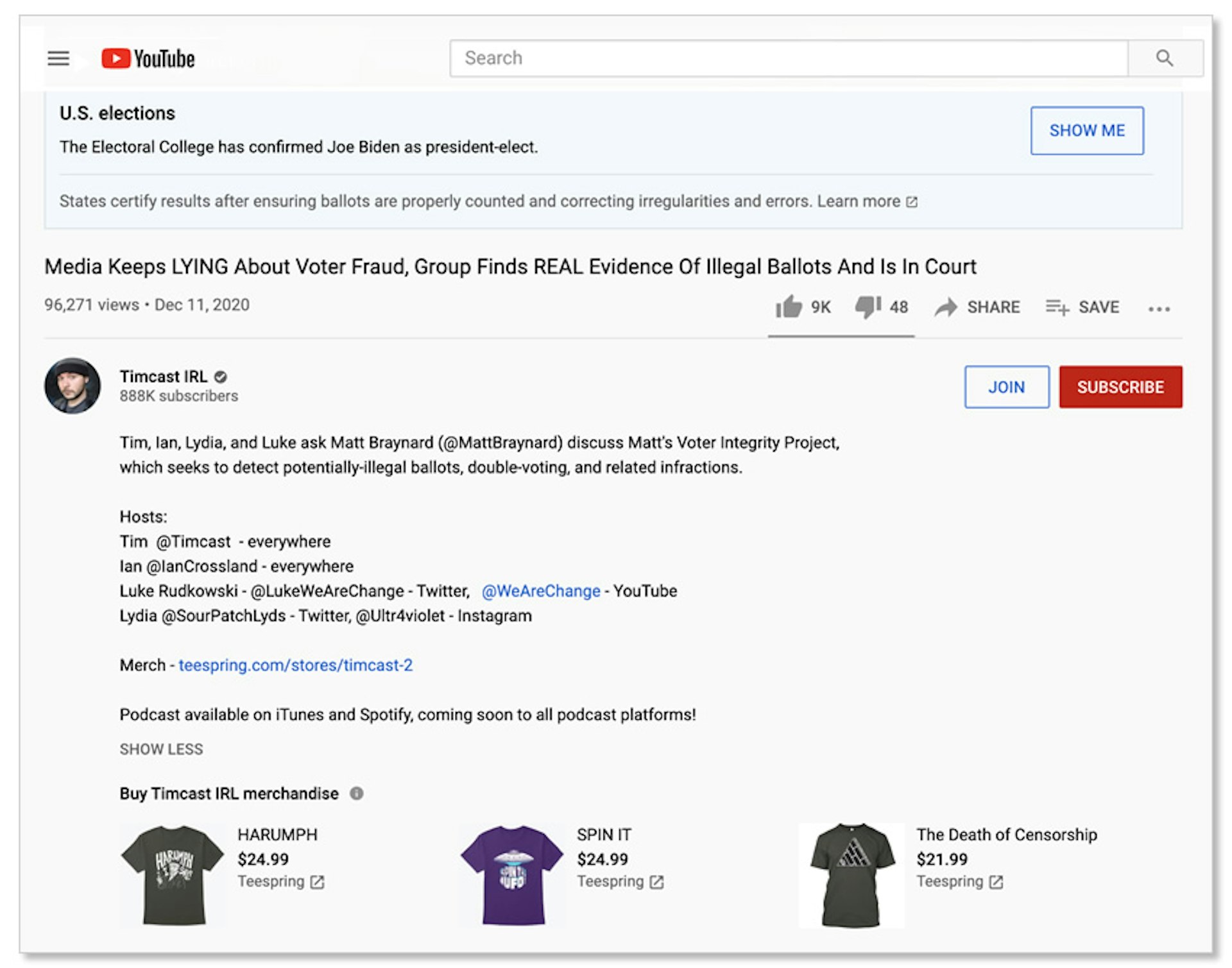

Another YouTube video, posted Dec. 11 with the title “Media Keeps LYING About Voter Fraud, Group Finds REAL Evidence Of Illegal Ballots And Is In Court,” generated more than 96,000 views in five days. Posted on the verified YouTube channel “Timcast IRL,” the video features host Tim Pool interviewing former Trump campaign staffer Matt Braynard, known for his debunked claims that out-of-state residents cast illegal ballots in Georgia.

YouTube affixed a label under the video that reads, “The Electoral College has confirmed Joe Biden as president-elect,” and “States certify results after ensuring ballots are properly counted and correcting irregularities and errors.” But it left the misleading video up, allowing the channel, which has 888,000 subscribers, to keep spreading the message.

On top of that, the video description featured an advertisement for Timcast IRL T-shirts. Clicking on the information icon on the ad produced a pop-up message that reads, “The channel and YouTube may receive compensation from purchases in the links below.” In other words, YouTube may be profiting from voter fraud misinformation it promised to remove.

One video posted Dec. 10 and titled “Van FULL of Ballots showed up at 3:30am AFTER DEADLINE in Michigan?” featured YouTube prankster and failed congressional candidate Joey Saladino repeating a debunked claim that ballots were smuggled late into a Detroit counting center. Like the previous example, the video carries a YouTube label stating that Joe Biden is the confirmed president-elect. But the channel in this case, “Joey Saladino Show, Clips,” also seeks to raise money through the video by including links to a merchandise site and to Saladino’s account on Patreon, the subscription service that lets people support content creators.

Litany of conspiracies

YouTube channels with smaller followings are also amplifying false claims of voter fraud. A Dec. 15 video called “President Trump was Robbed,” featured on the channel “CHaettig,” appears to be a political ad with music, slick editing and a voiceover. It plays like a greatest hits of election fraud conspiracies, citing “suitcases full of ballots added in secret in Georgia” and “dead people voting in Wisconsin,” both claims that have been dismantled by fact checkers. The video ends with a call for people to contact their legislators to demand “honest elections,” along with a link to the Trump campaign site, which solicits donations for an “Election Defense Fund.” The video description includes hashtags like #voterfraud and #electionfraud.

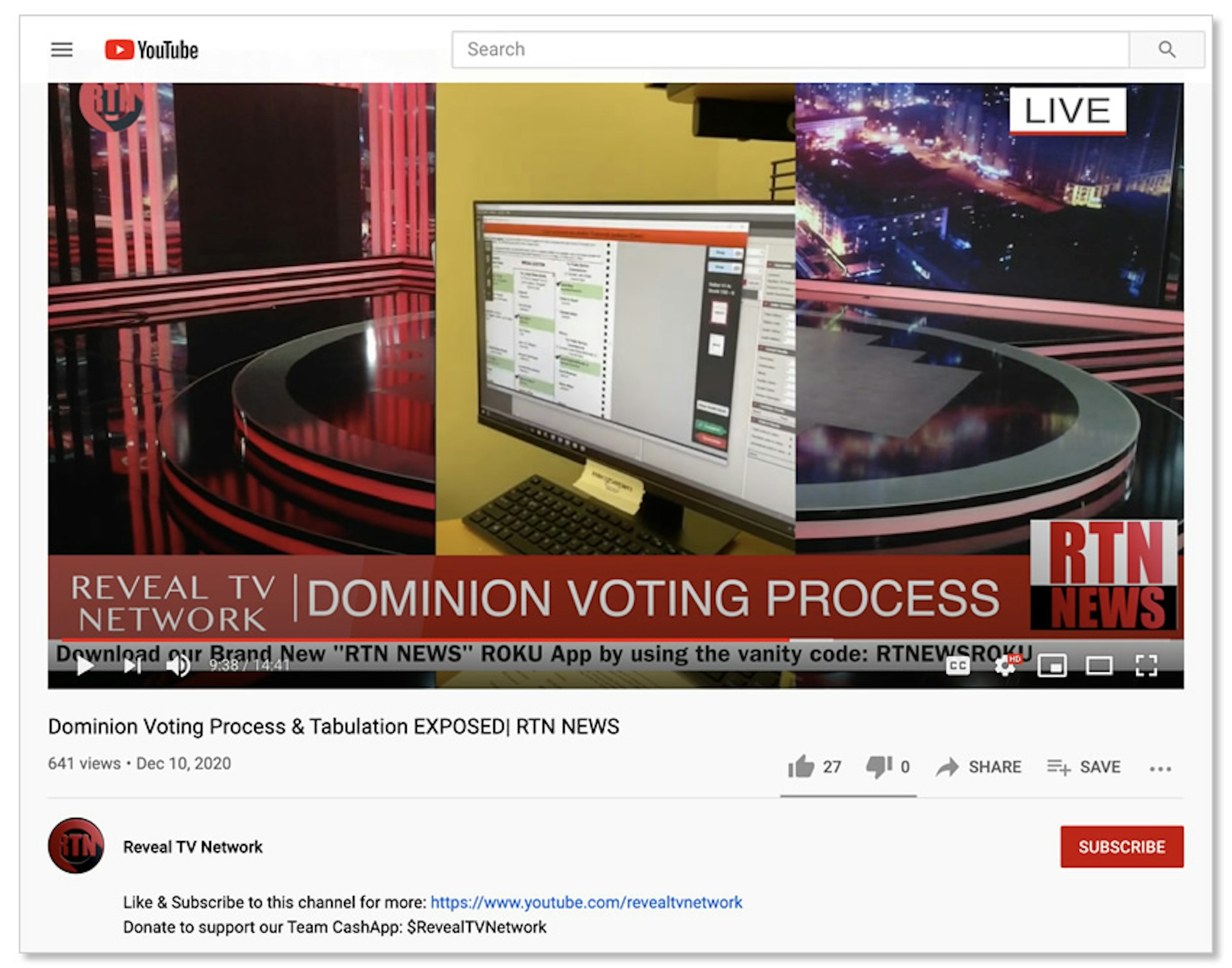

Another video posted on Dec. 10 by the YouTube channel “Reveal TV Network,” which describes itself as a nonprofit Christian ministry, is titled “Dominion Voting Process & Tabulation EXPOSED| RTN NEWS.” The rambling, nearly 15-minute video shows a woman who identifies herself as an “election supervisor” scanning ballots on a computer and talking about how she can change votes. The video’s title and content point to a baseless conspiracy theory that software from Dominion Voting Systems switched thousands of votes from Trump to Biden. Federal, state and local election officials have refuted these claims.

In a pinned comment on the video, Reveal TV suggested that YouTube’s Dec. 9 policy change on voter fraud content could endanger the video and encouraged viewers to get the network’s Roku app. The video description also asked viewers to donate to Reveal TV through Cash App and linked to a series of earlier videos on the channel alleging voter fraud in Georgia.

TTP’s findings show YouTube’s struggles to control the flow of misinformation on its platform, even when it has made a public announcement about a new crackdown. All of the above examples were posted in the days immediately following YouTube’s Dec. 9 pledge to remove false claims about widespread voter fraud and the 2020 election.

Notably, YouTube did not say it would take down such videos posted prior to Dec. 9, suggesting its new vigilance about voter fraud conspiracy videos may not be applied retroactively.

In addition, a notice on YouTube’s policy page said the service will only start issuing strikes for false voter fraud content after Inauguration Day on Jan. 20, in what amounts to a grace period. YouTube terminates channels with three strikes.