Key points from this report:

- YouTube recommended hundreds of videos about guns and gun violence to accounts for boys interested in video games, according to a new study.

- Some of the recommended videos gave instructions on how to convert guns into automatic weapons or depicted school shootings.

- The gamer accounts that watched the YouTube-recommended videos got served a much higher volume of gun- and shooting-related content.

- Many of the videos violated YouTube’s own policies on firearms, violence, and child safety, and YouTube took no apparent steps to age-restrict them.

- YouTube also recommended a movie about serial killer Jeffrey Dahmer to minor accounts, the study found.

YouTube’s algorithms are pushing boys interested in video games to scenes of school shootings, instructions on how to use and modify weapons, and even a movie about notorious serial killer Jeffrey Dahmer, according to a study by the Tech Transparency Project (TTP) that raises new questions about the safety of YouTube’s recommendation system.

For this study, our researchers created four test YouTube accounts—two identified as nine-year-old boys and two identified as 14-year-old boys. The researchers established each boy’s interest in video games by watching playlists composed entirely of gaming videos.

We then logged and analyzed the videos that YouTube’s algorithm recommended to these minor accounts, with one of each age group watching the recommended videos and one not engaging with them. The study found that YouTube pushed content on shootings and weapons to all of the gamer accounts, but at a much higher volume to the users who clicked on the YouTube-recommended videos.

This flies in the face of YouTube’s assurances that its algorithms are safe. In a 2021 blog post, the company’s vice president of engineering said YouTube has “made delivering responsible recommendations our top priority,” adding that “recent published papers conclude YouTube recommendations aren’t actually steering viewers towards extreme content.” But our researchers found that YouTube did serve extreme content to kids, including videos that violated the platform’s own policies on violence, firearms, and child safety.

A 2022 Pew Research survey found that Google-owned YouTube was the most popular social media platform for kids between the ages of 13 and 17, with 95% of those surveyed using YouTube. Despite its popularity with young people, however, YouTube has largely escaped the intense scrutiny aimed at platforms like TikTok and Instagram for their impact on teen development and well-being.

Algorithmic ‘signals’

YouTube’s algorithms decide what to recommend to users based on “signals” such as what the user clicks on, how much time they spend watching videos, and which videos they like and share. Recommendations drive a “significant amount of the overall viewership on YouTube, even more than channel subscriptions or search,” according to the company. This helps keep eyes on YouTube content and ads. There is no way to turn off the recommendations feature on YouTube, but users can remove individual recommended videos from their own feeds.

To better understand the types of content that YouTube recommends to minors, TTP created four YouTube test accounts, providing the birthdates of a nine-year-old for two accounts and the birthdates of a 14-year-old for two accounts, and identifying them all as male. (The nine-year-old accounts were linked to a parent account, per YouTube’s requirements for children under 13.) Our researchers then established the boys’ interest in video games, one of the most popular activities for American kids. An adult researcher posing as each child persona watched a list of at least 100 gaming playthrough videos (showing a video game being played from start to finish).

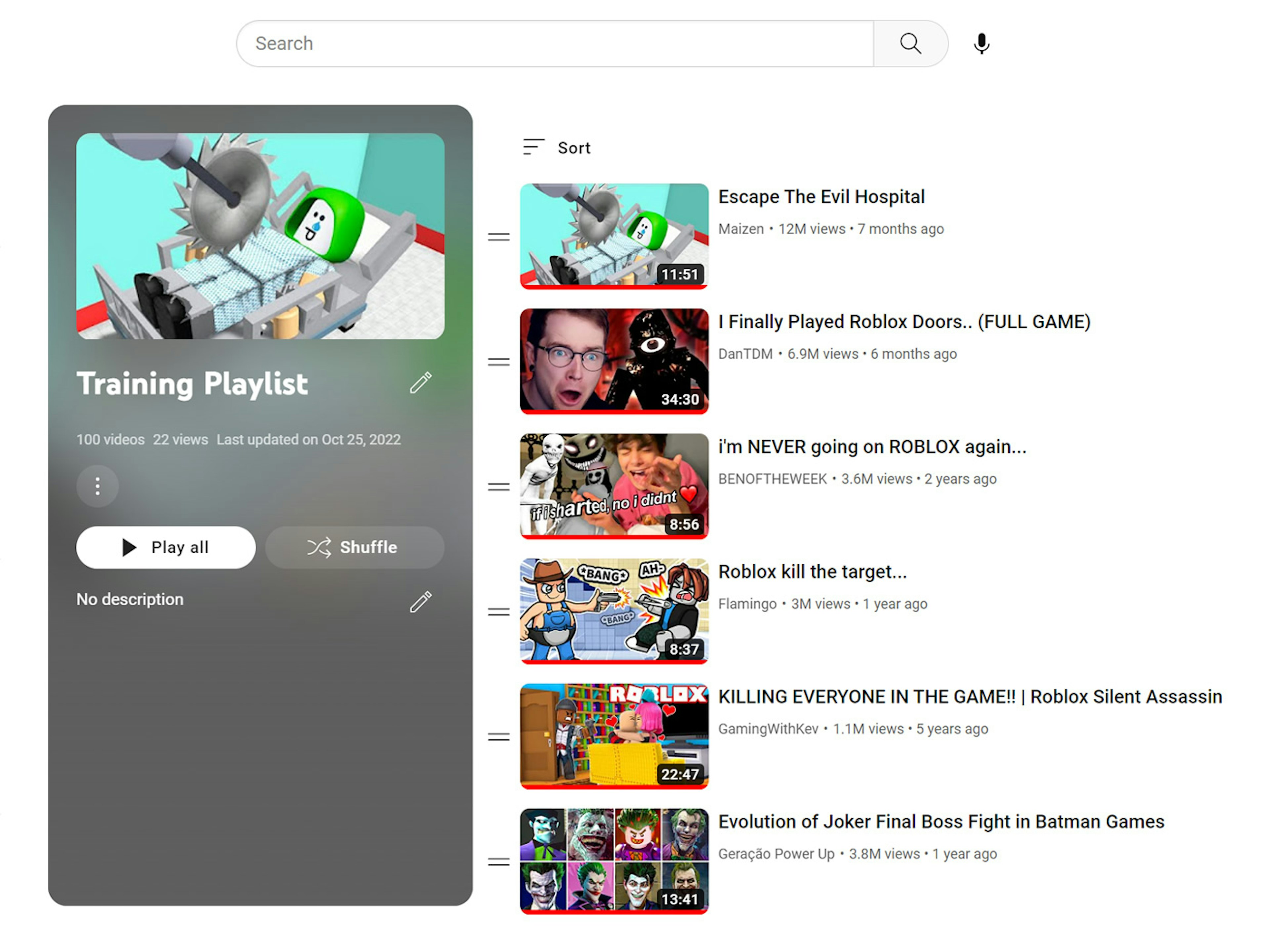

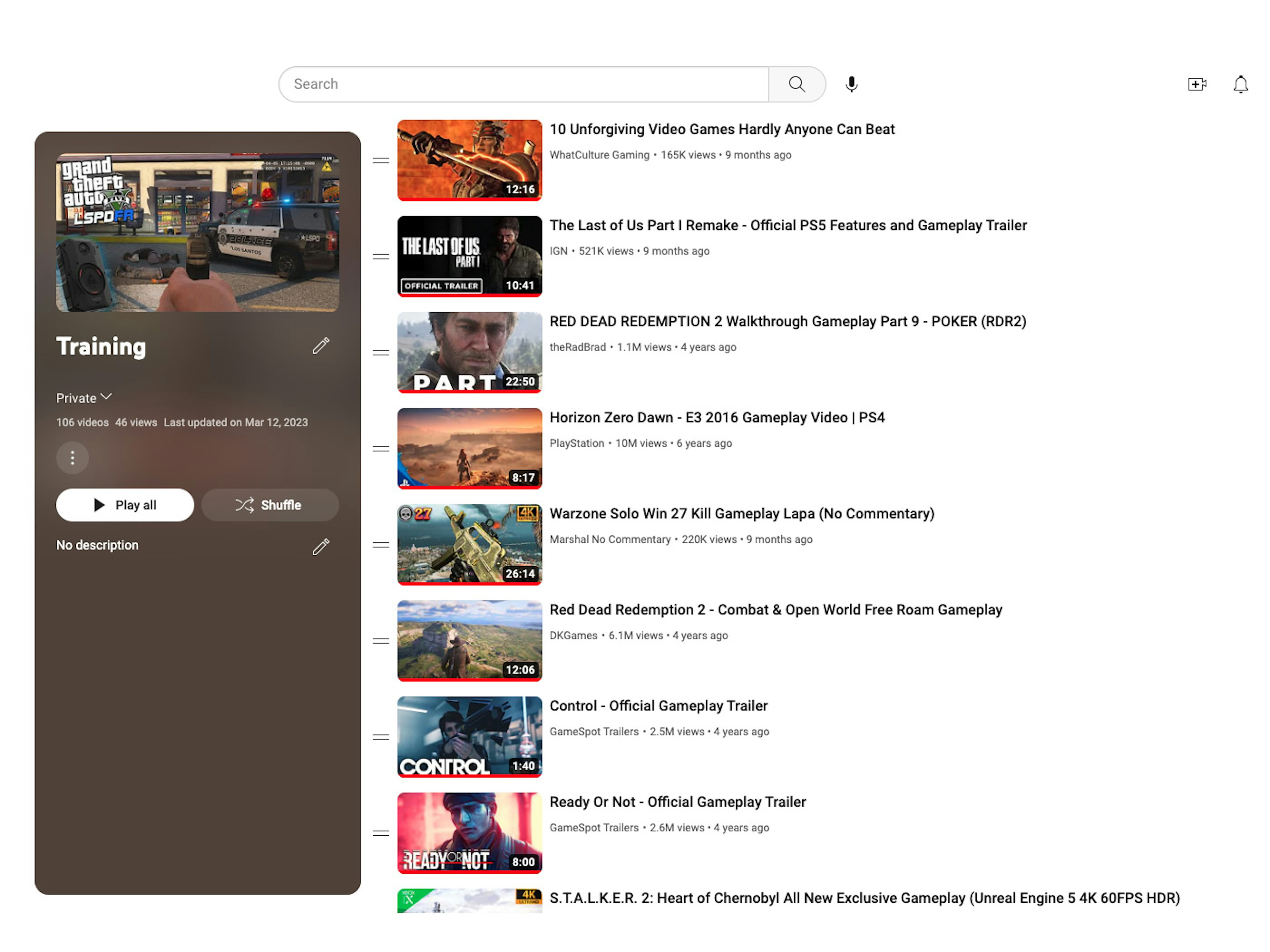

The playlist for the nine-year-old accounts included videos for games like Roblox, Lego Star Wars, and Five Nights at Freddy’s, which features an animatronic bear character. The 14-year-old playlist consisted primarily of videos of shooter and action games like Grand Theft Auto, Halo, and Red Dead Redemption. Both playlists were made up exclusively of gaming videos and no other content. (Read more about the playlists and methodology at the bottom of this report.)

Example of gaming videos in the nine-year-old playlist.

Once the accounts finished watching the gaming playlists, our researchers monitored and logged the YouTube-recommended videos that appeared on each account’s home page every day for a 30-day period, from Nov. 1 to 30, 2022. (YouTube recommends videos on both the user’s home page and in the “Up Next” panel that appears when a user is watching a video; this study focused solely on the home page recommendations.) One account in each age set watched a selection of recommended videos and one did not, to test whether engagement changed the types of videos YouTube suggested.

The study found that YouTube recommended weapons- and shooting-related videos to all four of the gamer accounts. But YouTube pushed more of this content, in some cases more than 10 times more, to the accounts that watched the recommendations (referred to in this report as “engagement accounts”). In other words, if a gamer showed interest in the videos recommended by YouTube, YouTube’s algorithm served up more and more content related to real-world violence.

These videos included scenes depicting school shootings and other mass shooting events; graphic demonstrations of how much damage guns can inflict on a human body; and how-to guides for converting a handgun to a fully automatic weapon. YouTube also pushed a movie about the young life of serial killer Jeffrey Dahmer.

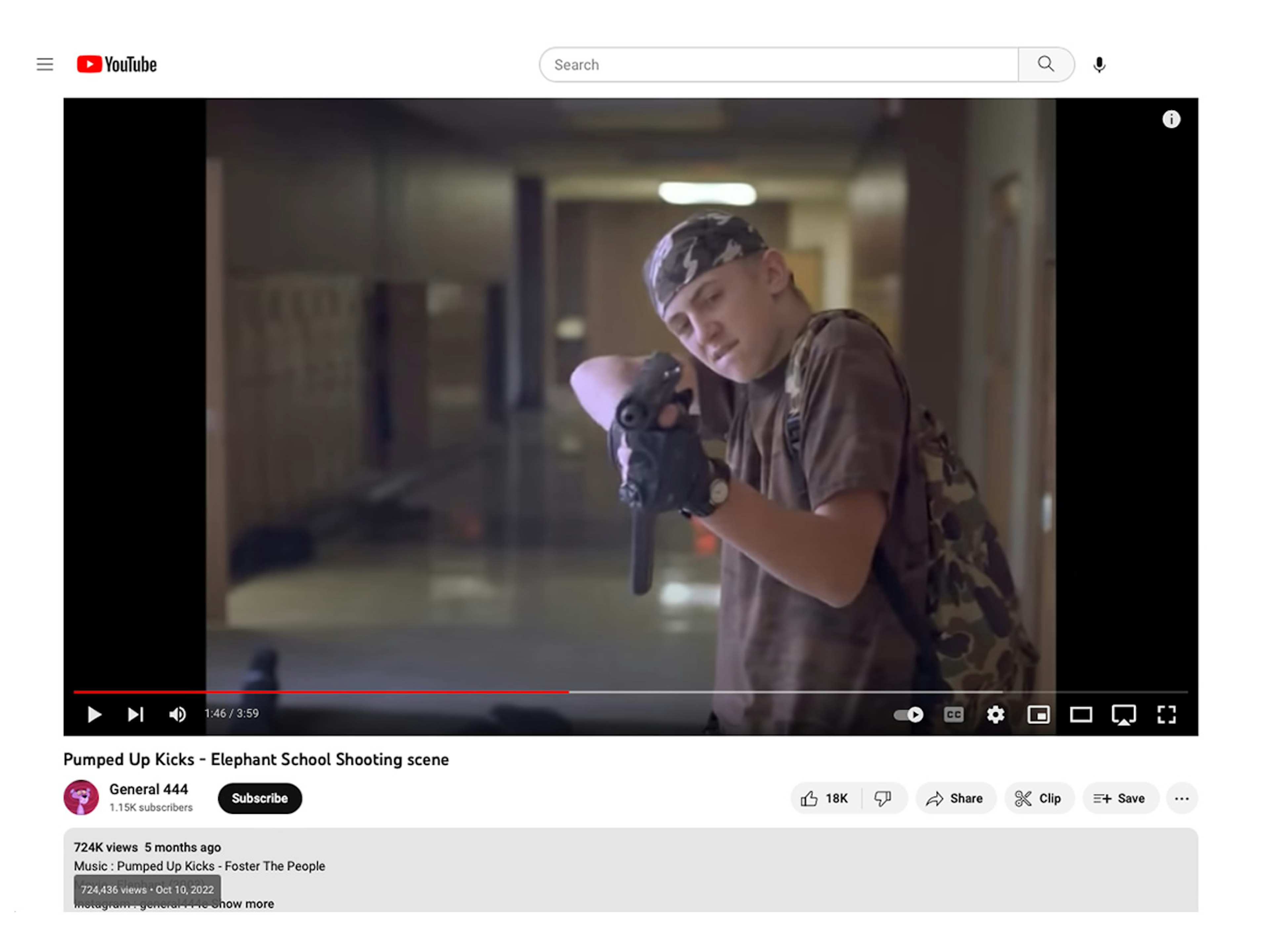

One of the videos recommended to a 14-year-old account isolated a graphic movie scene of a Columbine-like school shooting and set it to music.

Many of these videos violated YouTube policies, which prohibit showing “violent or gory content intended to shock or disgust viewers,” “harmful or dangerous acts involving minors,” and “instructions on how to convert a firearm to automatic.” YouTube took no apparent steps to age-restrict these videos, despite stating it has the option to do so with content that features “adults participating in dangerous activities that minors could easily imitate.”

For the gamer accounts that clicked on YouTube’s recommendations, the stream of weapons-related content increased dramatically.

For example, between Nov. 1 and Nov. 30, YouTube pushed 382 real firearms videos to the nine-year-old engagement account—an average of more than 12 per day. The videos included graphic demonstrations of what high-powered weapons can do to a human torso or human head. YouTube served far fewer weapons videos—34—to the gamer of the same age who did not watch the recommendations.

During the same 30-day period, YouTube served 1,325 real firearms videos to the 14-year-old engagement account—an average of more than 44 per day. The videos featured shooting scenes and “how-tos” for using or modifying firearms. By contrast, the 14-year-old account that did not click on the recommended content got 172 weapons videos.
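
The per-day averages and the gap between the two account types can be checked with simple arithmetic. The following is a minimal sketch in Python, not part of TTP's methodology; the counts are the ones reported above, while the names `DAYS`, `counts`, and `summarize` are illustrative assumptions.

```python
# Real-firearms video counts reported above for Nov. 1-30, 2022.
DAYS = 30

# (engagement account count, non-engagement account count) per age group
counts = {
    "nine-year-old": (382, 34),
    "14-year-old": (1325, 172),
}


def summarize(engaged: int, passive: int, days: int = DAYS) -> tuple[float, float]:
    """Return (average videos per day for the engagement account,
    ratio of the engagement count to the non-engagement count)."""
    return engaged / days, engaged / passive


for age, (engaged, passive) in counts.items():
    per_day, ratio = summarize(engaged, passive)
    print(f"{age}: {per_day:.1f} per day, {ratio:.1f}x the non-engagement account")
```

Under these figures, the nine-year-old engagement account received roughly 11 times as many real firearms videos as its non-engagement counterpart, and the 14-year-old engagement account roughly eight times as many.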

On two different occasions, YouTube pushed more than 100 weapons videos in a single day to the 14-year-old engagement account. Some of the individual videos were recommended repeatedly, including one titled “12 year old and 14 year old have HUGE gun fight with police,” featuring police body camera footage of a nighttime shootout.

School Shootings

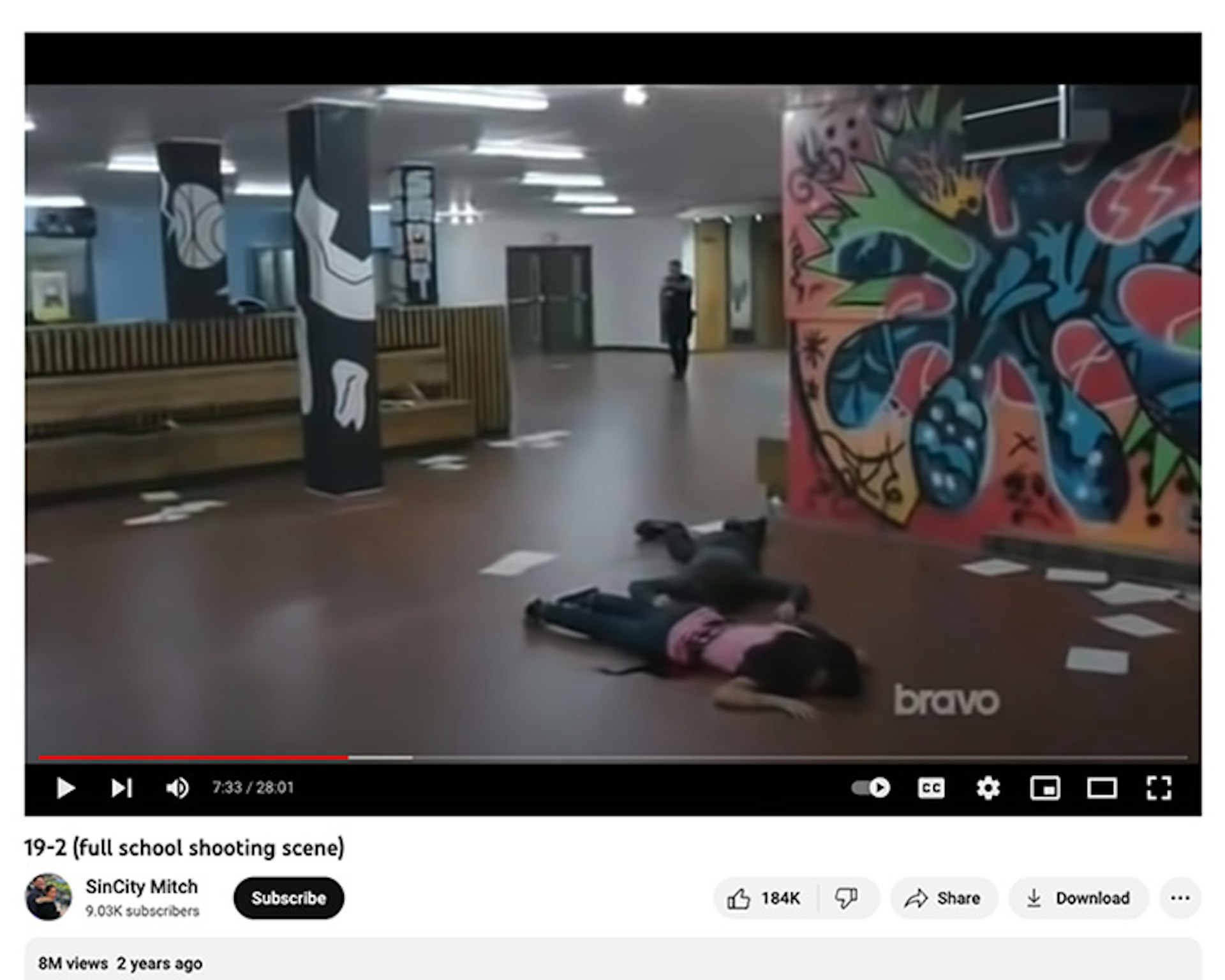

Among the more disturbing content recommended by YouTube’s algorithm were scenes of school shootings.

YouTube pushed a video to the teen engagement account that isolated a scene from the Canadian drama 19-2, showing police exchanging gunfire with a school shooter as students scream and bodies lie on the ground. The episode from which the scene is drawn is rated TV-MA, meaning it is “specifically designed to be viewed by adults and may be unsuitable for children under 17,” according to the TV Parental Guidelines Monitoring Board.

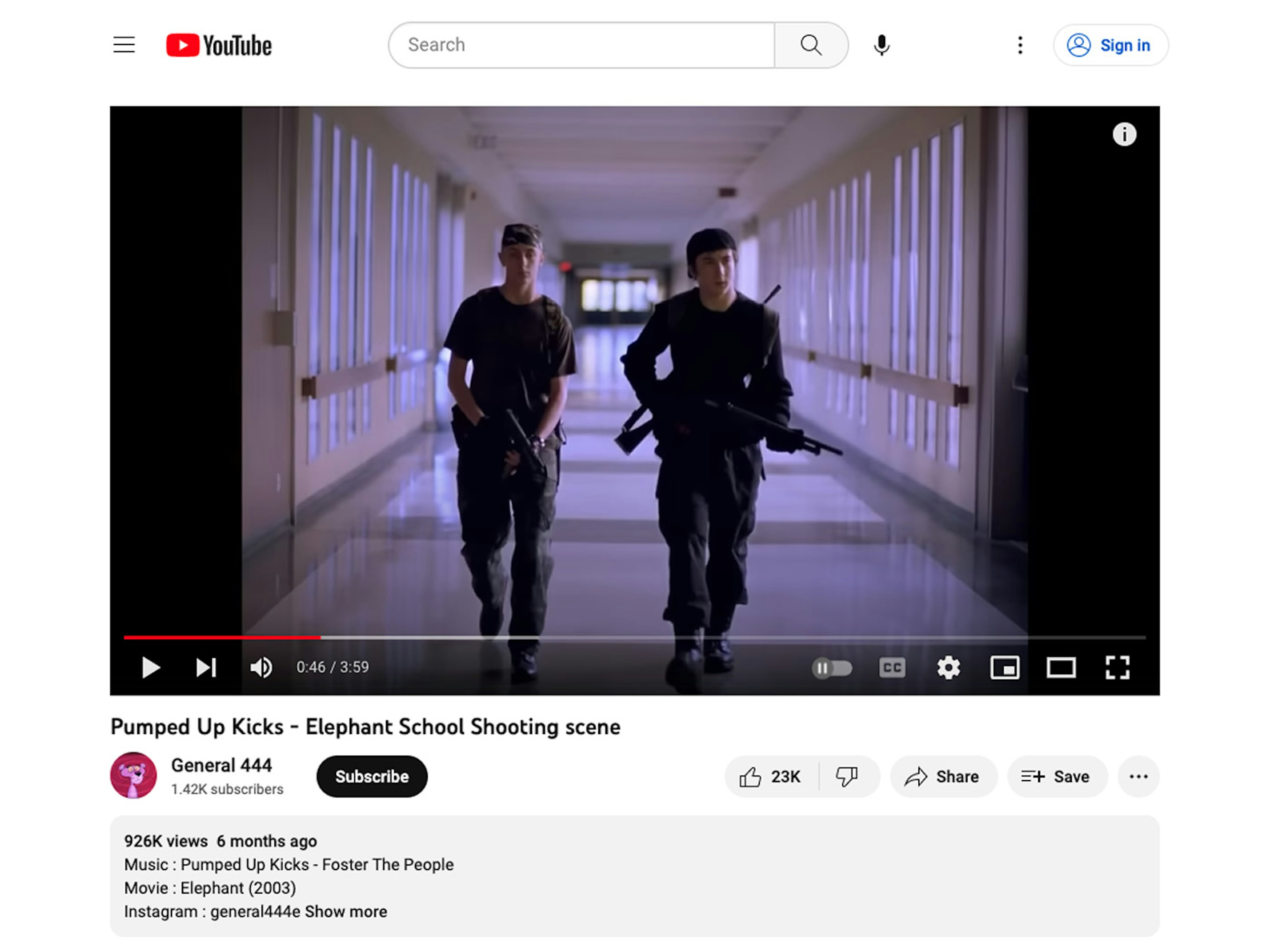

Another video served to the same teen account isolated a graphic school shooting scene from the 2003 movie “Elephant,” which is loosely based on the 1999 massacre at Columbine High School that left 12 students and one teacher dead. The scene follows two teens as they walk through their high school shooting students and teachers with military-style rifles. The scene posted to YouTube is set to the song “Pumped Up Kicks” by Foster the People, a song released in 2010 about a troubled teenager who imagines shooting people.

This video pushed to a 14-year-old isolated a graphic scene of a school shooting from the movie “Elephant.”

It appears that the YouTube creator behind the video added “Pumped Up Kicks” to the scene. (“Pumped Up Kicks” wasn’t released until years after the film came out.) The song has some troubling associations. According to court documents, Nikolas Cruz, the teen gunman who carried out the deadly school shooting in Parkland, Florida, in 2018, searched YouTube for “pumped up kicks columbine high school” multiple times before his attack. (Other documents released by the Broward County Sheriff’s Office show Cruz searched for “School Shooter Hype Music Video” the day before the shooting.)

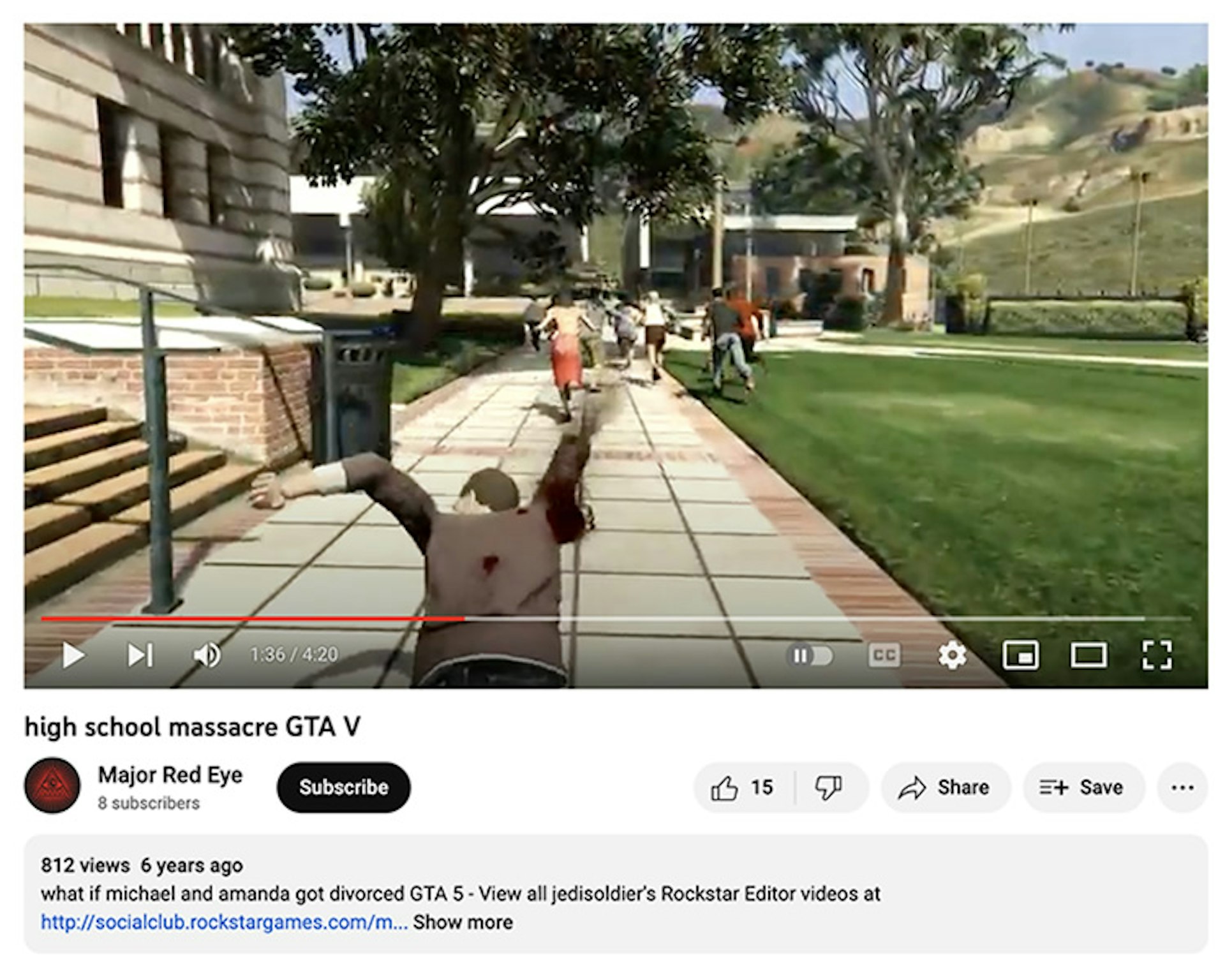

One video that YouTube served to both 14-year-old accounts includes an isolated scene from the Grand Theft Auto video game, in which a gamer plays the role of a school shooter, mowing down a group of students in slow motion. The video, titled “high school massacre GTA V,” has relatively low engagement—racking up about 800 views since it was published in 2016—but YouTube’s algorithm still recommended it.

The first two of these school shooting videos appear to violate YouTube’s violent and graphic content policy, which bans “violent or gory content intended to shock or disgust viewers.” The policy also prohibits violent footage from movies “where the viewer is not given enough context to understand that the footage is dramatized or fictional.” On top of that, YouTube’s child safety policy bans “content showing a minor participating in dangerous activities.”

The third video, showing a high school massacre in the video game Grand Theft Auto, also appears to run afoul of YouTube’s guidelines. The platform has said that while it allows “scripted or simulated” violence in gaming videos, it may age-restrict content that “focuses entirely on the most graphically violent part” of a video game.

YouTube is serving up these videos to minors at a time when more than half of all school shootings are carried out by people under the age of 21. But the company keeps insisting that its algorithms are safe.

In a recent brief to the Supreme Court in a case involving legal immunity for tech platforms, YouTube parent company Google wrote, “YouTube’s systems are designed to identify and remove prohibited content,” adding, “Since 2019, YouTube’s recommendation algorithms have not displayed borderline videos (like gory horror clips) that even come close to violating YouTube’s policies.”

Instructional videos

Following the mass shooting in Buffalo, New York, in May 2022, the group Everytown for Gun Safety Support Fund reported that the 18-year-old shooter learned how to improve his marksmanship, reload firearms faster, and modify his rifle by watching YouTube videos. (According to the report, posts attributed to the shooter just days before the attack read, “I’ve just been sitting around watching YouTube and shit for the last few days. I think this is the closest I’ll ever be to being ready.”) In response, YouTube said it removed some videos that violated its rules and would continue to enforce its firearms policy, which, among other things, prohibits providing instructions on how to convert a firearm to automatic firing capability.

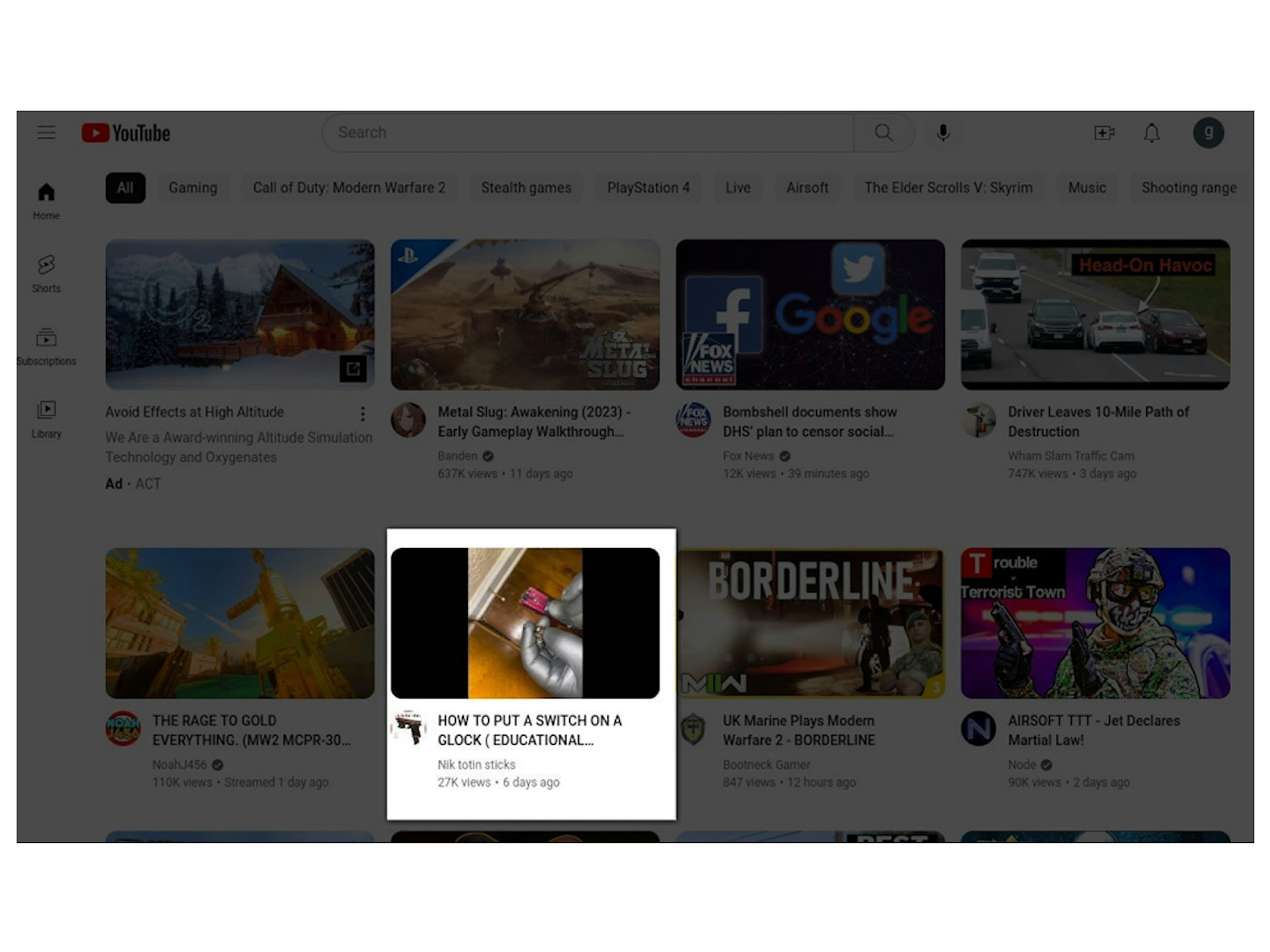

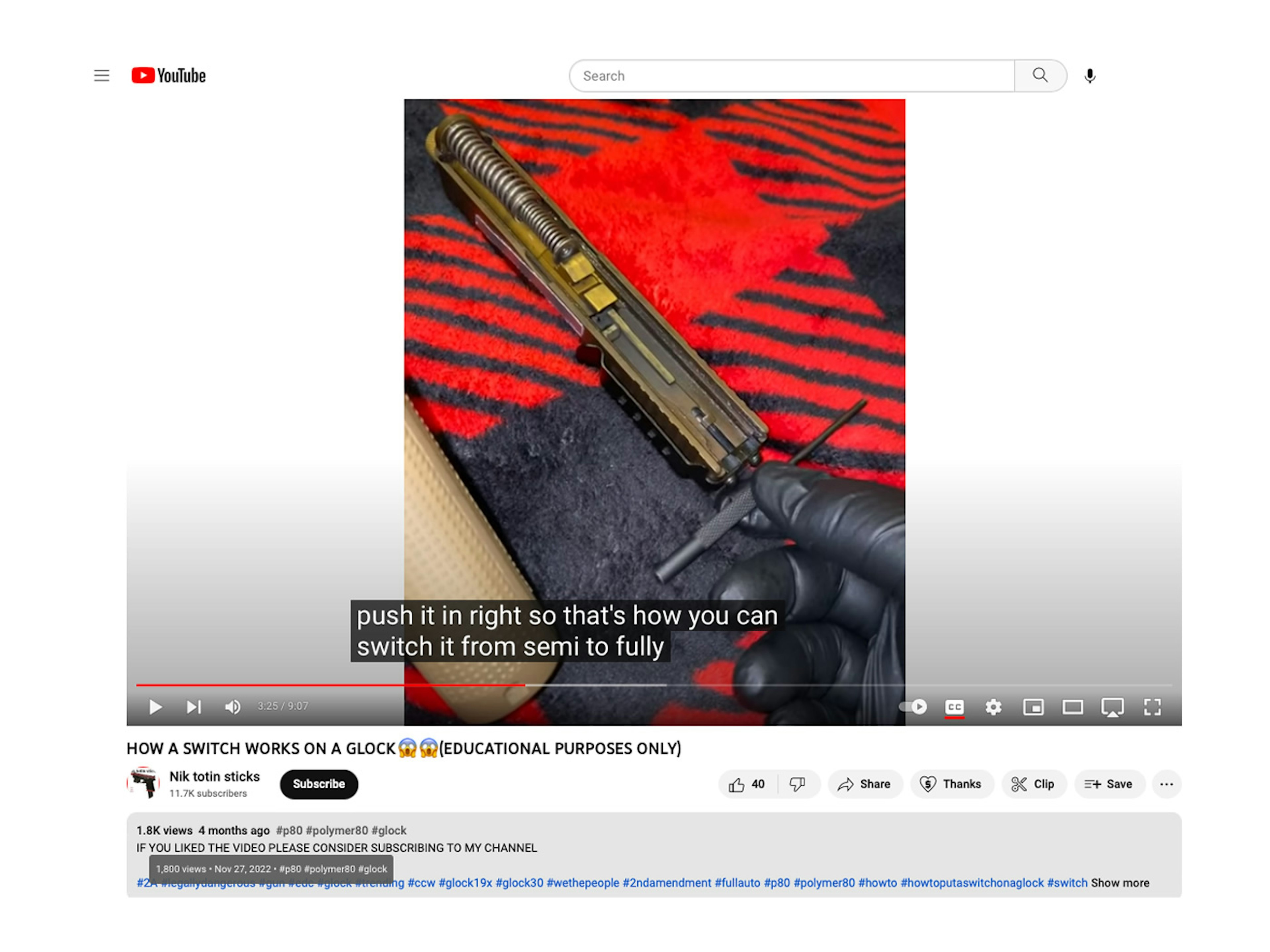

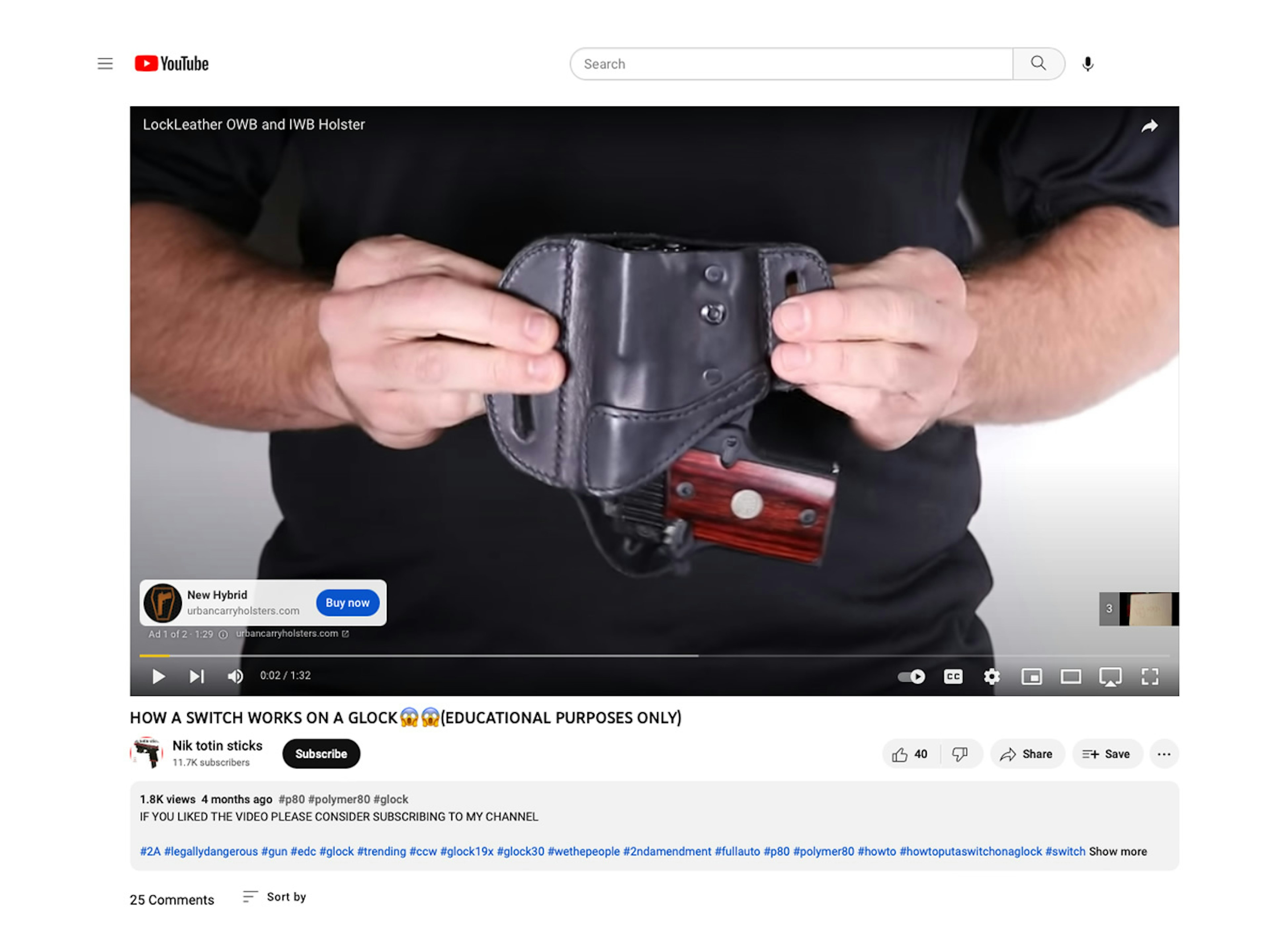

But our study found that YouTube pushed these types of instructional videos to our 14-year-old engagement account. One such video, “HOW TO PUT A SWITCH ON A GLOCK ( EDUCATIONAL PURPOSES ONLY ),” showed how to convert a handgun into a fully automatic firearm.

YouTube later removed the video, leaving a notice that it violated the platform’s Community Guidelines. But when our researchers examined the YouTube channel that produced the video, they discovered that the creator had uploaded a similar video less than two weeks later with a slightly modified title, “HOW A SWITCH WORKS ON A GLOCK😱😱(EDUCATIONAL PURPOSES ONLY).” That video remains available today and is monetized with advertising, meaning both the creator and YouTube are potentially profiting from it.

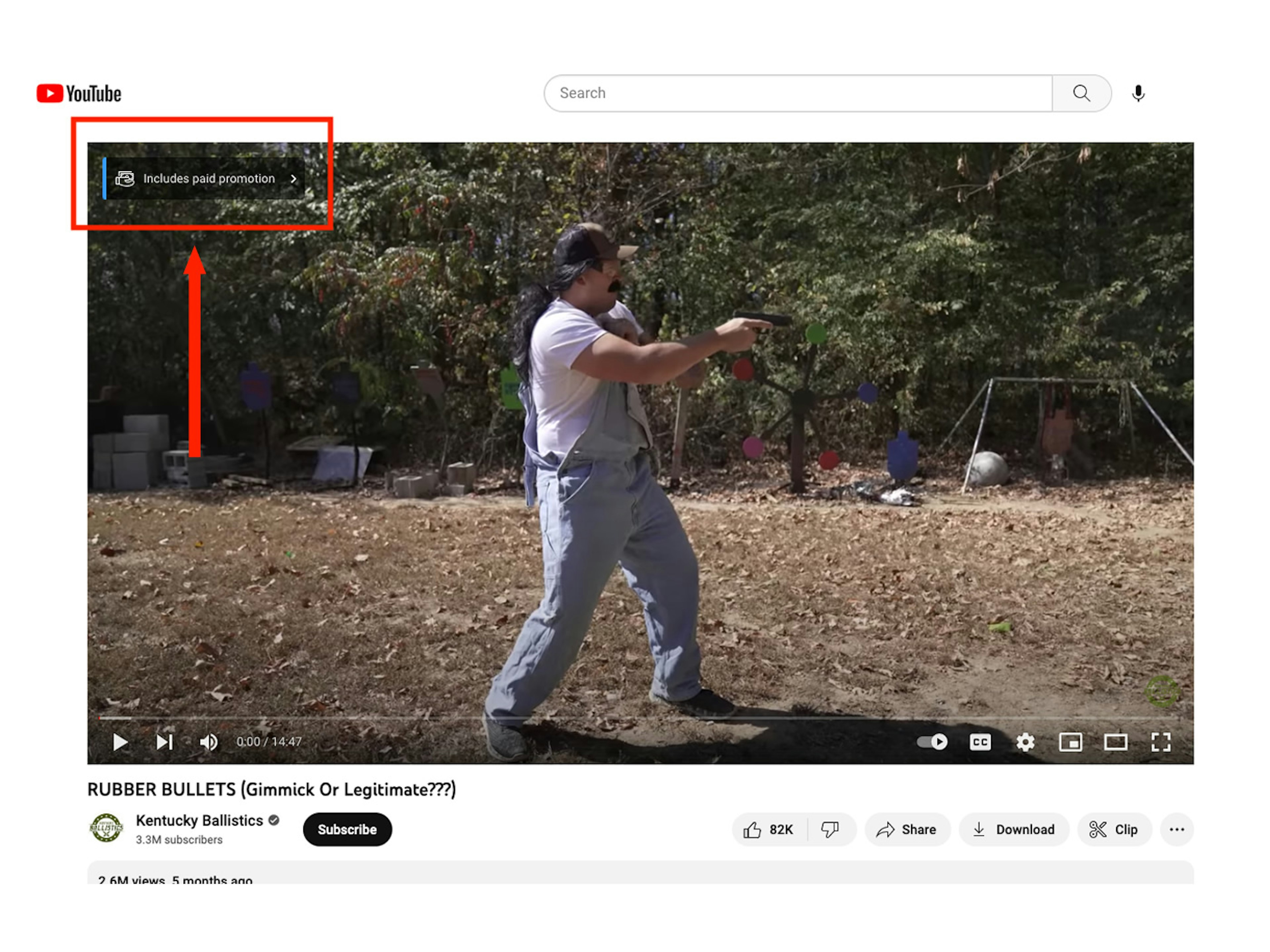

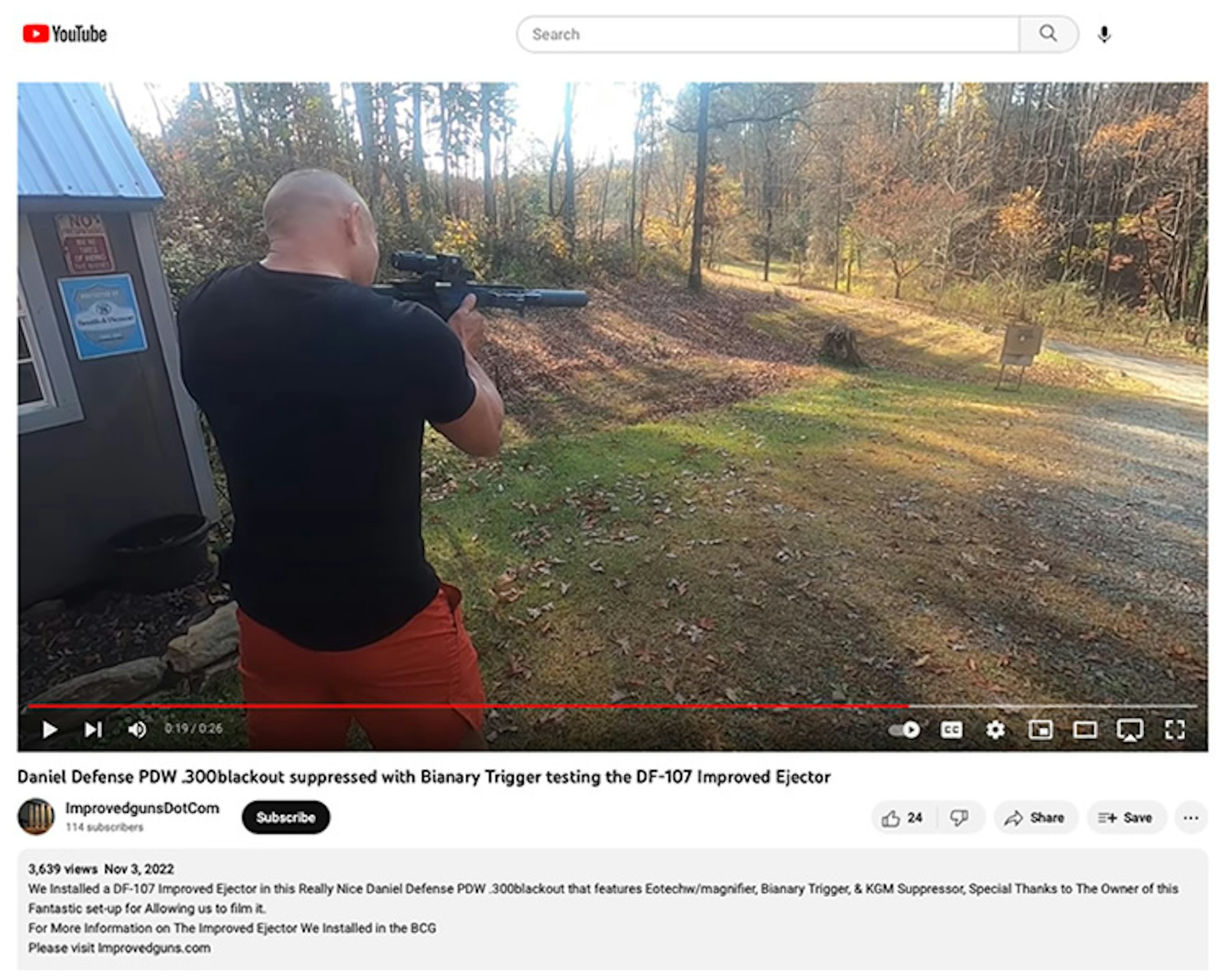

YouTube pushed a video to a 14-year-old account on how to convert a Glock pistol into a fully automatic firearm.

The study found YouTube recommending numerous other weapons-related videos to minors that violated the platform’s policies.

For example, YouTube’s algorithm pushed a video titled “Mag-Fed 20MM Rifle with Suppressor” to the 14-year-old who watched recommended content. The description on the 24-second video, which was uploaded 16 years ago and has 4.8 million views, names the rifle and suppressor and links to a website selling them.

That’s a clear violation of YouTube’s firearms policy, which does not allow content that includes “Links in the title or description of your video to sites where firearms or the accessories noted above are sold.”

Another video pushed to the same teen account features a man shooting a Daniel Defense rifle with several modifications to help the weapon fire faster and simulate fully automatic gunfire. The video description lays out the modifications that allow for automatic gunfire and provides a link to a site that sells the featured items, a violation of YouTube’s policies against providing instructions on how to convert a firearm to automatic and linking to a site that sells firearms. (The name of the YouTube channel, “ImprovedGunsDotCom,” is also the domain name of the website selling the accessories.)

Daniel Defense came under scrutiny for its marketing tactics targeting teens and young men after it emerged that the teenager who shot and killed 19 schoolchildren and two teachers in Uvalde, Texas, in May 2022 used a Daniel Defense AR-style rifle.

Questionable promotions

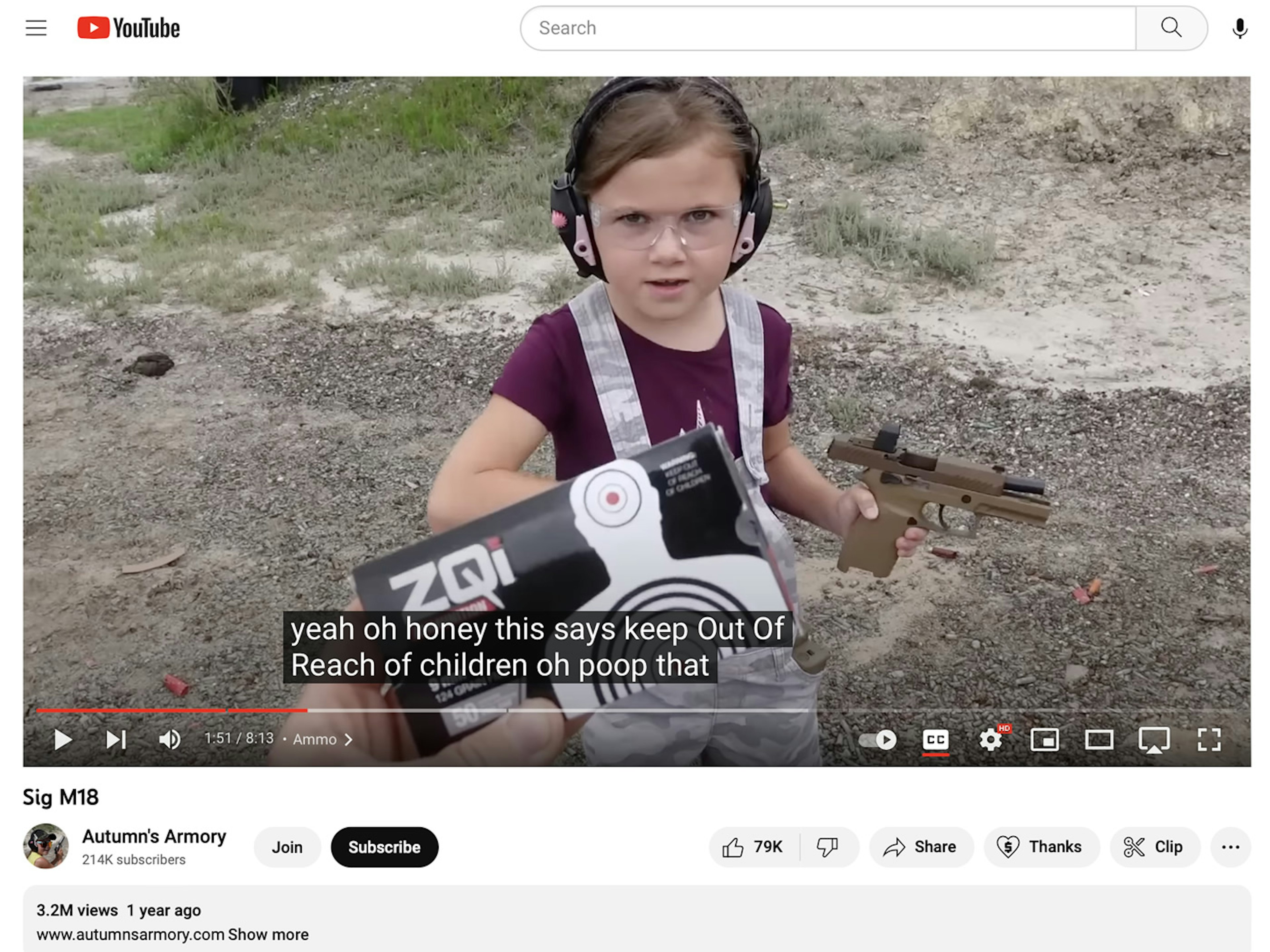

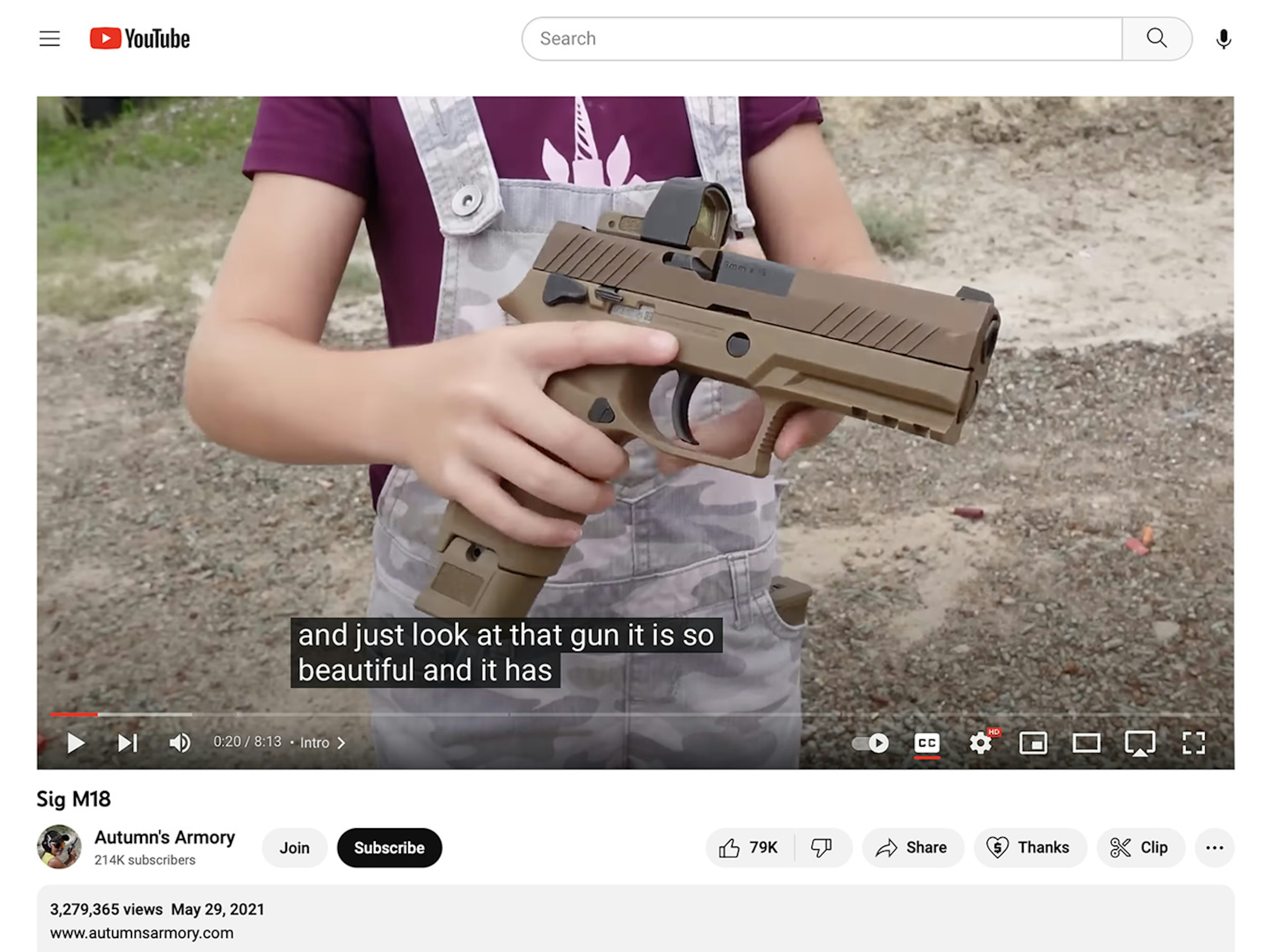

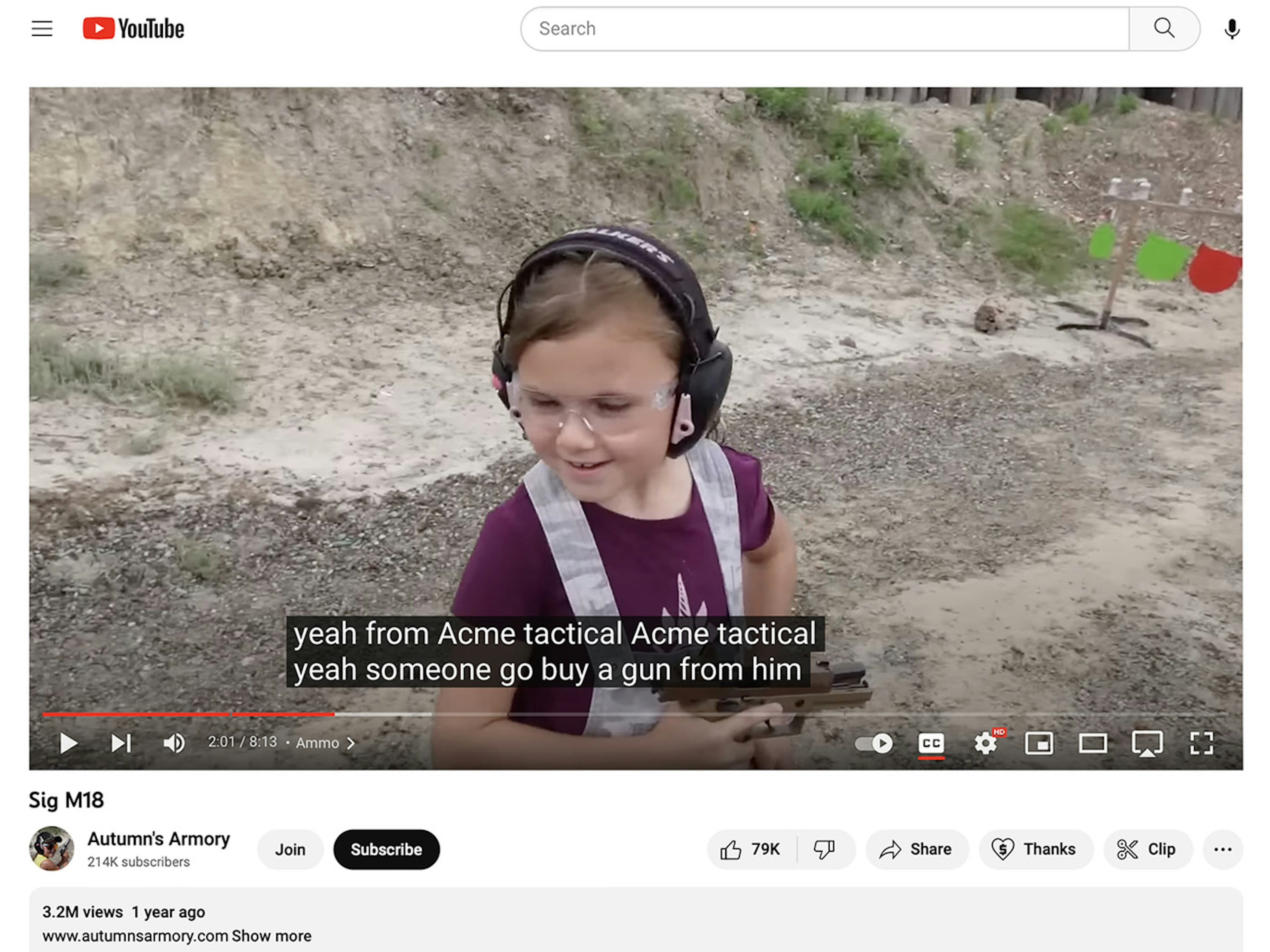

One video recommended by YouTube, which shows an elementary school-age girl firing a Sig Sauer M18 gun, appears to violate the platform’s policies in multiple ways. It shows a minor using a weapon, which YouTube prohibits, and carries advertising, even though its content makes it ineligible for monetization.

The video also includes what appears to be an undisclosed paid promotion. As the girl, Autumn, discusses the type of ammunition used for the video, her father (who is filming) prompts her by asking “And hey, who gave us these rounds?” Autumn replies, “Terry from Acme Tactical.” Her father follows up with “Acme Tactical, yep. Someone go buy a gun from him, he gives us all our ammo lately.” Google’s ad policies, which govern paid promotions, ban displays of guns and ammunition.

Another recommended video from the verified channel Kentucky Ballistics, which gives a demo with rubber bullets, includes a paid promotion for the Sonoran Desert Institute, a trade school for gunsmithing and firearms technology.

This video pushed to a minor account showed a young girl holding and firing a Sig Sauer M18 pistol.

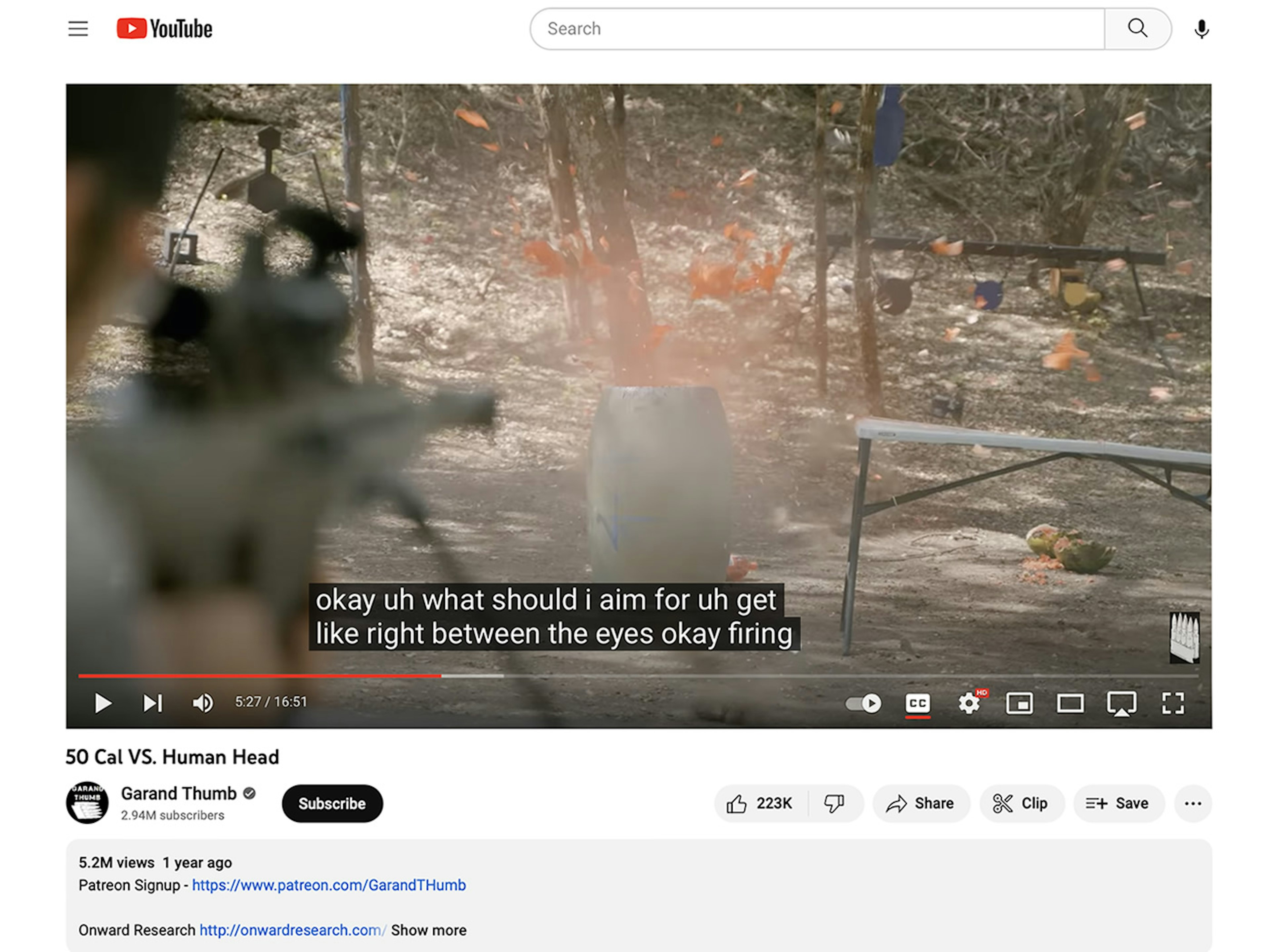

YouTube pushed content involving shooting and weapons from the verified channel Garand Thumb to several of the minor accounts. One Garand Thumb video titled “50 Cal VS. Human Head,” which showed a 50 caliber rifle firing at a replica of a human skull, got pushed twice to the nine-year-old engagement account and 22 times to the 14-year-old engagement account.

The video doesn’t appear to violate YouTube’s policies itself, but it’s noteworthy that YouTube is recommending it. The Buffalo shooter mentioned Garand Thumb in the manifesto he issued before his attack, a fact noted by the New York Attorney General’s office, which found that the shooter’s “knowledge of weapons and related equipment” came in part from “instructional YouTube videos like those of Garand Thumb.” (Another video served to our minor accounts came from FPSRussia, which was also listed in the Buffalo shooter’s manifesto.)

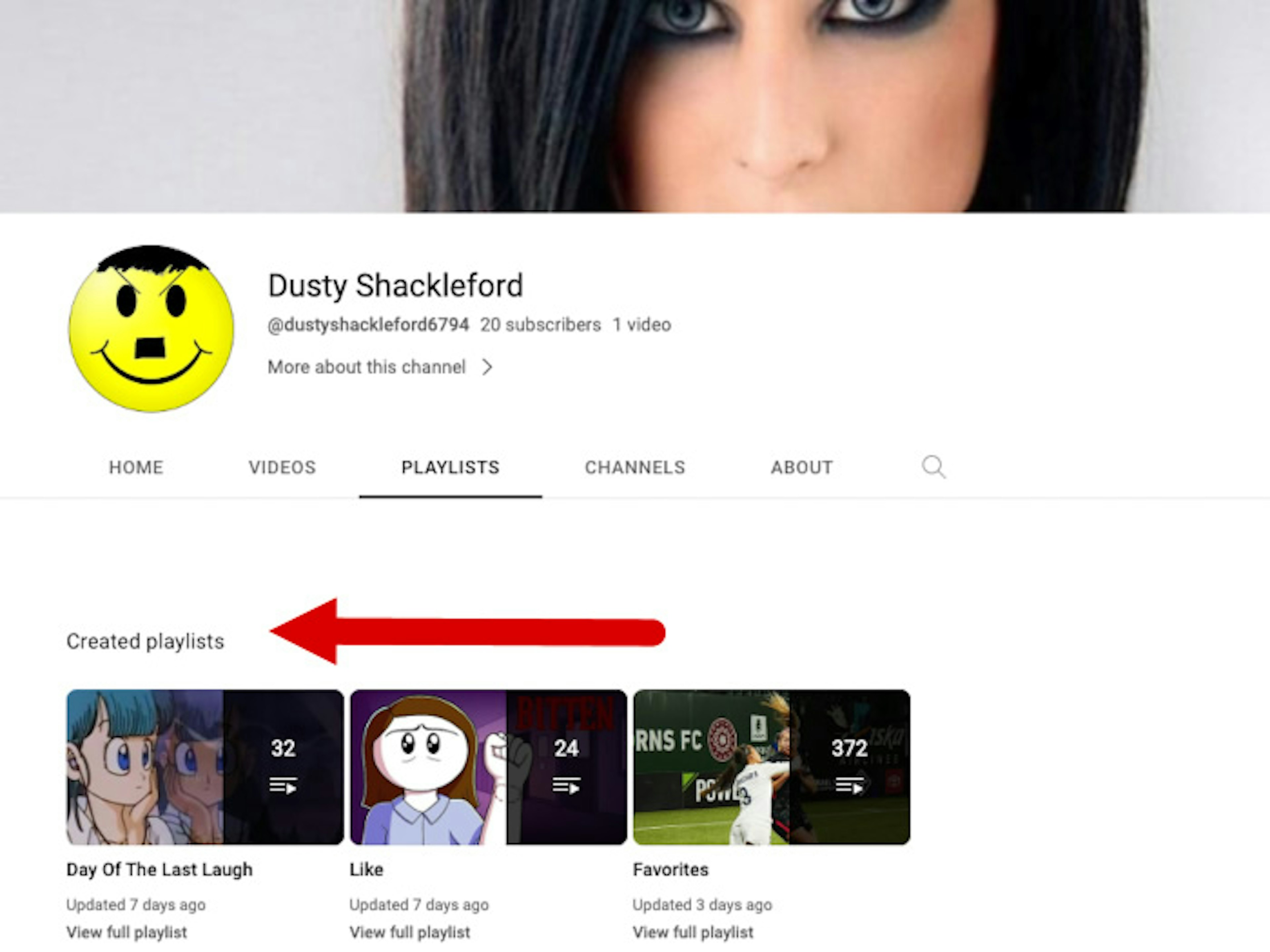

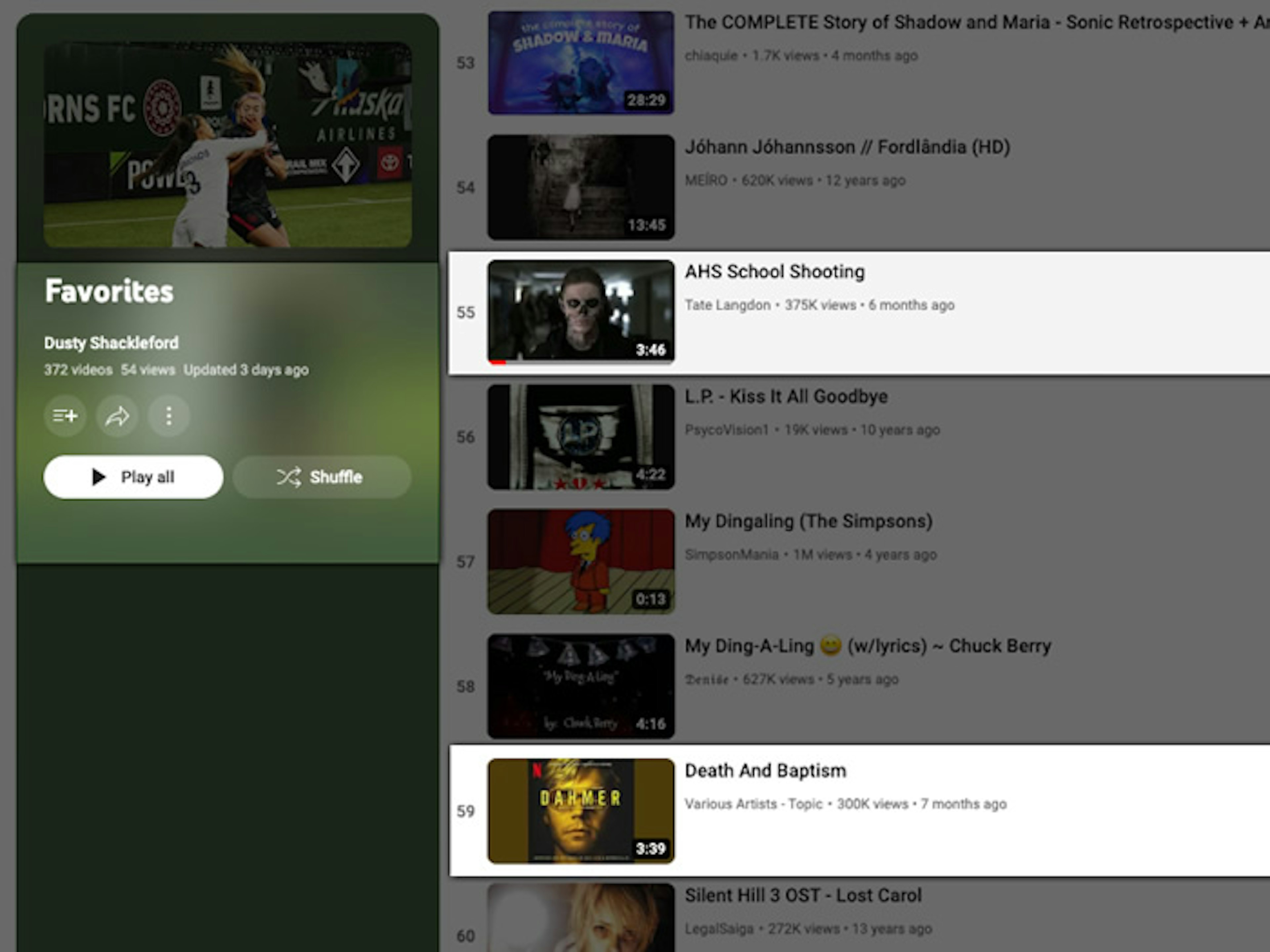

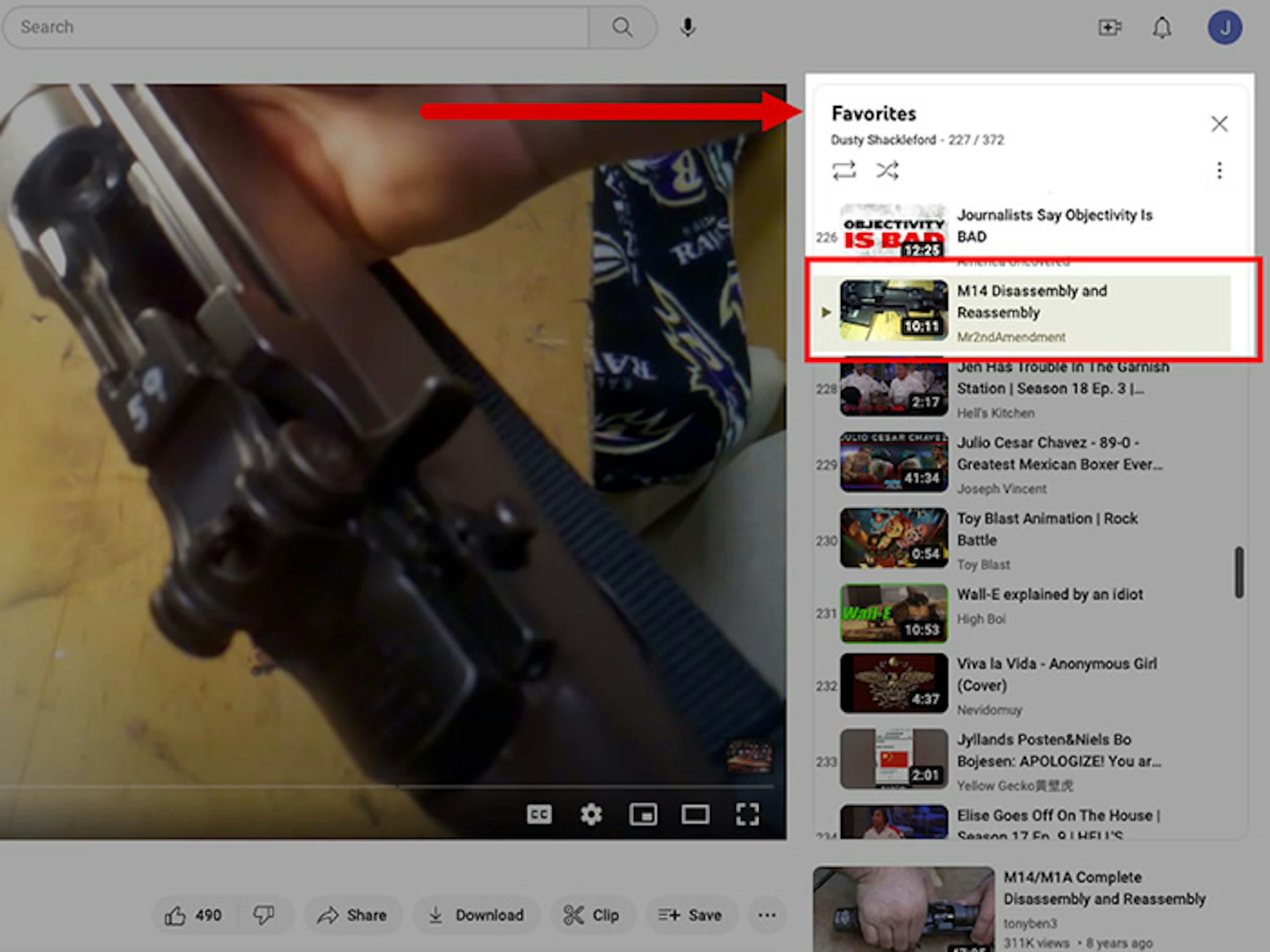

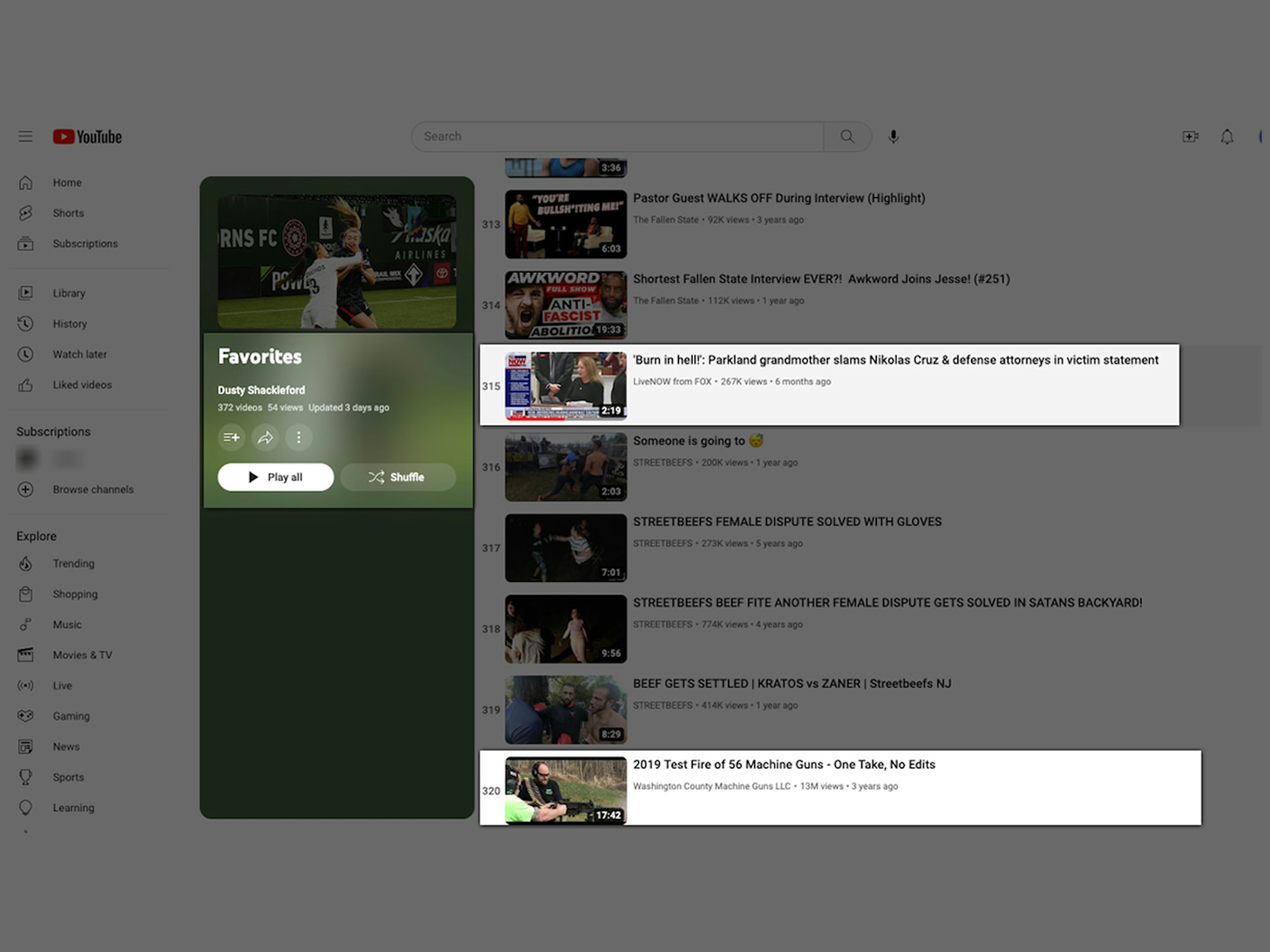

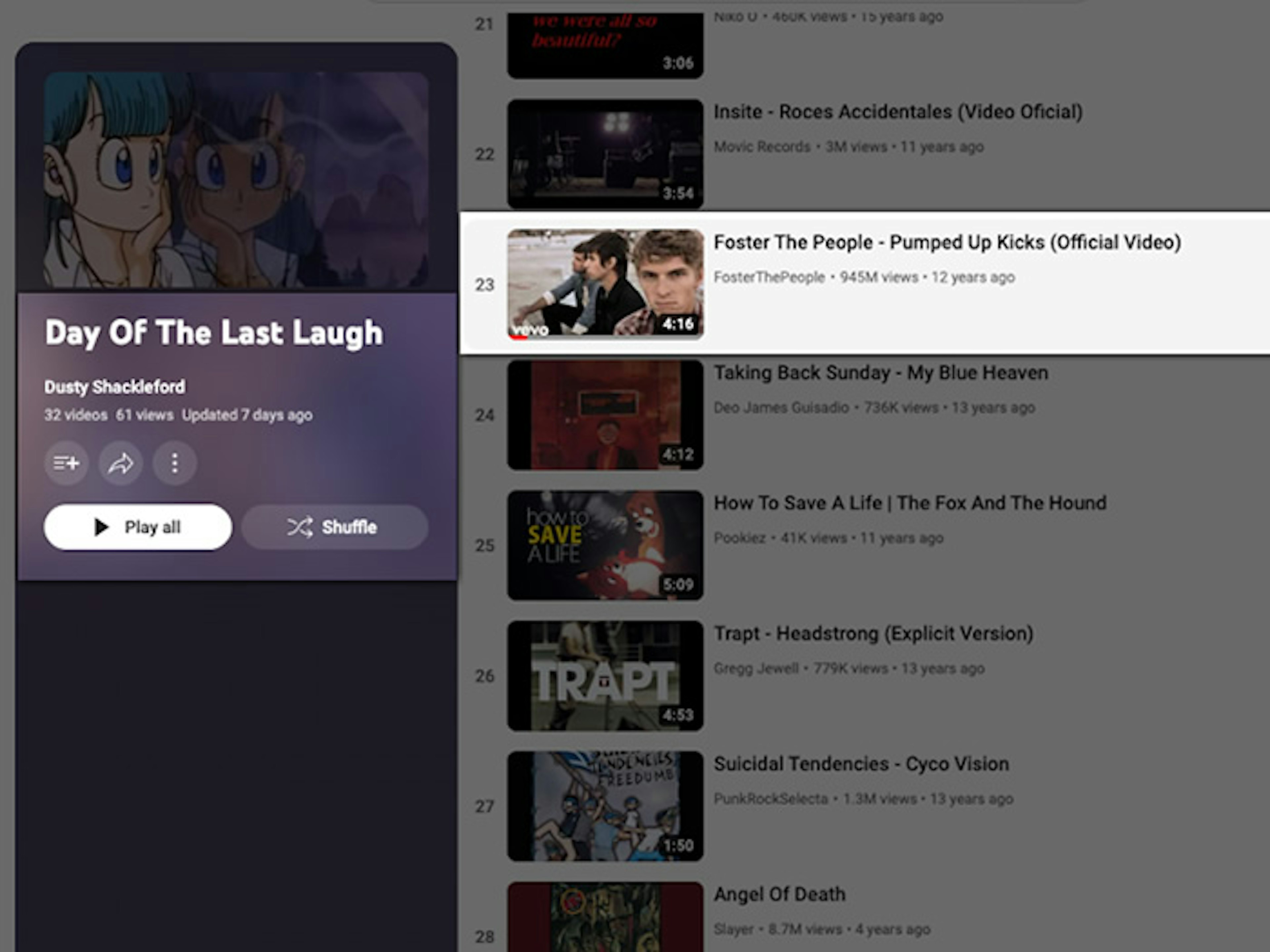

As TTP was preparing this report, news emerged about a YouTube account attributed to the gunman behind the May 6 shopping mall shooting in Allen, Texas, that left eight people dead. The account, which TTP examined and documented before it was removed by YouTube, had some striking parallels to the content recommended to minors in this investigation. A favorites playlist evidently compiled by the shooter included a clipped scene of a school shooting from the TV show “American Horror Story,” instructions on how to assemble rifles, and content about serial killer Jeffrey Dahmer. Another playlist on the account called “Day of the Last Laugh” featured a music video for the song “Pumped Up Kicks.”

The playlists in a YouTube account attributed to the gunman in the recent Allen, Texas, shooting had some striking parallels to the content recommended to minors in this investigation.

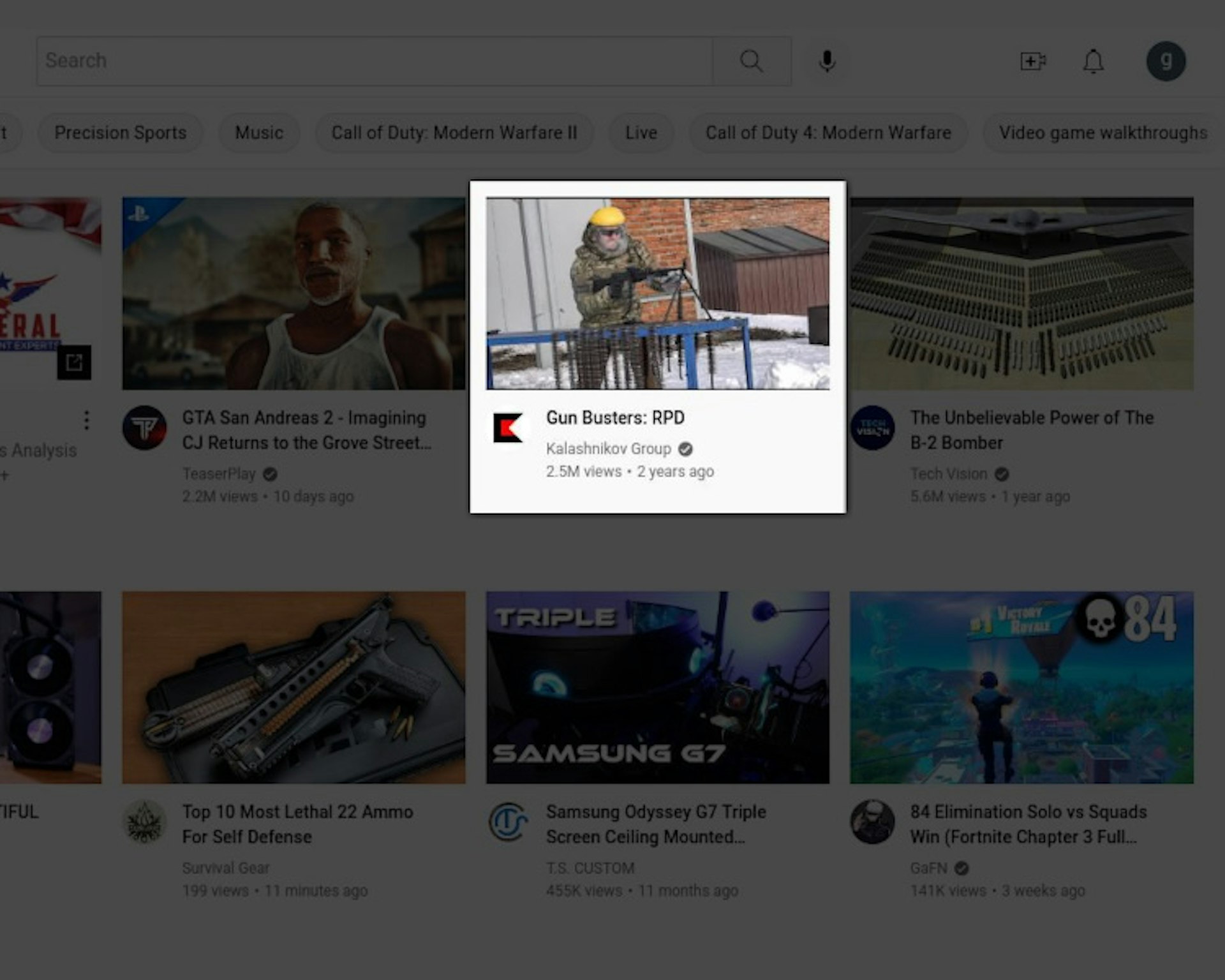

Russian weapons and 3D-printed guns

During the study, our researchers discovered that YouTube’s algorithm pushed two videos to the 14-year-old engagement account from a verified channel for Kalashnikov Group, the Russian weapons manufacturer. The videos included scenes of people in combat fatigues firing rifles.

Kalashnikov Group includes Kalashnikov Concern, the original maker of the AK-47, which was sanctioned by the U.S. government in 2014 over Russia’s occupation of Crimea and efforts to destabilize eastern Ukraine. (In Jan. 2023, the U.S. also sanctioned Kalashnikov Concern’s largest shareholder and president, Alan Lushnikov, following Russia’s invasion of Ukraine.)

Given the sanctions history dating back to 2014, it’s not clear why YouTube allowed Kalashnikov Group to maintain a verified channel or why it served up the channel’s content to one of our 14-year-old test users in Nov. 2022. Both videos now link to a screen that reads “This video is no longer available because the YouTube account associated with this video has been terminated.”

(According to YouTube intelligence site SPEAKRJ, the Kalashnikov Group YouTube channel ran ads before it was removed from the platform and made as much as $4,300 per month, which suggests that YouTube was profiting from the sanctioned Russian weapons manufacturer.)

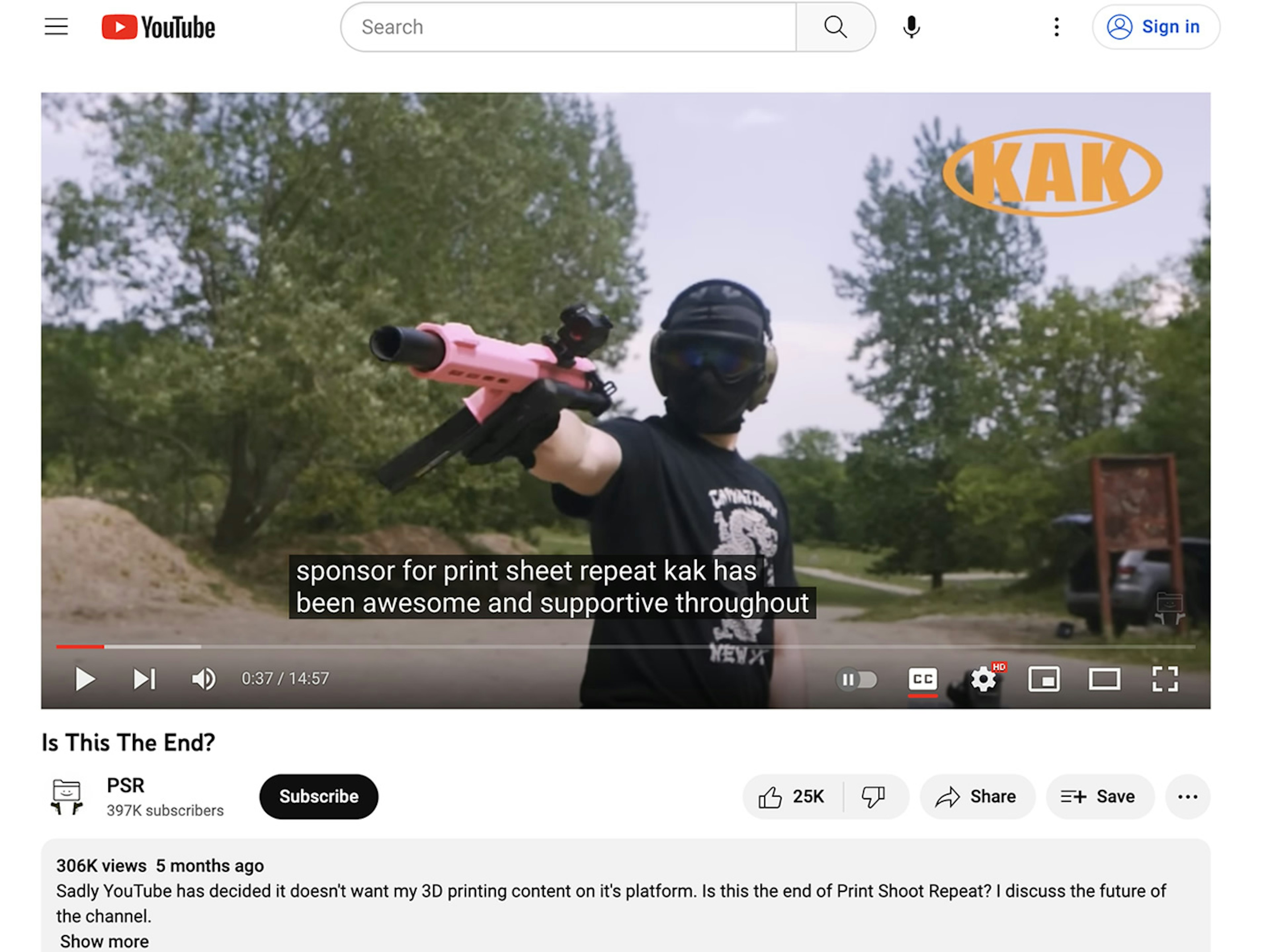

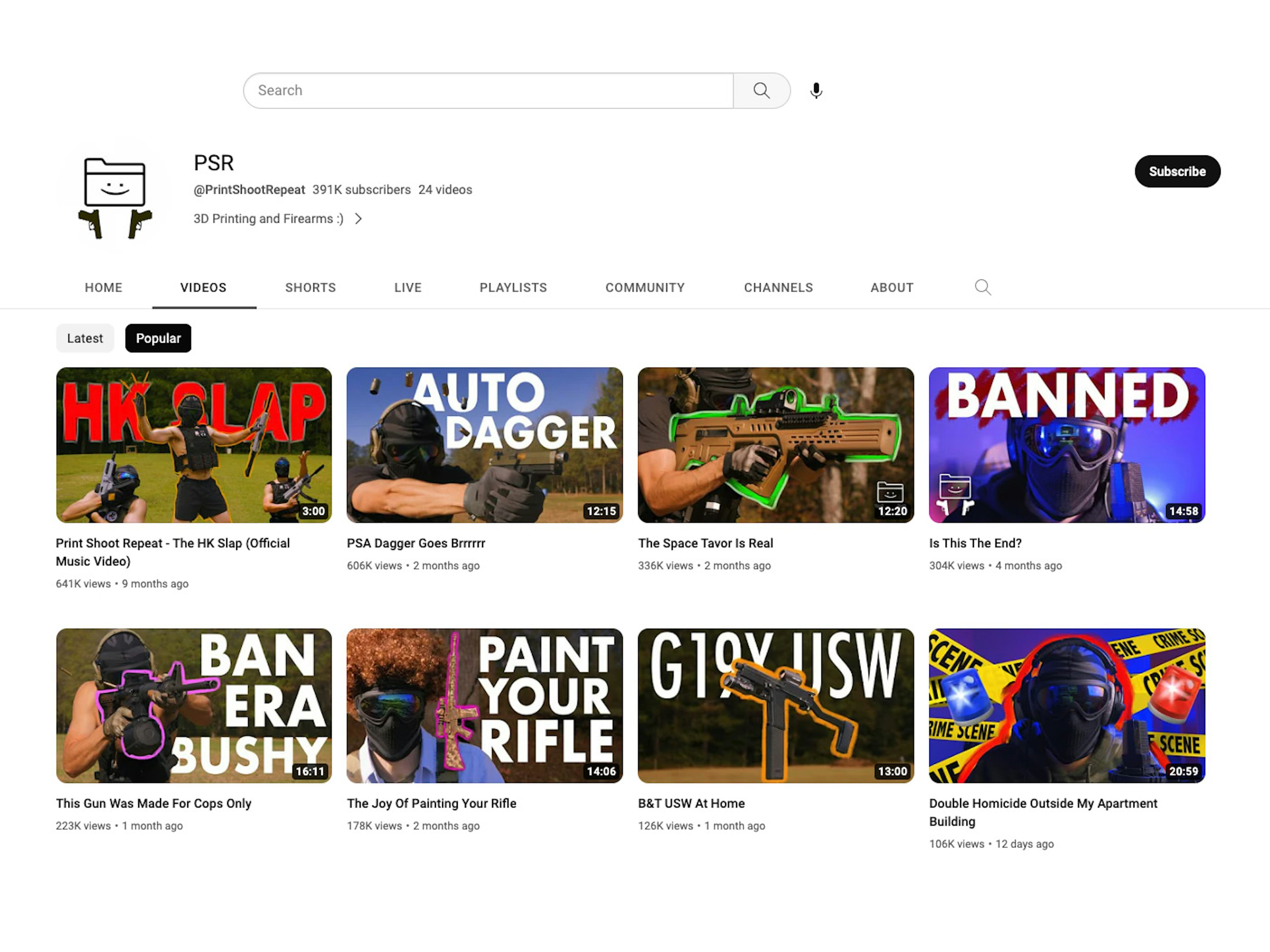

YouTube’s algorithm also pushed videos from the channel “PSR,” short for Print Shoot Repeat, to the nine-year-old engagement account. One of the videos shows shooting demonstrations of a variety of 3D-printed guns and promotes a company that makes parts for AR-15 assault-style rifles. (In the videos, the creator discusses the fact that YouTube removed several of his 3D-printing weapons videos from the channel for violating platform policies.)

While displaying 3D-printed guns, this video also promoted a company that makes parts for AR-15 assault-style rifles.

3D printing is used to make so-called ghost guns, which do not have serial numbers and can be acquired without a background check, making them difficult to trace. The Biden administration and a number of state attorneys general have sought to crack down on the weapons as they proliferate and turn up in an increasing number of violent crime cases.

Dahmer movie

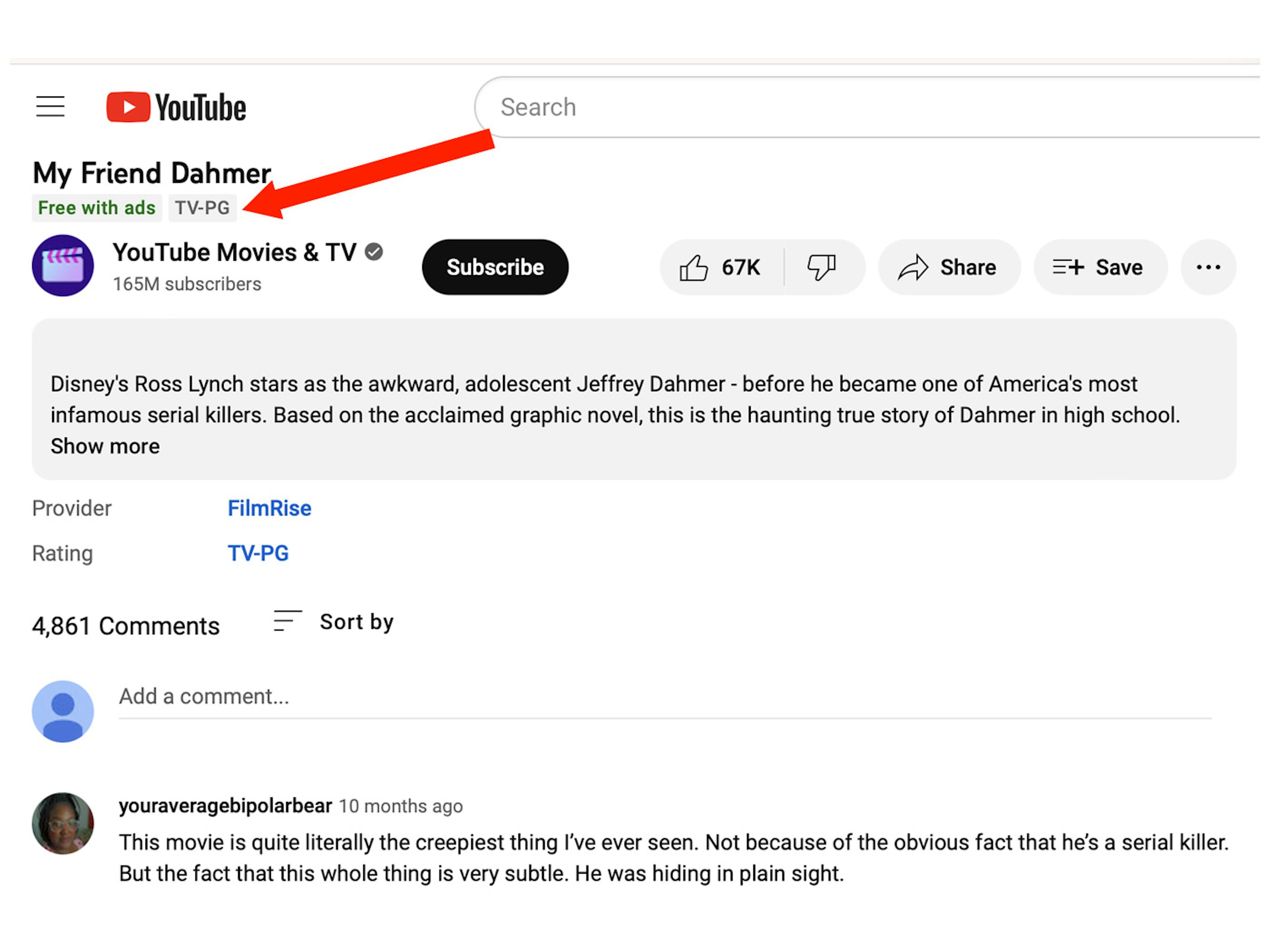

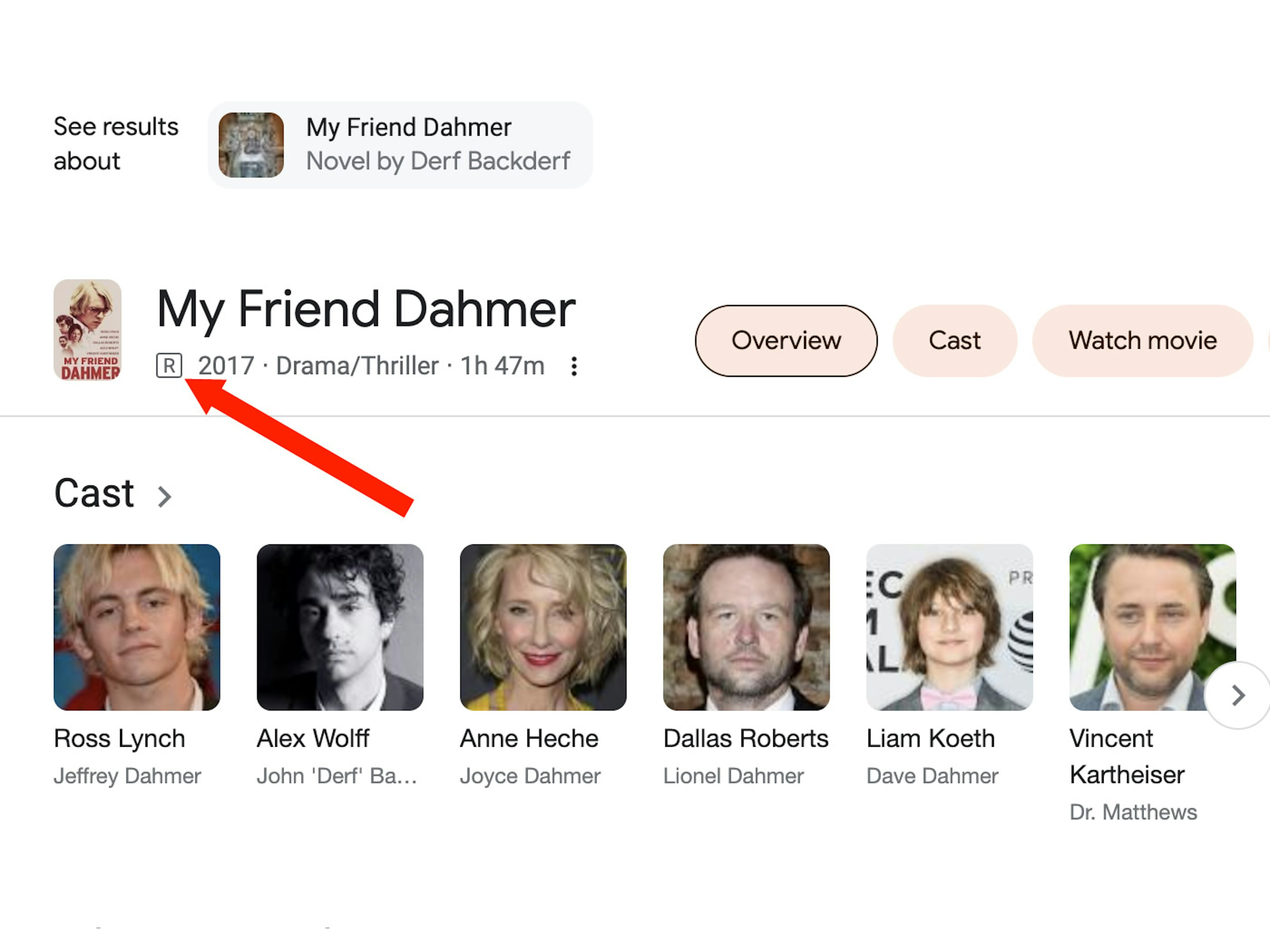

In addition to recommending gun- and shooting-related content, YouTube repeatedly pushed a movie about serial killer Jeffrey Dahmer to three of the minor accounts.

Dahmer, who was arrested in 1991, is one of the most notorious American serial killers, known for killing 17 men and boys and for acts of cannibalism and necrophilia. The movie recommended by YouTube, My Friend Dahmer, focuses on Dahmer’s high school years and contains scenes of Dahmer preparing to attack people with a baseball bat, killing and dissecting animals, and picking up a hitchhiker who, the end credits reveal, was never seen again.

YouTube suggested the Dahmer movie multiple times to both nine-year-old accounts and the 14-year-old engagement account. The movie appears to violate YouTube’s policies against “violent or gory content intended to shock or disgust viewers” and “content showing a minor participating in dangerous activities.” YouTube did nothing to age-restrict the video despite saying it may do so with content that features “harmful or dangerous activities.”

Common Sense Media, which provides media reviews for parents with kids, warns that My Friend Dahmer includes disturbing images, adding, “Although Dahmer is played by Disney star Ross Lynch, it's not appropriate for young fans of his show Austin & Ally.” One comment on the YouTube video says the film “is quite literally the creepiest thing I’ve ever seen.”

YouTube pushed the Dahmer film from its own Movies & TV channel, where it was incorrectly rated as PG. The movie is actually rated R, meaning viewers under 17 require an accompanying parent or adult guardian, per the film’s press kit and the IMDb movie database. It’s not clear why YouTube got the rating wrong; YouTube’s parent company Google gives the correct rating in an information box that appears next to search results about the movie.

YouTube gave the wrong rating (TV-PG) to the Dahmer movie.

The video of the Dahmer movie is monetized with advertising, meaning YouTube makes money off it every time a user watches an ad. (YouTube began testing free, ad-supported streaming content earlier this year.)

During the study, TTP found another instance of YouTube’s Movies & TV channel mis-rating a serial killer film, this one about Ted Bundy. YouTube gave the R-rated film, which contains graphic scenes of Bundy murdering women at a sorority house and being executed in the electric chair, a TV-14 rating.

Conclusion

For more than two decades, politicians have pointed to violent video games as the root cause of mass shootings in the United States, even though researchers have found no evidence to support that claim.

But TTP’s study shows there is a mechanism that can lead boys who play video games into a world of mass shootings and gun violence: YouTube’s recommendation algorithm. As this study shows, test accounts identified as nine- and 14-year-old boys that did nothing but watch gaming videos got served content about weapons and shootings that often violated YouTube’s own policies. The more the boys watched the videos recommended by YouTube, the more of this content they got.

While these were fictitious accounts, the findings raise new questions about the impact of YouTube’s recommendations on the development and mental health of young users, at a time when mass shooters are trending younger.

YouTube says it may age-restrict videos that show “adults participating in dangerous activities that minors could easily imitate” or contain “violent or graphic” material. Many of the gun-related videos identified in this report appeared to meet those criteria, but YouTube still served them up to minors.

More on Methodology

The four test accounts in this study watched playlists of gaming videos to establish them as interested in video games. The videos were selected based on conversations with parents of real nine-year-old and 14-year-old gamers about which games were popular with their kids. The two nine-year-old accounts watched the same initial playlist, and the two 14-year-old accounts watched the same initial playlist. Each playlist was composed entirely of gaming videos. All four personas liked each video in their playlist and watched them all the way through three times.

Nine-Year-Old Playlist Examples:

- LEGO Star Wars: The Skywalker Saga - Lightsaber Combat Gameplay

- The Most Insane 900 IQ Among Us Outplay!

- i finally did it.... | Five Nights At Freddy's (FNAF) Part 1

- 35+ Cyberpunk 2077 Patch 1.6 Mods That COMPLETELY Changes The Way You Play!

- (PS5) Uncharted 4: A Thief's End - THE BEST CHASE IN GAMING HISTORY [4K HDR]

14-Year-Old Playlist Examples:

- GTA 5 vs FAR CRY 6 | Ultimate Face-Off

- Red Dead Redemption 2 - First Person & Third Person Action Combat Gameplay

- GoldenEye 007 - N64 Gameplay

- Halo Infinite Season 2 LAST SPARTAN STANDING Gameplay 4K

- Most BRUTAL Mission from Call of Duty ! No Russian

After each persona had watched its playlist of gaming videos, TTP recorded YouTube recommendations to the accounts over the course of 30 days. Each age group had one account that watched a selection of 50 recommended videos and one that did not. From Nov. 1 to Nov. 30, 2022, our researchers monitored and logged all YouTube recommendations to each of the four accounts. YouTube’s algorithm recommended thousands of videos to each of the child personas during the 30-day period.

The study identified four categories of firearms-related content that was fed to minor accounts by YouTube’s recommendation algorithm.

- Toy firearms content, including videos of BB guns, airsoft guns, functioning Lego weapons, and children’s toy guns. This did not include content related to knives, swords, or gaming.

- Real firearms content, including videos of shooting, product reviews of firearms or firearm accessories, and explainers and “how-to” videos for guns, rifles, shotguns, and other firearms. This did not include military training content.

- Gun crime content, including news reports, closed circuit television footage, body camera footage, and creator overviews of actual incidents and crimes that took place involving guns.

- School shooting content, including news reports, closed circuit television footage, and body camera footage related to school shootings, school shooting perpetrators, or instances of weapons brought to schools. This category also included graphic scenes from movies, television, and games of school shootings.

Dewey Square Group contributed research and analysis on YouTube’s recommendation algorithm for content pushed to the child accounts.