YouTube’s recommendation algorithm pushes users into ideological filter bubbles that are stronger for viewers of right-wing content, according to new research by the Tech Transparency Project (TTP) that highlights how the platform drives political polarization.

Over hundreds of clicks and views, TTP researchers found that the YouTube algorithm creates a feedback loop for users who dabble in Fox News or MSNBC content, serving up the same ideological flavor of videos over and over again.

But the investigation also found that YouTube’s filter bubbles are more robust for viewers of Fox News content, keeping them in an endless cycle of like-minded videos even as users who started with MSNBC content eventually broke free.

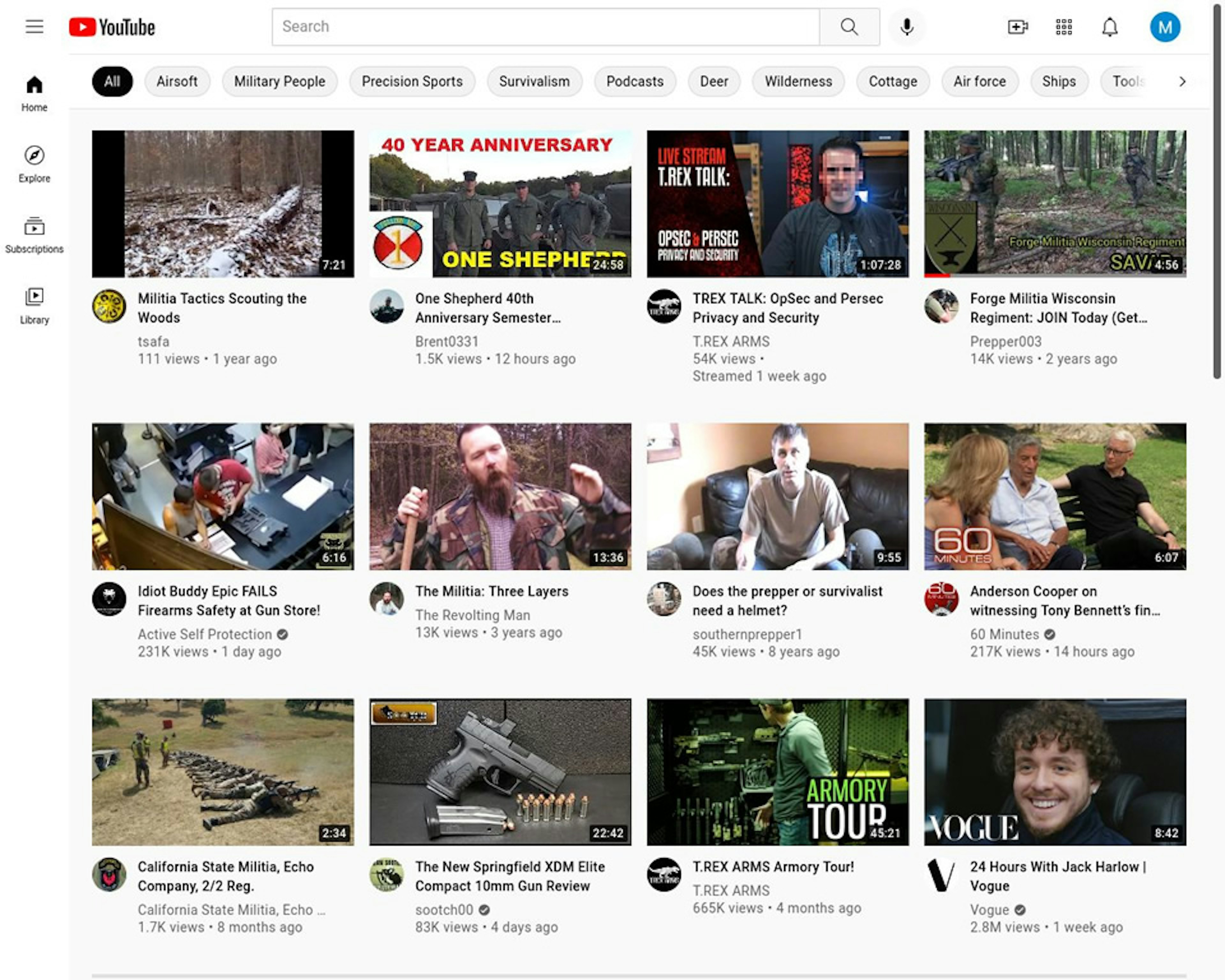

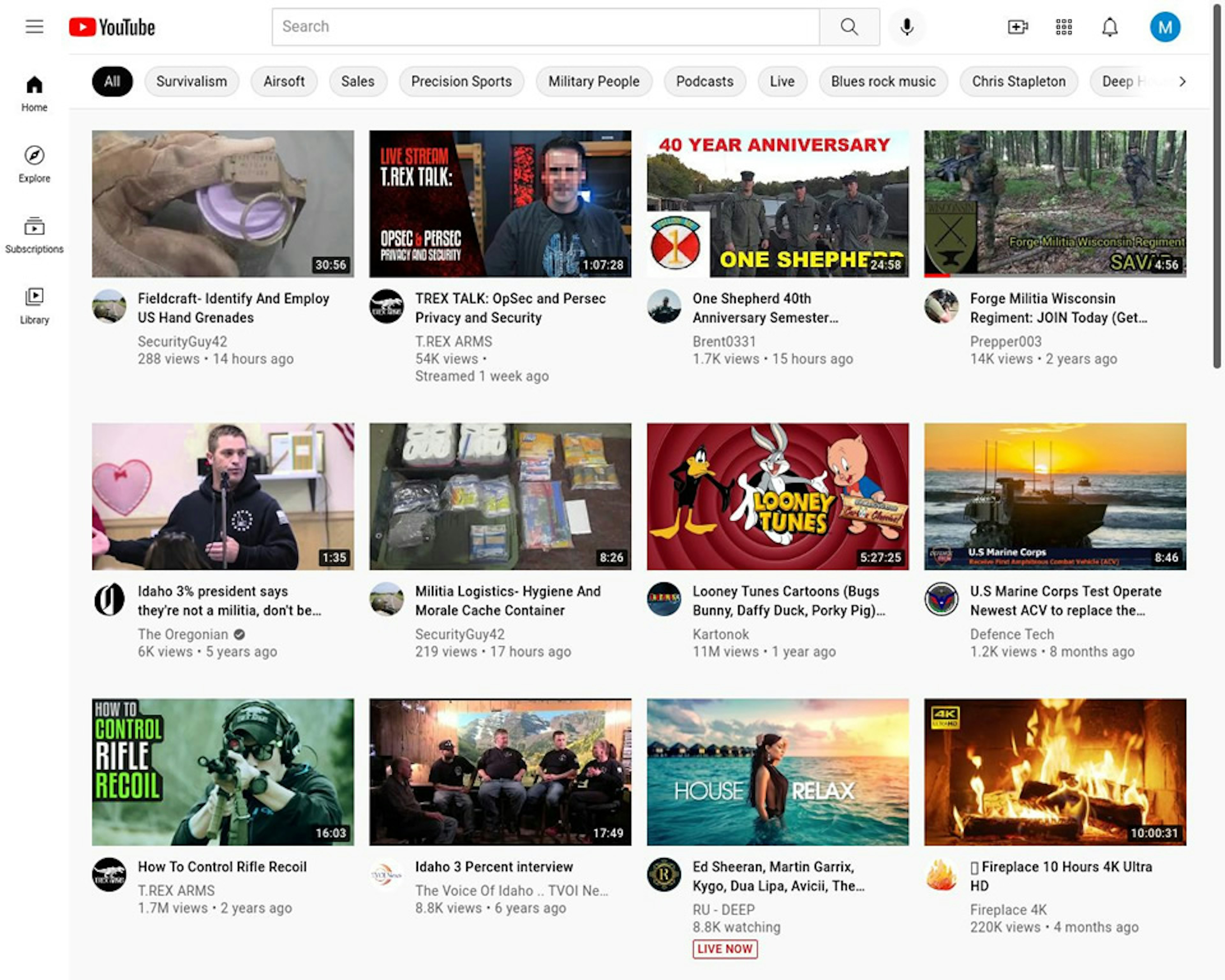

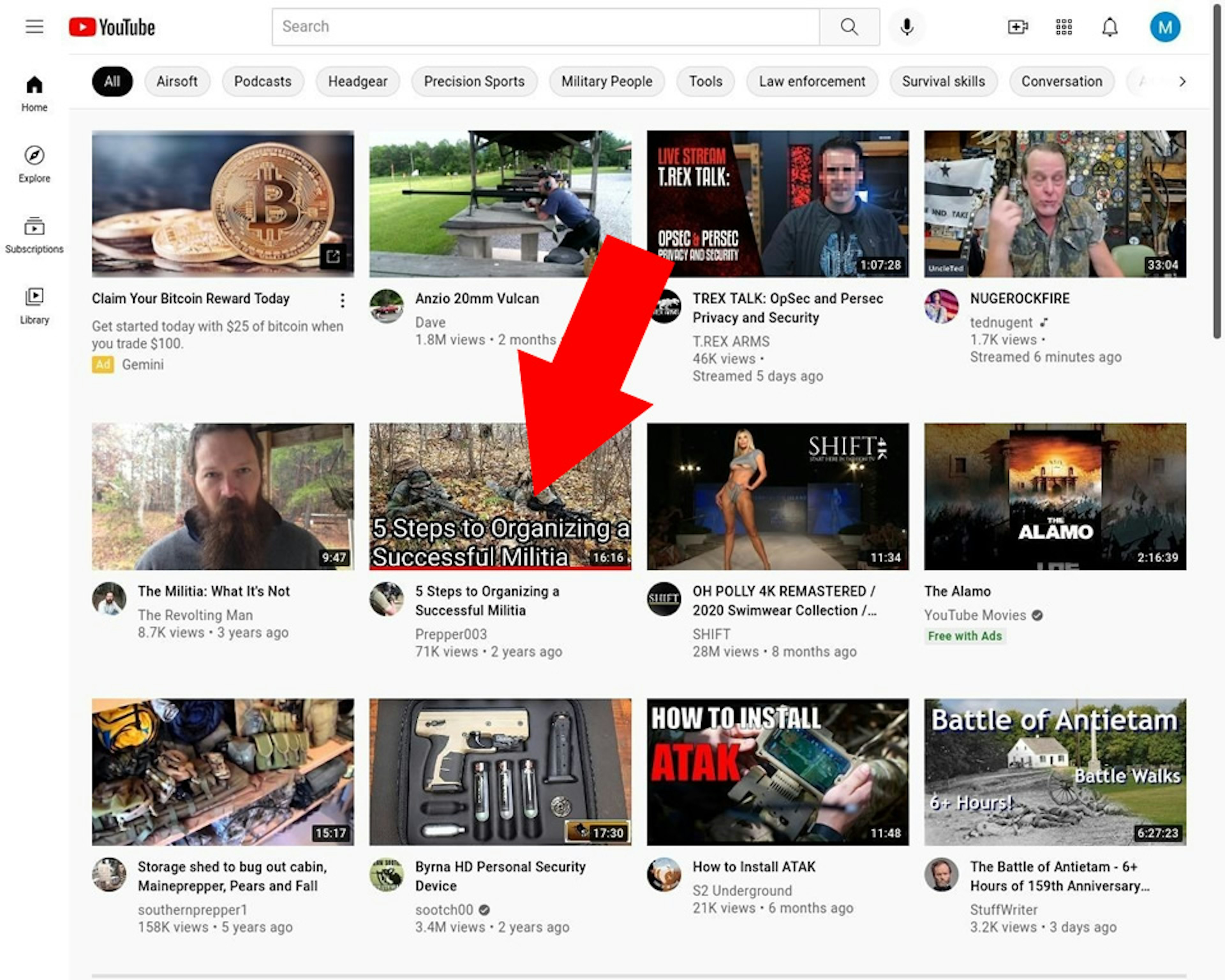

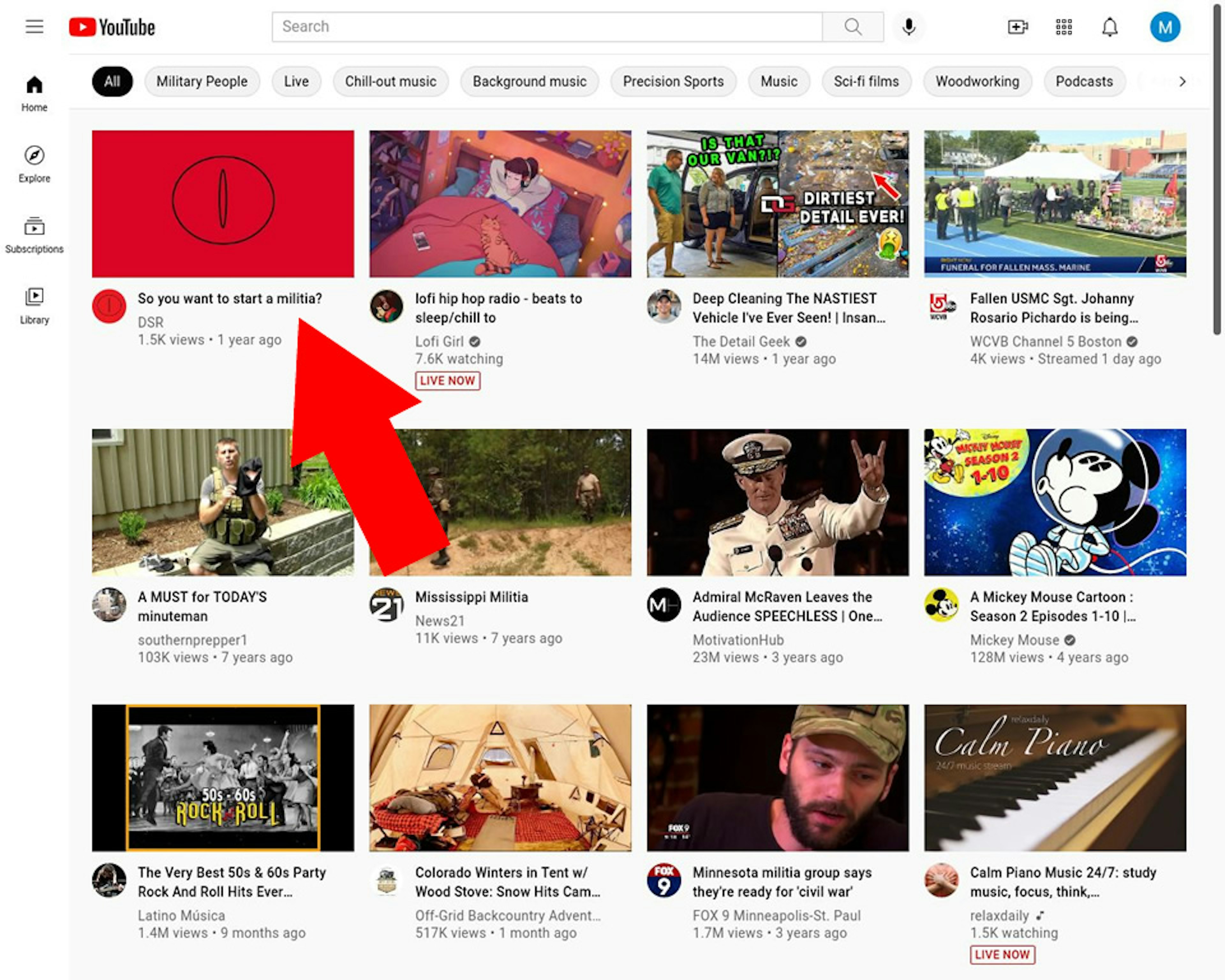

Another alarming finding: When researchers showed an interest in militant movements, YouTube suggested videos with titles like “5 Steps to Organizing a Successful Militia” and “So You Want to Start a Militia?” The platform also recommended videos about weapons, ammunition, and tactical gear to the militia-curious viewer.

The results highlight YouTube’s role in keeping people in political echo chambers and reinforcing extremist views and behavior. Google’s YouTube often escapes the scrutiny focused on its tech peers Facebook and Twitter, but as TTP’s research demonstrates, the video giant is helping to fuel political polarization—one of the key issues now confronting Big Tech.

Here are the key takeaways from the research:

- YouTube pushes users into filter bubbles that dramatically change the news results they see based on the ideological orientation of the content they have already viewed.

- These filter bubbles appear to be more robust for right-leaning content. YouTube fed Fox News viewers an endless stream of right-wing content, whereas MSNBC viewers eventually broke out of their left-leaning filter bubble.

- Fox News has a heavy presence in the recommended videos. YouTube’s algorithm even started showing the MSNBC viewer content from Fox after it ran out of left-leaning recommendations.

- YouTube recommended videos about militia organizing and tactical weapons to viewers who had previously shown an interest in militia movements.

- Google has shown it can tweak its algorithm to address issues like extremism, but has not taken such steps with YouTube.

Powerful feedback loop

YouTube’s recommendation algorithm—the opaque formula that the platform uses to suggest and automatically play the next video for a viewer—has become a source of debate over whether it leads to radicalization. TTP set out to test that issue.

Our researchers registered new Google accounts and, using a clean browser, watched a collection of top YouTube news videos from either Fox or MSNBC. They then watched the first 100 videos that YouTube recommended after they visited each of three jumping-off points on the platform: the first result in the trending news feed, the first search result for the keywords “Election Results,” and the second video on YouTube’s home feed.

The results showed that YouTube served up a feedback loop of content that reflected the user’s original dabbling in Fox or MSNBC. TTP did not find evidence that YouTube fed users increasingly radicalized content, but the platform did keep them in ideological corners.

For example, the users who clicked the top story in YouTube’s trending news feed got entirely different recommendations depending on whether they had previously watched Fox or MSNBC. A user who watched MSNBC videos saw a Biden White House press conference, a story about the sex trafficking case against an associate of Republican Rep. Matt Gaetz of Florida, and leaked video of Republican officials discussing voter suppression. A user who watched Fox News saw a story accusing the Biden administration of covering up the origins of the Covid pandemic, a segment about Critical Race Theory, and a video accusing the left of hypocrisy regarding climate change.

Notably, though, YouTube’s filter bubbles appeared to be stronger for the Fox News viewer. Over time, the MSNBC viewer broke out of their filter bubble as the recommendation engine began to show them stories from a variety of news sources, including Fox News. The Fox News viewer, on the other hand, received a steady diet of conservative videos—many from Fox’s most incendiary commentators—through the end of the study.

The findings raise questions about YouTube’s role in right-wing polarization and the spread of conspiracy theories stoked by Fox News. In 2017, YouTube changed its algorithm to recommend videos that people would find more gratifying, despite concerns that the move would reinforce pre-existing viewpoints and misinformation. YouTube executives at the time acknowledged the change could help misinformation and hoaxes spread on the platform, according to Reuters.

The Fox factor

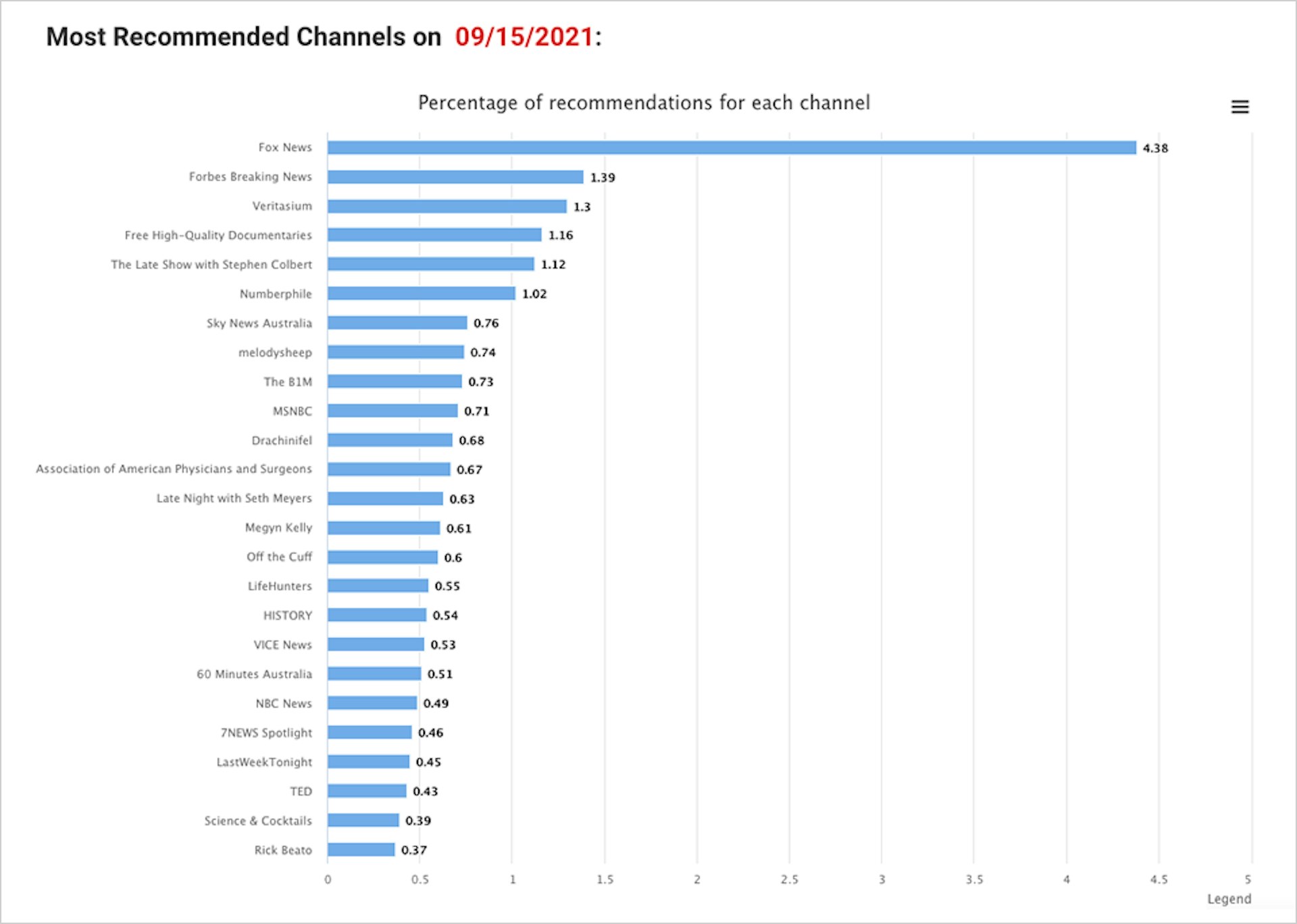

TTP’s research highlights the prominence of Fox News in YouTube’s recommended videos. The findings echo data from AlgoTransparency, a research consortium run by former YouTube engineer Guillaume Chaslot, which found that Fox is YouTube’s most-recommended information channel. According to AlgoTransparency, videos on the Fox News channel constituted nearly 4.4% of all recommendations on the platform on September 15, 2021, three times more than the next most-recommended channel. YouTube recommended MSNBC less than 1% of the time.

AlgoTransparency data

One possible explanation for this trend is simply that more Fox News viewers than MSNBC viewers use YouTube. But TTP’s results show the clear role played by YouTube’s algorithm. For a user who dabbled in Fox News, YouTube recommended Fox videos 90 out of 100 times. We saw this pattern even when users started from the trending news feed, which YouTube says it doesn’t personalize.

In contrast, when we primed a user account by watching MSNBC, YouTube recommended MSNBC videos 14 times in the first 20 news stories, maintaining a left-leaning lineup for a time before losing its ideological focus. In fact, Fox News had a heavy presence in the remaining 80 videos served up to the MSNBC viewer, accounting for more than half of them (46).

The secret to Fox News’ success on YouTube may be the channel’s inflammatory reports. It’s long been observed that YouTube prioritizes content that encourages user outrage, which the tech industry euphemistically calls “engagement.” Of course, left-leaning MSNBC can also serve as an outrage machine. But Fox News brings some unique attributes. One 2020 study found that Fox News was five times more likely than MSNBC to use “hate” and similar negatively charged words, usually in the context of telling its viewers that cultural and political elites hate Fox viewers. Another study showed that Fox News headlines feature more negative sentiment than those of CNN.

Media reports indicate that a 2019 YouTube algorithm change, meant to promote “authoritative news sources” over conspiracy theories and misinformation, had the effect of boosting Fox News on the platform. A 2020 study by Chaslot and researchers at the University of California, Berkeley, underscored that trend, finding that when YouTube stopped recommending conspiracy theorists like Alex Jones, Fox News almost always filled the void.

Meanwhile, Fox has gotten better at social media strategy and reportedly has an entire team devoted to optimizing the channel’s content for YouTube. It’s also notable that Fox News commentators frequently stoke conspiracy theories not far afield from Jones’ claims, on topics ranging from murdered DNC staffer Seth Rich to the nation’s top infectious disease expert Dr. Anthony Fauci—and of course, the bogus claim that the 2020 election was stolen from Donald Trump.

Militia videos

When users start by watching Fox News, they remain in a familiar echo chamber of right-wing opinion. But when they start by watching more extreme videos, the echoes become toxic.

In an experiment where a user started by watching videos about American militia movements, YouTube recommended additional extremist content, including videos that purport to teach tactical skills like operational security, using military equipment, building homemade weapons, and using household tools for self-defense. YouTube’s algorithm also offered up general instructional videos like “5 Steps to Organizing a Successful Militia” and “So You Want to Start a Militia?” YouTube repeated these recommendations on multiple occasions.

This is not an entirely new phenomenon for YouTube. Reporting from 2017 showed how a far-right Christian militia maintained a YouTube channel with combat training clips. In recent years, attention has turned to Facebook’s ongoing problems with militias on its platform—scrutiny that has become more intense in the wake of the Jan. 6 U.S. Capitol insurrection. But as the above findings show, YouTube has a problem with such extremist content as well. YouTube’s community guidelines state that it “doesn’t allow content that encourages dangerous or illegal activities that risk serious physical harm or death.”

In the case of our hypothetical militia-curious YouTube user, YouTube’s recommendations could have had deadly consequences. But it doesn’t have to be this way. In 2016, amid concerns about ISIS recruitment online, YouTube’s parent company Google said it would tweak its search algorithm to recommend counter-extremism content when it detected that a user had an interest in joining a terrorist organization. Facing a backlash over inappropriate recommendations and an addictive user experience, YouTube recently gave parents the option of turning off the autoplay feature and restricting recommended videos to human-curated selections on YouTube Kids. These changes show that a healthier YouTube is within the company’s grasp if it chooses to prioritize user well-being over engagement.

Conclusion

YouTube’s ultimate agenda is to keep users on the platform. When a user shows an interest in a topic that is known to drive engagement, YouTube will suggest an endless stream of similar content in a bid to further entrench that interest. While the result could be innocuous for a user who likes cat videos, funneling viewers of political content into ideological filter bubbles can lead to a distorted worldview.

A 2020 paper by academics Jonas Kaiser and Adrian Rauchfleisch argues that consuming a steady stream of intellectually homogeneous content can lead to the normalization of radical ideas and ultimately to greater political polarization. The more deeply users engage with YouTube, the more estranged they become from their fellow citizens.

Note: Dewey Square Group contributed research and analysis on YouTube’s recommendation algorithm for militia-related content.