Instagram continues to promote dangerous eating disorders to vulnerable users including young teenagers, according to a new investigation by Reset and the Tech Transparency Project (TTP) that highlights the platform’s role in amplifying unhealthy body ideals.

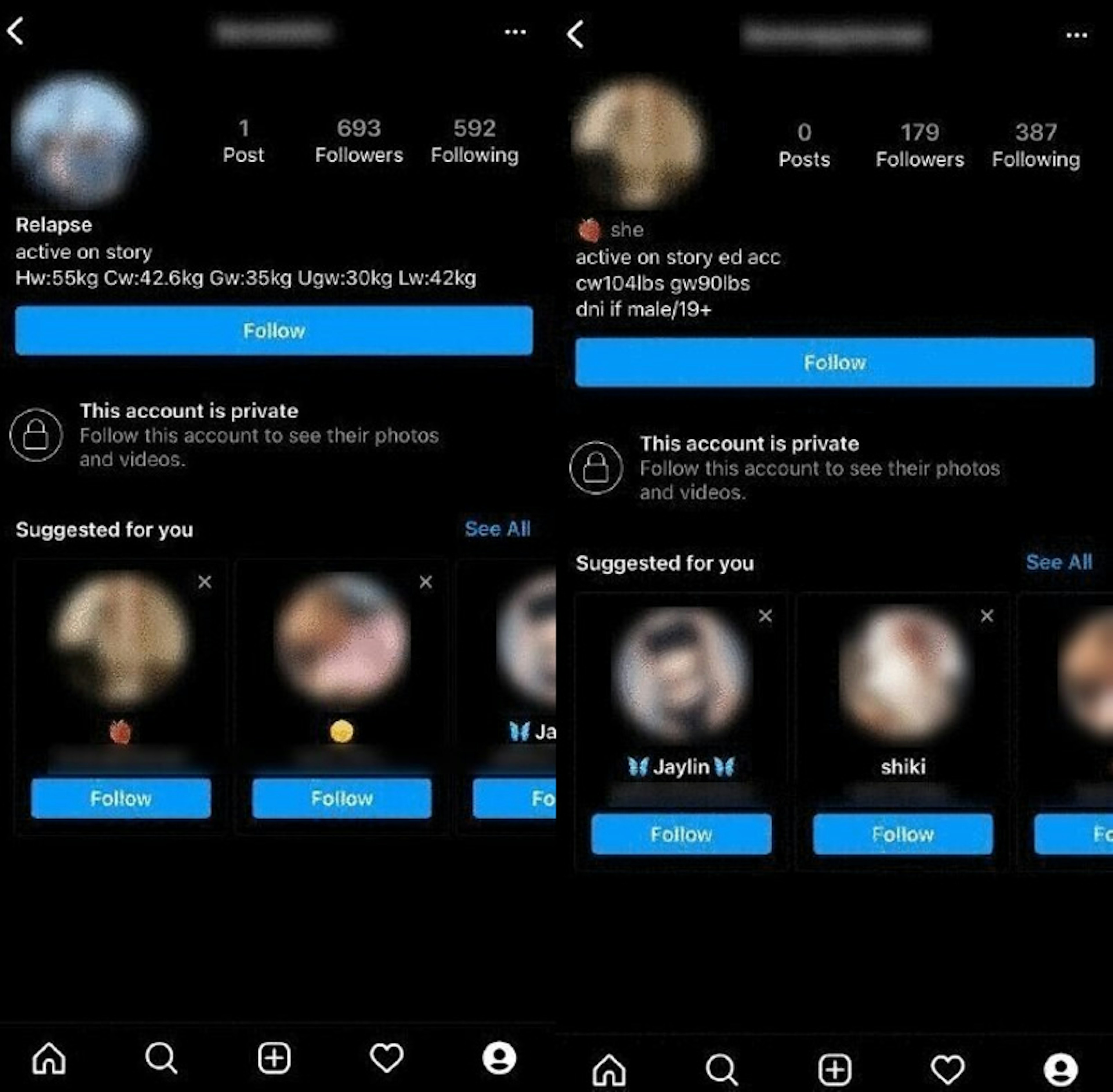

Researchers found that Instagram recommended accounts full of disturbing images of underweight women to users who showed an interest in getting thin. Many of the recommended accounts explicitly promoted anorexia and bulimia, listing goal weights as low as 77 pounds.

The investigation also revealed just how easy it is to get pulled into Instagram’s “thinfluencer” culture, with anorexia “coaches” reaching out with unsolicited offers to provide weight loss advice. Meanwhile, Instagram makes it exceedingly easy to search for hashtags and terms associated with eating disorders on the platform.

According to documents leaked earlier this year by Facebook whistleblower Frances Haugen, Instagram executives are acutely aware of the effects of content promoting unhealthy body ideals on young users. An internal presentation by an Instagram employee in 2019 said, “We make body image issues worse for one in three teen girls.” But the platform continues to amplify content promoting extreme weight loss, failing to enforce its own moderation policies.

The research by Reset and TTP adds to growing questions about Instagram’s impact on users struggling with body image issues, especially young people. Such questions will be front and center for Adam Mosseri, the head of Instagram, when he testifies before a Senate panel on Wednesday.

“The conclusions of this research are deeply concerning but sadly not surprising,” said Dr. Elaine Lockhart, chair of the Faculty of Child and Adolescent Psychiatry at the Royal College of Psychiatrists in the U.K. “I’m seeing more and more young people affected by harmful online content.”

“We need to see tougher regulation and stricter penalties for organizations that promote or amplify this content to users,” she said, adding that “government must compel social media companies to hand over anonymized data to researchers.”

Warning: The following slides contain images that some readers may find disturbing.

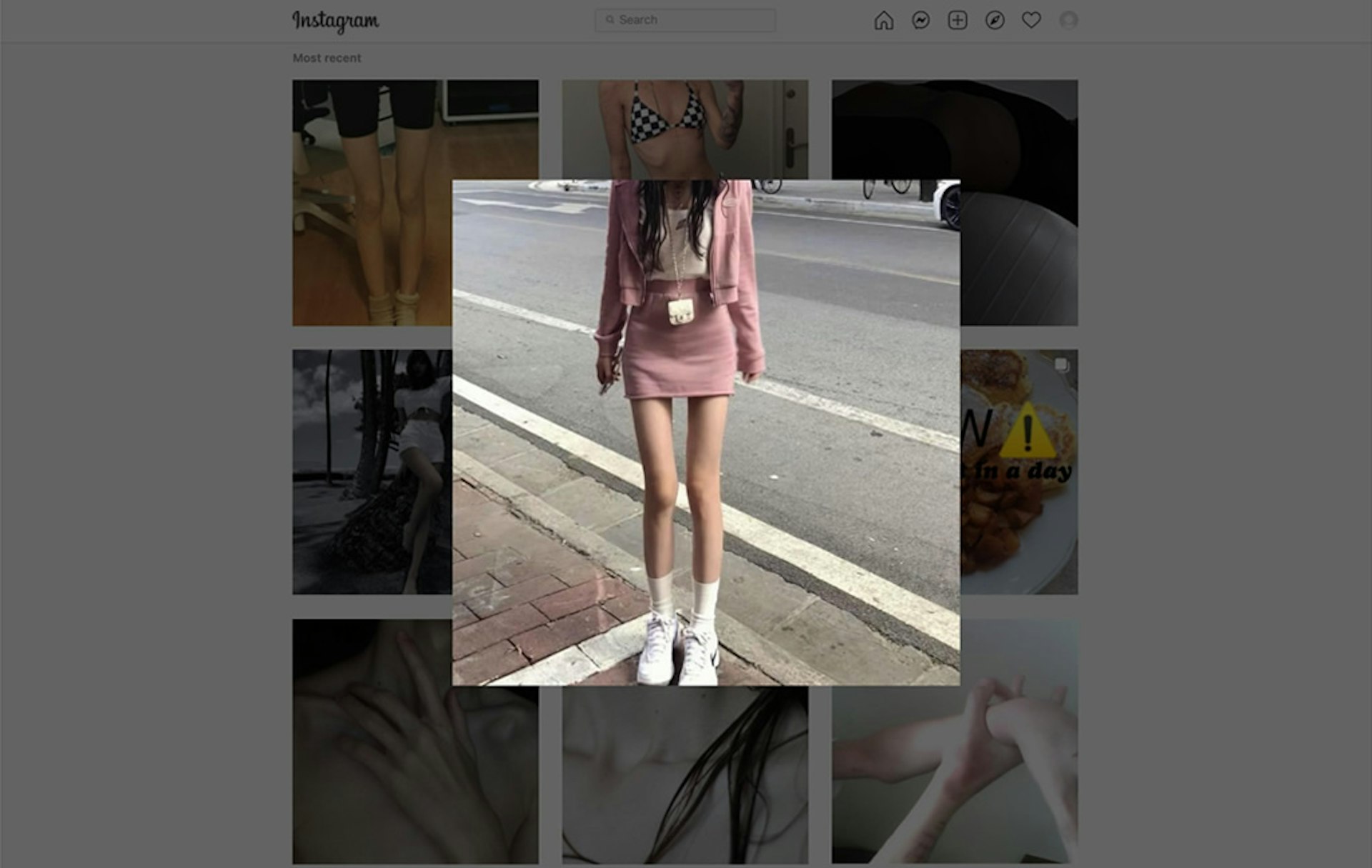

The world of ‘thinspo’

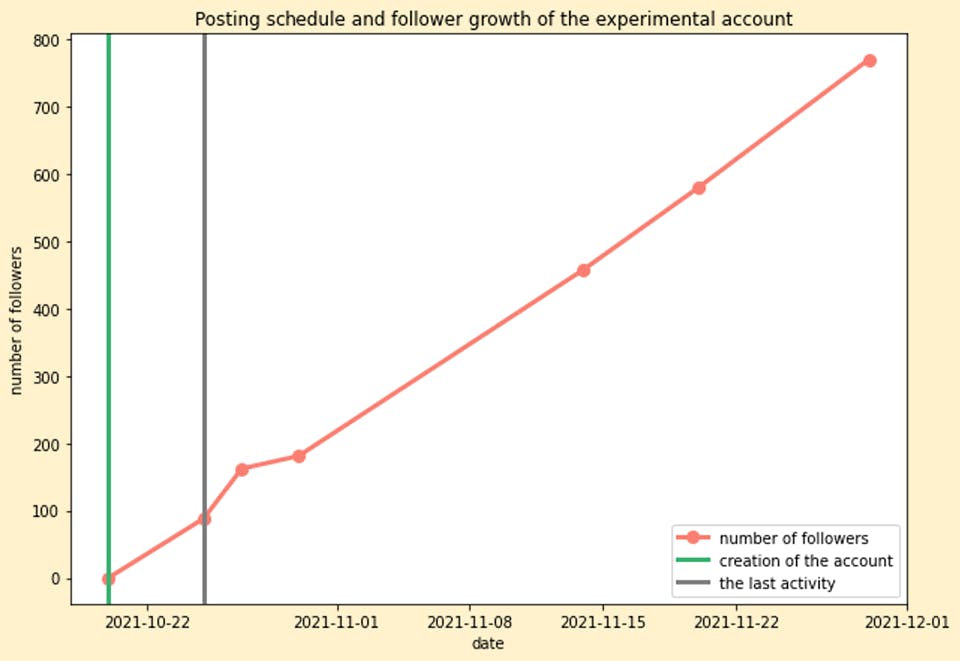

To assess the extent to which Instagram protects users from content that encourages eating disorders, our researchers created an account on Oct. 20, 2021, for a hypothetical user who showed an interest in getting thin, and used it to document the content that Instagram’s algorithm recommended.

The account was for a 29-year-old adult. Over the course of a week, we posted six pictures of thin bodies and used vocabulary in the bio section that is common in thinspiration communities—such as “My thinspo” and “TW,” short for trigger warning. (While originally meant to help people avoid triggering images, the term “TW” has become a signal that can attract users to eating disorder content.) We also subscribed to other “thinspo” Instagram accounts, both private and public.

To examine whether Instagram affords a higher level of protection to minors, we repeated the same experiment with a second account for a 14-year-old. We explicitly stated the age in the bio for that account.

During this process, when our hypothetical users followed just one account associated with eating disorders, Instagram started recommending similar accounts.

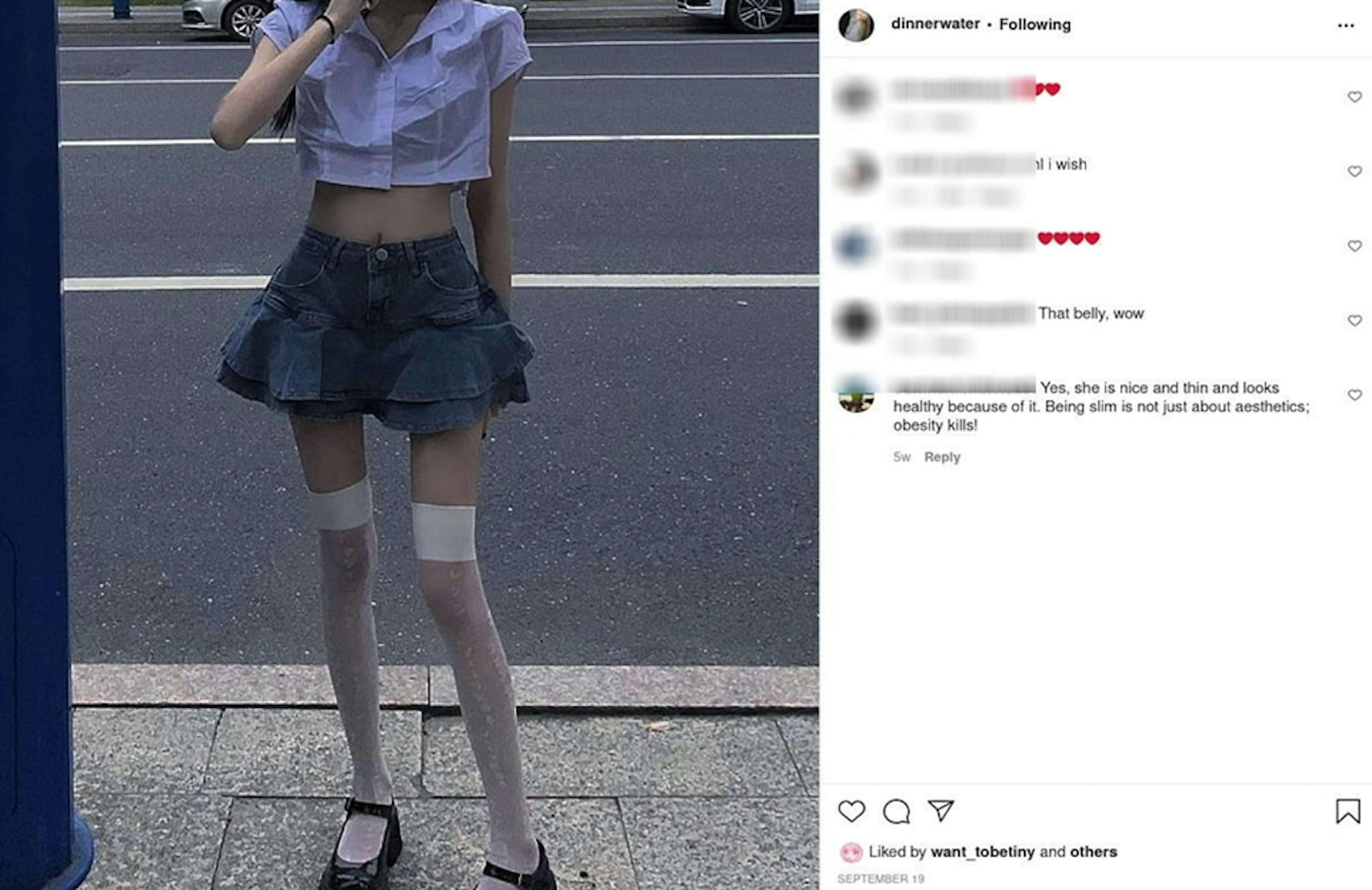

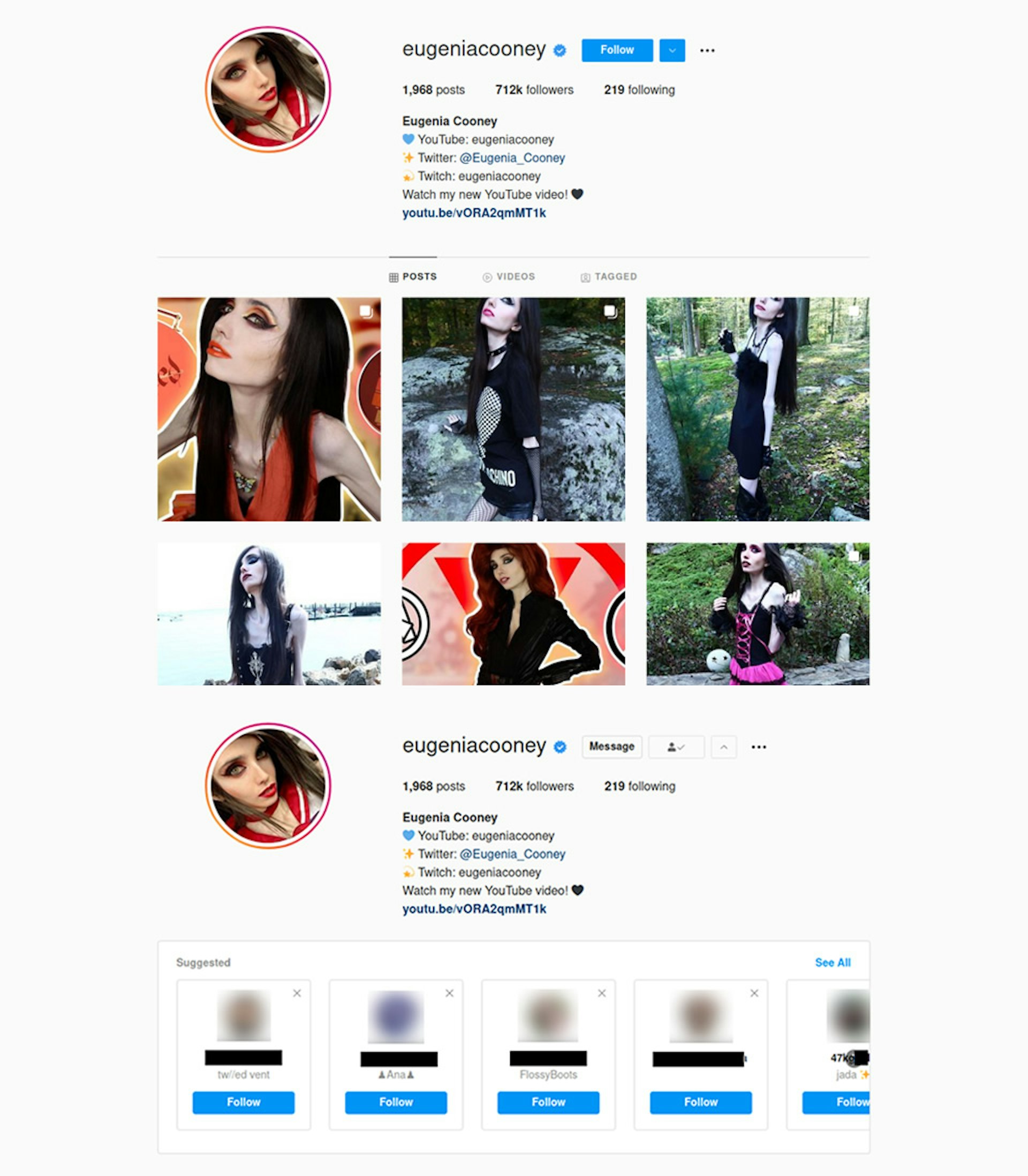

For example, when our first test user started following a verified account with over 700,000 followers, run by a figure with a fan base in the "thinfluencer" community, Instagram’s algorithm suggested we also follow so-called “pro-ana” accounts. (“Ana” is a common shorthand for anorexia nervosa.) It’s easy to see how this could send a vulnerable person down a rabbit hole that normalizes toxic body images and extreme weight loss.

Interestingly, many of the pro-ana accounts recommended by Instagram had smaller follower counts. Accounts like these would normally have a hard time getting traction on the platform, but Instagram’s algorithmic amplification actively promoted them to new users, helping them find a broader audience.

The growth curve of our first test account further illustrates the problem of algorithmic amplification on Instagram. The account’s audience increased more than sevenfold in the three weeks after its last activity, suggesting that Instagram recommended it to other users.

Our findings for the 14-year-old account were equally alarming.

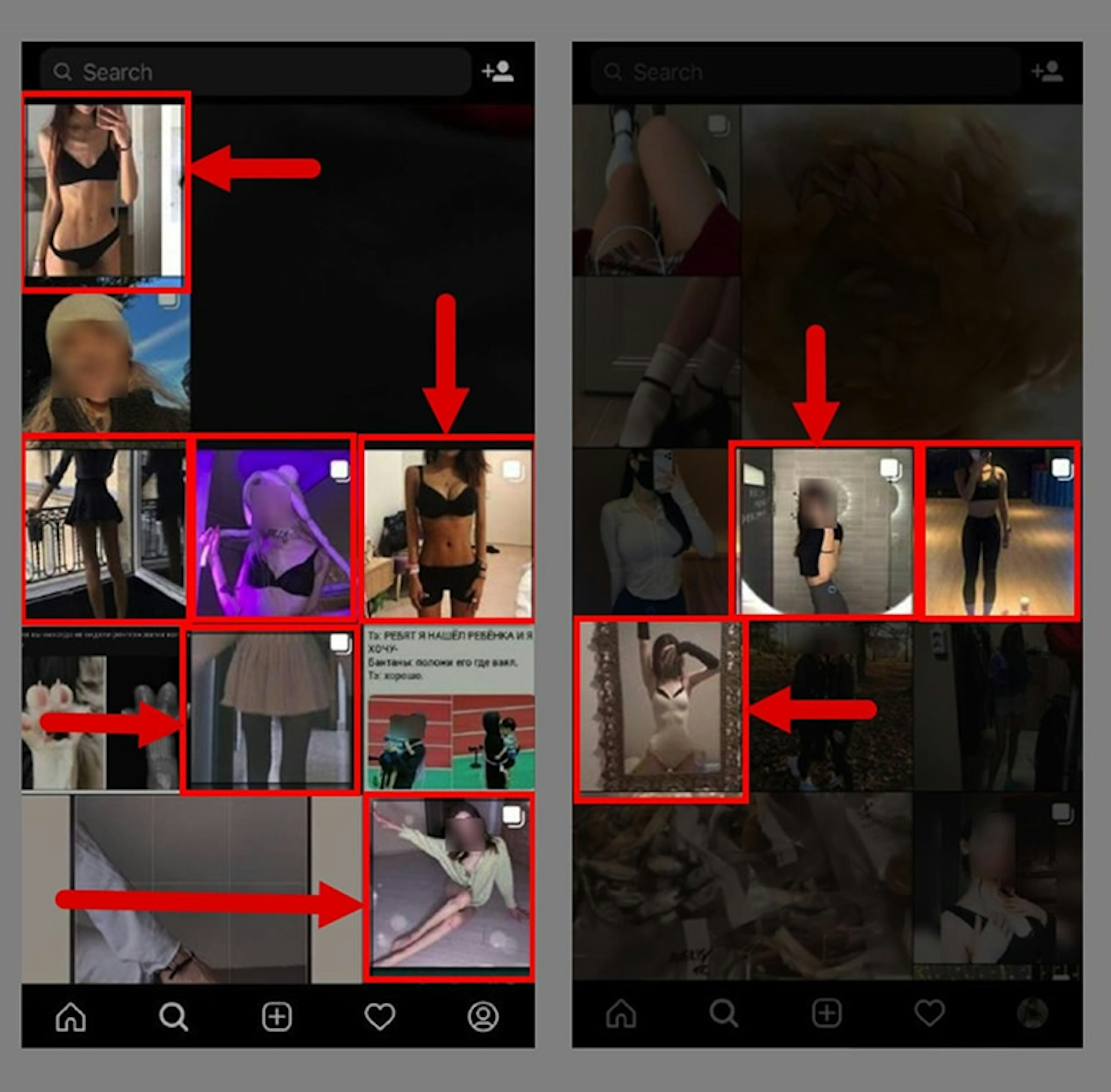

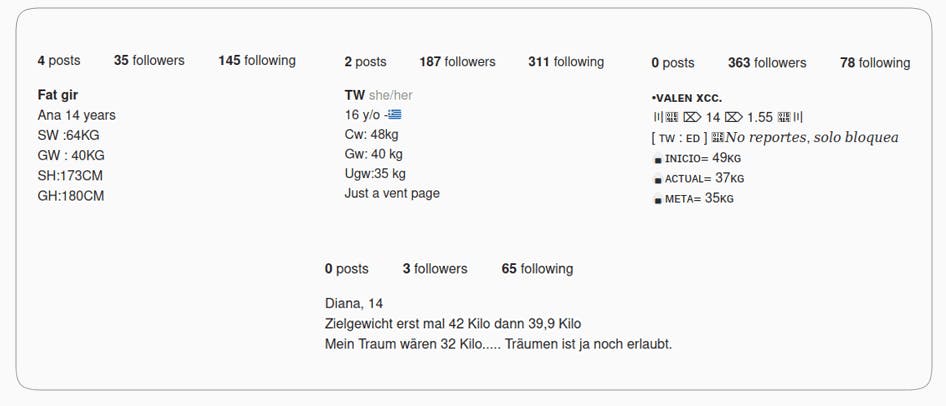

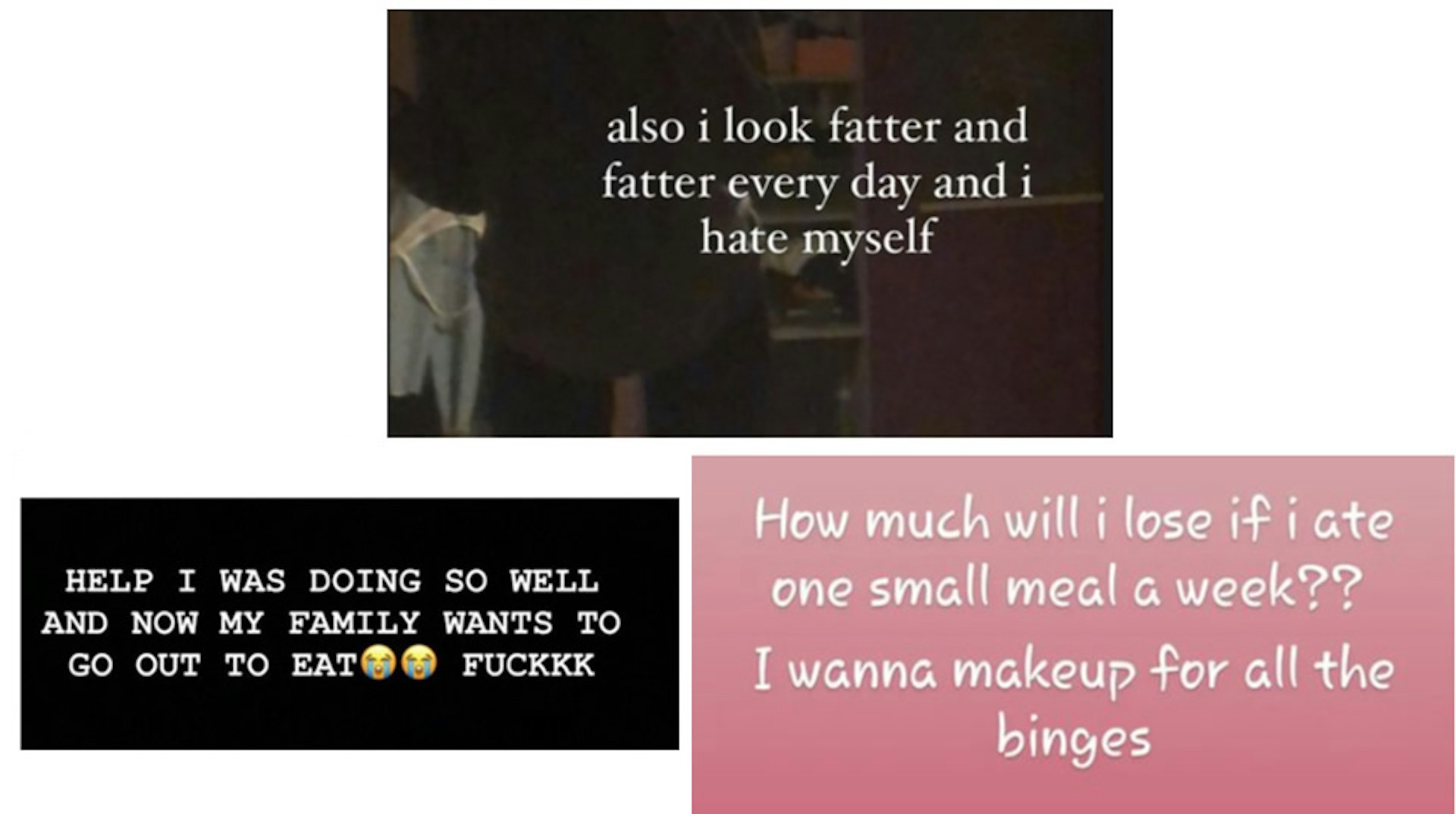

In the teen user’s Discovery tab, Instagram recommended a number of large “thinfluencer” accounts that had at least 1,000 followers and featured highly produced content with dangerous body images. At the same time, Instagram’s “Discover people” feature (found in a user's profile) recommended smaller private accounts of young users oriented around extreme weight loss. This all creates a troubling ecosystem: “Thinfluencer” accounts on Instagram promote unhealthy body ideals, while peer communities of young users encourage each other to pursue those ideals.

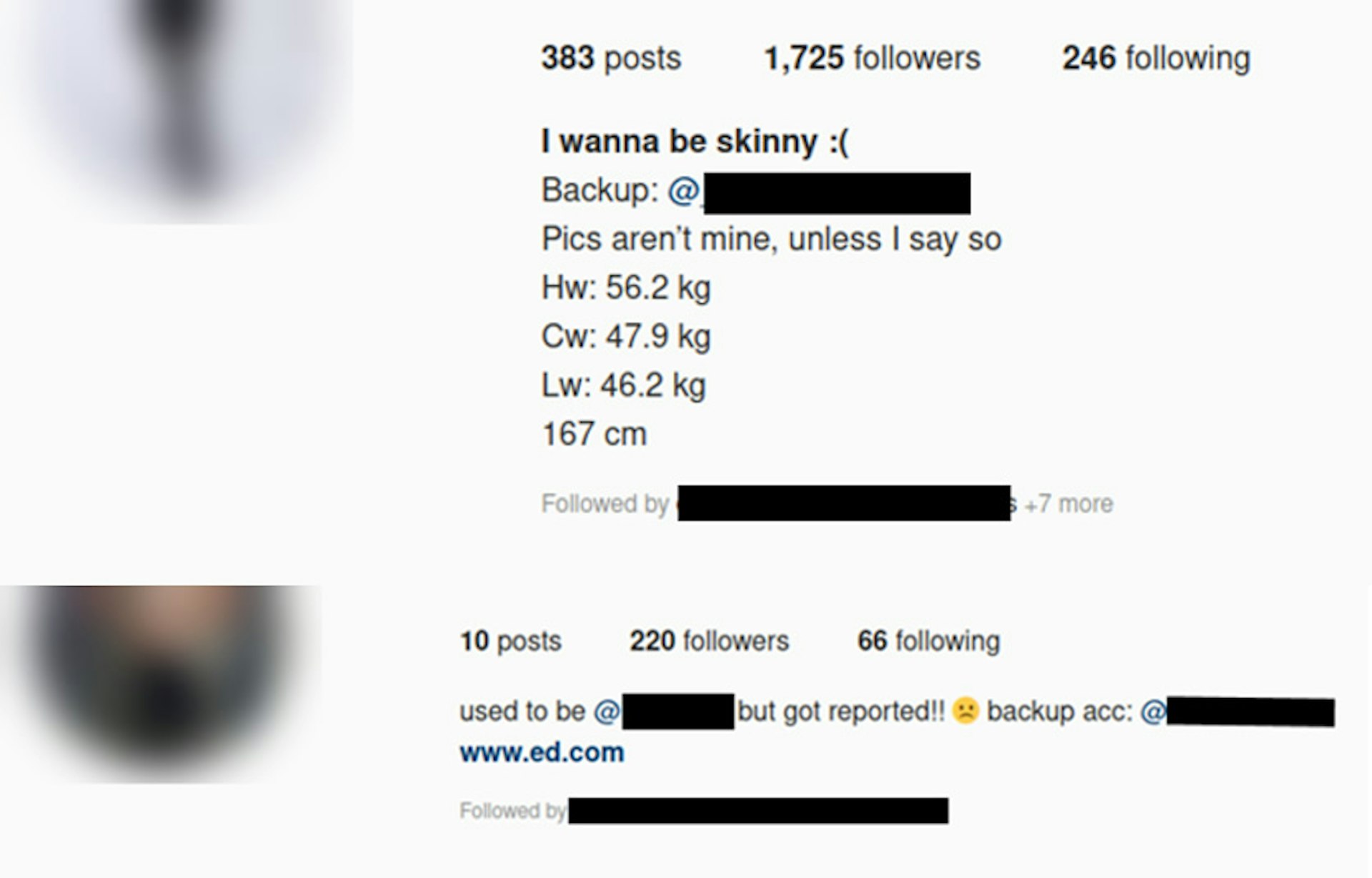

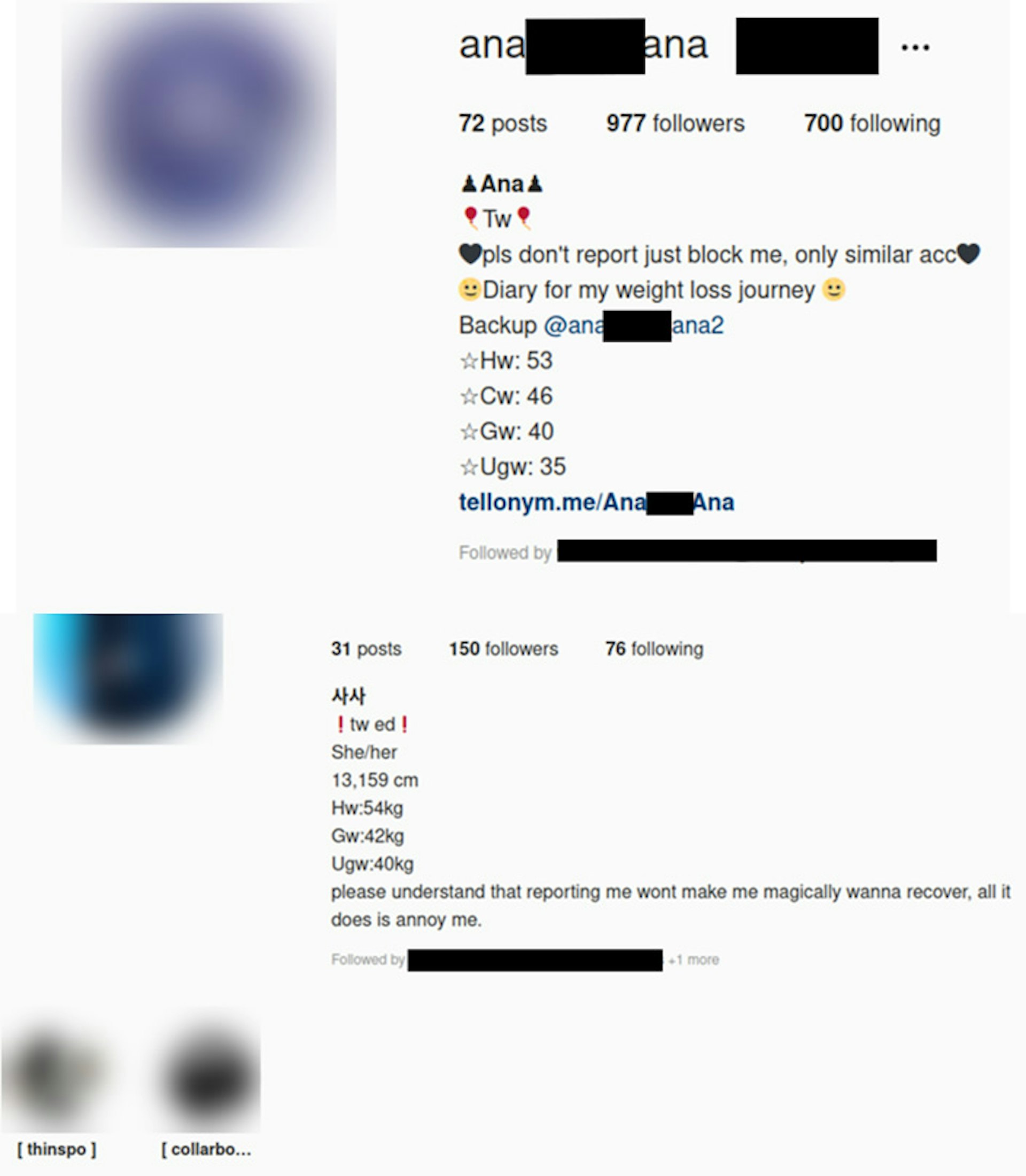

Examples of private user accounts recommended via the ‘Discover people’ feature

Anorexia ‘coaches’

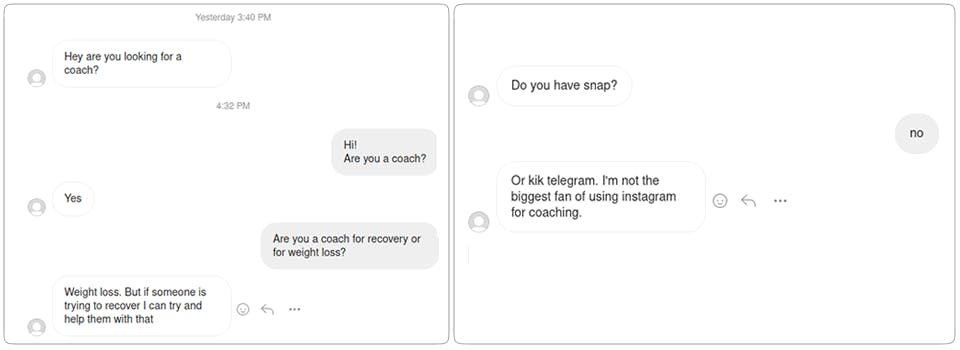

Within the Instagram communities promoting extreme body images, self-described “coaches” provide weight-loss advice to other users. The media have reported for years on the dangers of such individuals preying on vulnerable young people, particularly girls, on social media, so the problem should come as no surprise to Instagram. Yet it took only four days for a “coach” to contact our first account.

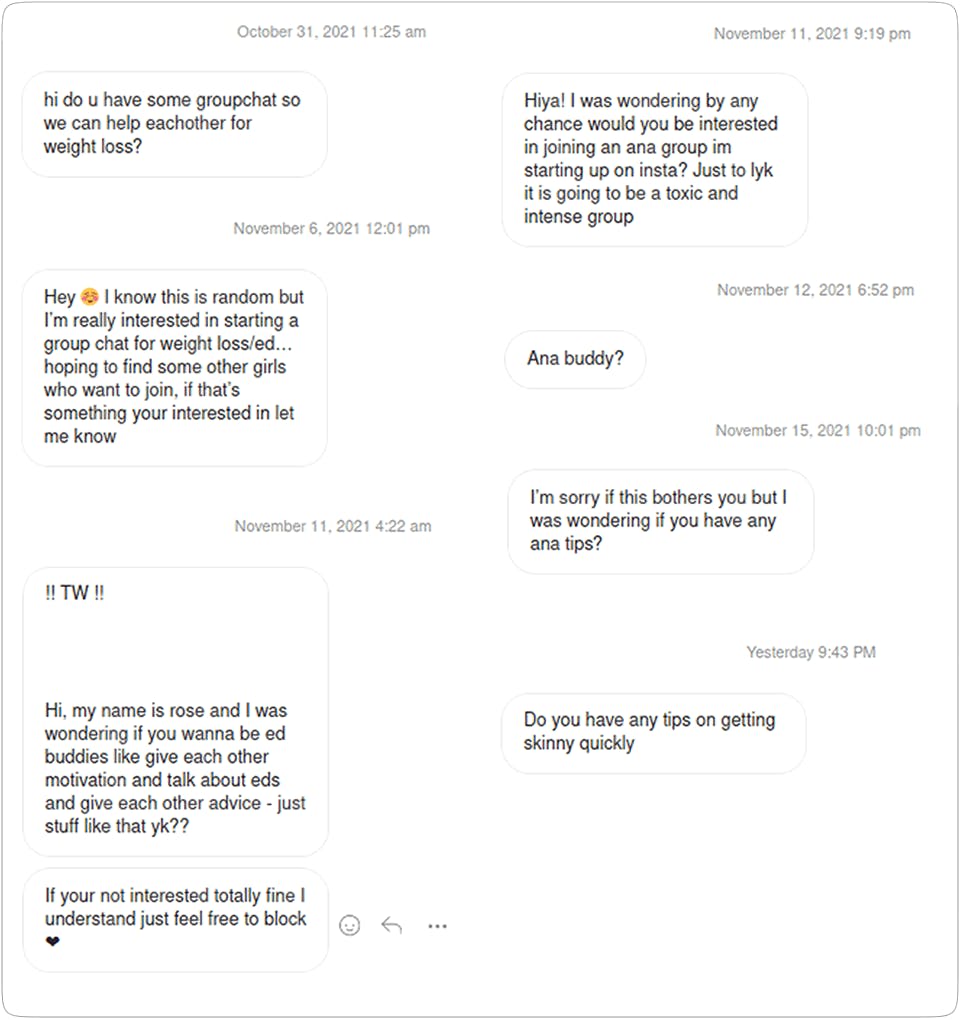

The following are screenshots of the interaction between the “coach” and our test account. The “coach” immediately attempted to shift the interaction to other platforms such as Snapchat or Telegram, where personal conversations between users are harder for both the platforms and law enforcement authorities to track.

During the experiment, our first account received personal messages from other users who likely found our account through the Discovery tab. (Instagram does not provide researchers with the data needed to track amplification patterns.) Those other users asked for tips on “how to get skinny quickly” or whether we wanted to be “ana buddies.” In many cases, it was impossible to verify the users’ authenticity, and we did not respond to any of the messages.

Unmoderated group chats

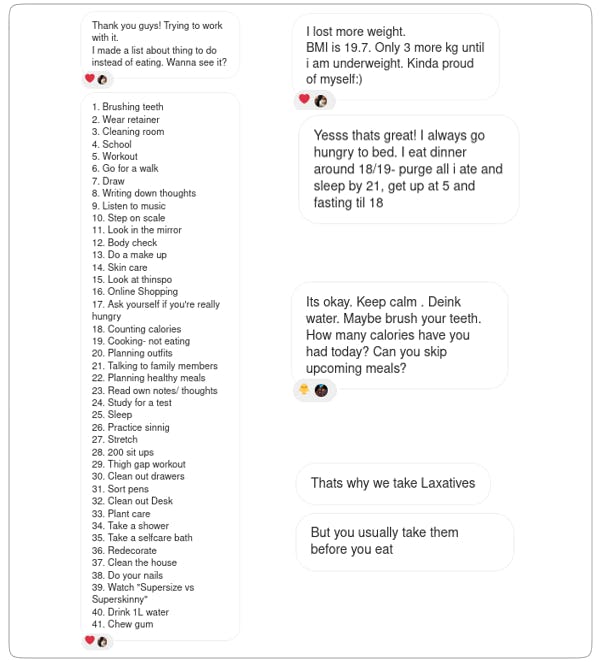

After being invited, we joined one group chat called “Supporting starvation,” which had 17 other members. We did not write any messages in that chat, but we captured messages from other users.

Enforcement loopholes

Instagram’s official policy states: “[W]e’ll remove content that promotes or encourages eating disorders” while allowing people to “share their own experiences and journeys around self-image and body acceptance.” But our investigation showed that the company’s enforcement of this policy is patchy at best.

During our experiment, Instagram blocked the hashtags #ana (short for anorexia) and #mia (short for bulimia), but our researchers found that the fully spelled-out hashtags #anorexia, #bulimia, and #magersucht (anorexia in German) were still active. What’s more, typing “ana” or “mia” into the Instagram search bar as non-hashtags still yields a significant amount of content promoting eating disorders.

Instagram provides some resources to help people suffering from eating disorders. Some pages for hashtags associated with eating disorders include a pop-up message pointing to ways to get support, including a link to reach a helpline volunteer. But there are loopholes in this system. For example, if a user searches for a banned hashtag like #thinspo, they don't get the pop-up message.

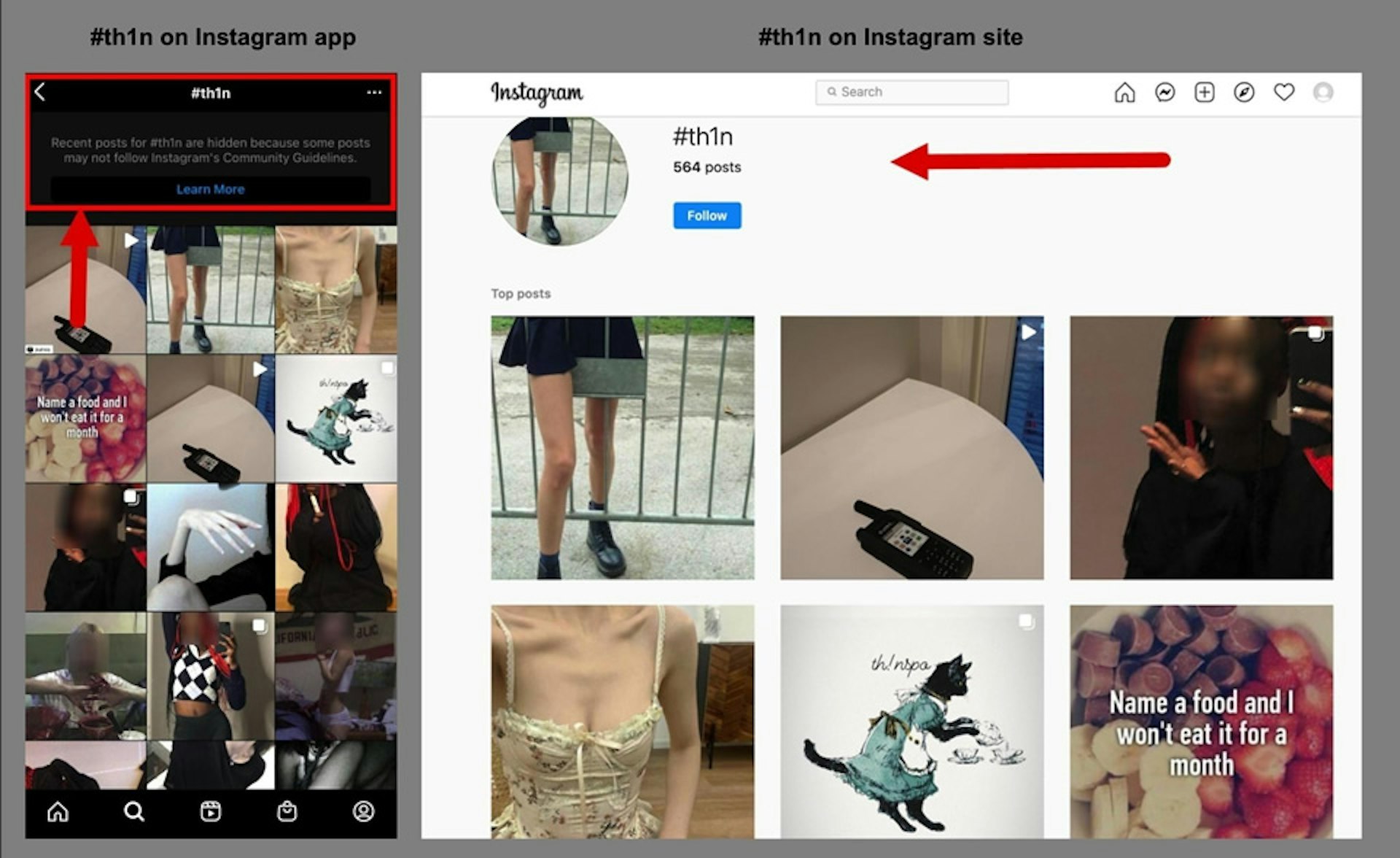

TTP also found discrepancies in how Instagram deploys safety features. Take the page for the hashtag #th1n, a reference to "thin." On the app, Instagram showed a warning on the #th1n page that the content may go against its Community Guidelines. Yet the same #th1n page on the Instagram website included no such warnings, despite the presence of some graphic eating disorder content.

Moreover, without a prompt, resources for people suffering from eating disorders are hard to find on Instagram. A user must click on their profile icon, then click "Settings," then click "Help" in the sidebar menu, then click "Help Center," then click on the drop-down menu for "Privacy, Safety, and Security," and finally click "About Eating Disorders."

‘Multiple accounts’ policy

According to Instagram's Help Center, one user can have up to five different Instagram accounts and switch between them without logging out—and our researchers found this feature plays an important role in the “thinspiration” community.

We analyzed thousands of “thinspiration” profiles and found that backup accounts are common, with users often featuring them in their bios. The apparent strategy with these backup accounts is to evade removals or suspensions by Instagram that might cut people off completely from the eating disorder content they seek.

We found examples of users arguing that reporting their accounts won’t help them recover. One 13-year-old Instagram user who was engaging with pro-anorexia content said “reporting me won’t make me magically wanna recover, all it does is annoy me.”

Conclusion

Our research reveals multiple loopholes in Instagram's product design and safety policies, which make Instagram a danger to the mental health and physical well-being of one of its most vulnerable user groups: people with eating disorders.

Instagram not only fails to enforce its own policies, but it also proactively recommends toxic body image content to its adult and teen users. In this way, Instagram fuels the idealization and marketization of dangerous body ideals, while fostering communities of young users prone to eating disorders.

The platform, meanwhile, hasn’t adequately addressed the threat of anorexia “coaches” who prey on young people. These shortcomings greatly increase the risk of users being drawn into communities of self-harm.