Apple announced a controversial plan this month to scan U.S. iPhones for images of child sexual abuse—a response to mounting concerns about the digital explosion of such content.

But a new investigation by the Tech Transparency Project (TTP) shows that Apple is failing to take even the most basic steps to protect children in one of its core profit engines: the App Store.

The investigation reveals major holes in the App Store’s child safety measures, showing how easy it is for young teens to access adult apps that offer dating, random chats, casual sex, and gambling, even when Apple knows the user is a minor.

The results undermine Apple’s promise that its App Store is a “safe place for kids” and that it rejects apps that are “over the line — especially when it puts children at risk.”

Using an Apple ID for a simulated 14-year-old, TTP examined nearly 80 apps in the App Store that are limited to people 17 and older—and found that the underage user could easily evade age restrictions in the vast majority of cases.

Among the troubling findings: A dating app that opened directly to pornography before asking a user’s age; adult chat apps filled with explicit images that never asked the user’s age; and a gambling app that let the minor account deposit and withdraw money.

The investigation also identified broader flaws in Apple’s approach to child protection. Chief among them: Apple and many apps essentially pass the buck to each other when it comes to blocking underage users, making it easy for young teens to slip through the system.

Meanwhile, TTP found a number of apps design their age verification mechanisms in a way that minimizes the chance of learning the user is underage. Apple, despite its total command over the App Store, takes no discernible steps to prevent this.

Taken together, these review failures create an ecosystem that is much more dangerous for minors than advertised, undermining assurances from Apple executives like CEO Tim Cook, who recently testified in the Epic Games v. Apple trial that the App Store would be a “toxic kind of mess” without Apple’s approval process.

In that same trial, evidence emerged that Apple insiders are aware of the company’s child safety problems. In text messages from last year, an engineer who led the company’s Fraud Engineering Algorithms and Risk unit wrote that Apple is more concerned about privacy than trust and safety, making it “the greatest platform for distributing child porn.”

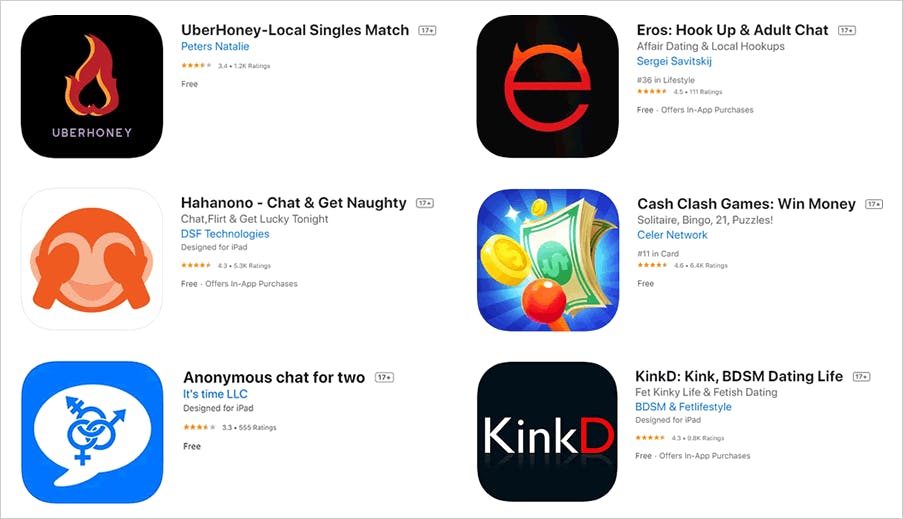

TTP's test showed a 14-year-old had easy access to these and other apps:

Blatant violations

TTP set up an Apple ID for a fictitious 14-year-old user with a birthdate of February 2007 and used it to download and test 75 apps in the App Store across several adult-oriented genres: dating, hookups, online chat, and casino/gambling. All of these apps were designated 17+ by the App Store.

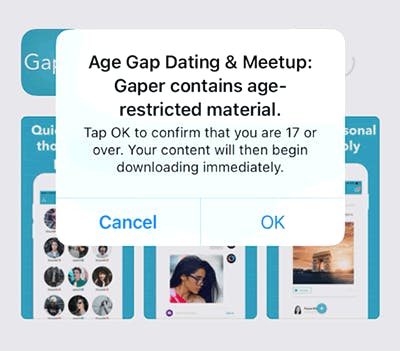

Minors can click OK to say they're 17+, even if Apple knows they're not.

When the underage test account tried to download these adult apps, Apple served a pop-up message asking the user to confirm they were 17 or older. But if our 14-year-old user clicked “yes” to say they were 17+, Apple did nothing to prevent the download, despite knowing, by virtue of the Apple ID, that the user wasn’t old enough. That puts the onus on the apps themselves to prevent access by minors—and TTP found this system is far from reliable.

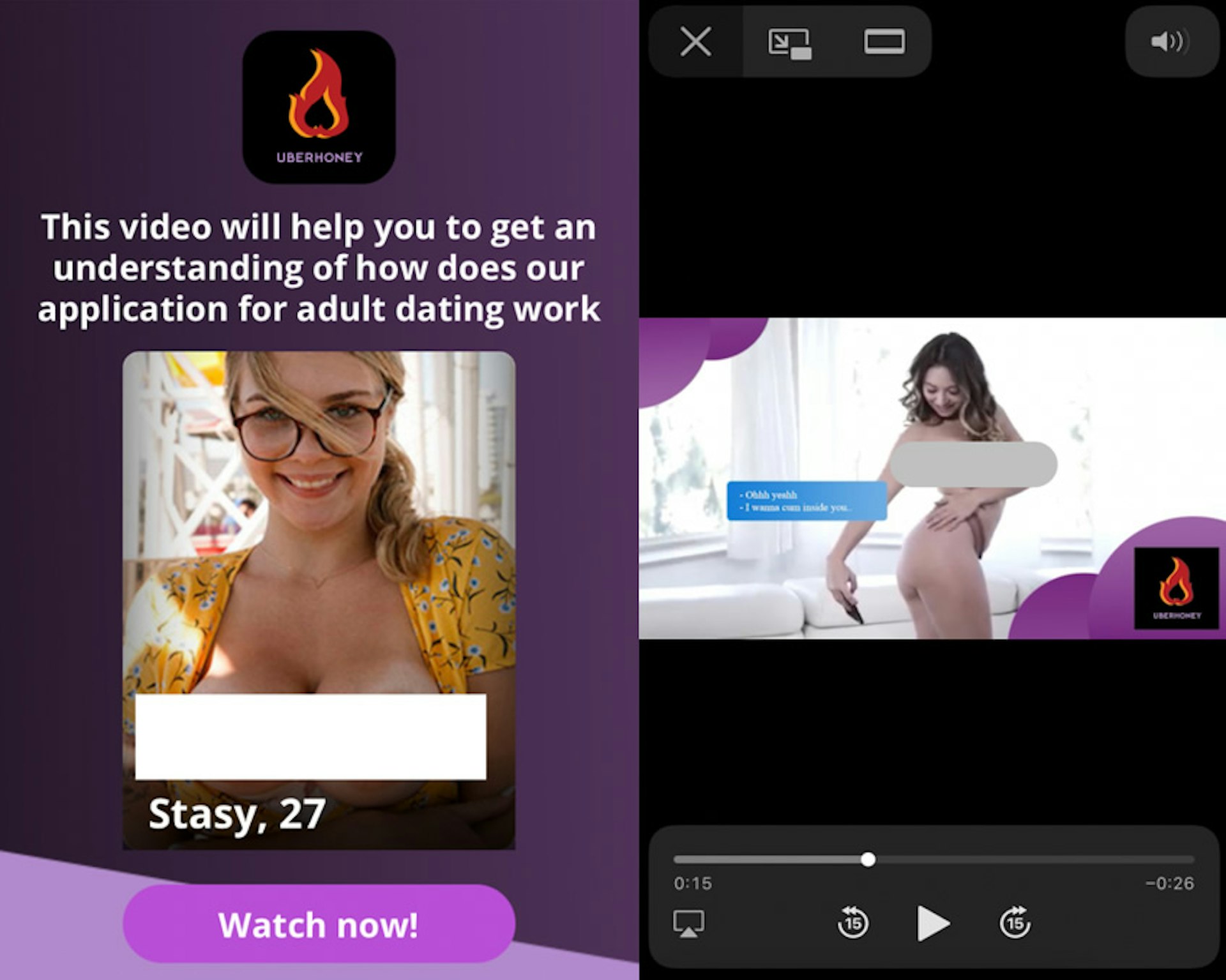

Take UberHoney, a local singles match app that was among the U.S. top-50 most downloaded Lifestyle apps in March 2021, according to App Store analytics firm App Annie. When TTP’s underage user opened the app, it immediately displayed pornographic content before even asking the user’s age. That’s a clear violation of Apple’s App Store Review Guidelines, which prohibit “overtly sexual or pornographic material.” UberHoney has been available on the App Store since September 2020.

The opening screen and video on UberHoney (censored).

Another example is Anonymous chat for two, a 17+ app that pairs users randomly and allows them to exchange text messages and images. TTP’s simulated 14-year-old user was able to gain immediate access to this app, and at no point did it ask for the user’s age. The reviews for the app are filled with complaints of bots linking to pornography and, in the words of one reviewer, “99% of the people here… just want nudes.”

A similar app, Hippo - Random Live Video Chat, never asked the 14-year-old user for their age, despite stating in its Terms of Service that users must be 18 or older. More than a dozen App Store reviews of the app refer to instances of pornographic content or users exposing themselves to others.

These kinds of random chat apps persist in the App Store despite Apple’s prohibition against “Chatroulette-style experiences” and apps that feature “user-generated content or services that end up being used primarily for pornographic content.” Apple changed its guidelines to ban such apps back in 2010.

Loopholes and workarounds

TTP’s investigation also identified broader flaws and inconsistencies in the App Store’s approach to child safety.

For example, 37 of the adult apps allowed registration with an Apple ID, and in each case, our 14-year-old user was able to use that method to sign up, even though Apple knew the ID was underage. If the apps asked for the user’s age, the minor would simply enter 18—again, with no intervention by Apple. The apps included HOO — Adult Hook Up & Friend Finder, Hahanono – Chat & Get Naughty, and Tinder.

These findings strongly suggest Apple doesn’t share user age data with the apps in its App Store. (Tinder parent company Match Group has actually complained to Congress that Apple’s refusal to do so prevents its apps from conducting a due diligence check on users.)

It was a different story when our 14-year-old tried to register via Facebook, a method offered by 31 of the apps. TTP used an underage Facebook account with the same name and age as the simulated minor, and in two-thirds of the cases (21), Facebook blocked the attempted registration, evidently because the user wasn’t old enough.

In seven of the cases that escaped a Facebook block, the apps themselves blocked the user, suggesting they knew from the sync-up with Facebook that the user was underage. Only one, Tinder, blocked the user permanently, while five others (Badoo, Clover, DOWN, Eros, and HER) were less stringent, letting the minor switch to another registration method and enter their age as 18. Dating app Zoosk, meanwhile, simply let the minor enter their age as 18 and proceed.

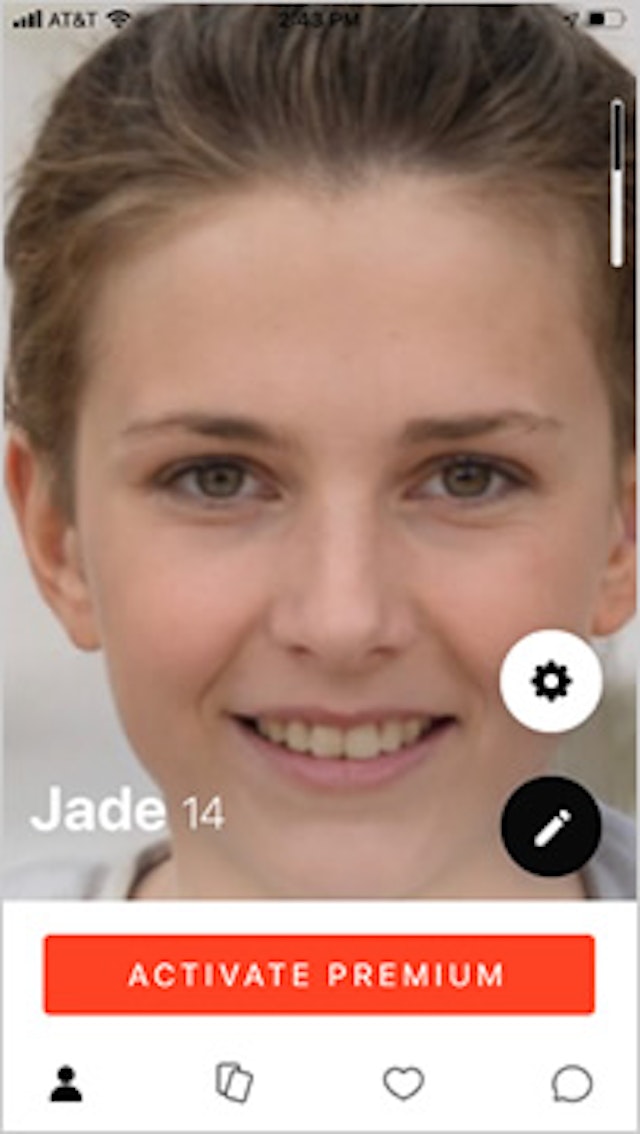

The profile TTP set up on Eros using an AI-generated image.

The Eros example provides a good case study in what teens encounter once they get inside these apps. TTP’s test account set up an Eros profile using an artificial intelligence-generated image of a minor. The app then served up a selection of hookup options, including “No Strings Attached,” “Friends with Benefits,” “One Night Stands,” and “Swinging,” and allowed the user to begin viewing adult profiles. Eros has been available in the App Store since March 2020, according to App Annie.

The investigation also found that five of the apps, after Facebook blocked an attempted registration, locked out the user—but not permanently. The lock-out was easily circumvented by deleting and redownloading the apps. These apps were Bumble, Hinge, BLK, Upward, and Chispa.

Inconsistent rules

Another interesting case is the chat app Yubo, which has been dubbed “Tinder for Teens.” It’s restricted to users 17 and up in the App Store, but the app itself allows users as young as 13 to register, and even says so in its terms of service.

It’s not clear why there’s a discrepancy between the App Store’s 17+ rating and Yubo’s actual practice of permitting young teens to join. Yubo lets users swipe to meet friends in a Tinder-like setup, and matches can enter video chats with each other. The app, which last year boasted of 25 million users, has raised concerns over the years that it provides opportunities for sexual predators to groom minors.

TTP’s investigation found that gambling apps, bound by laws that prohibit underage gambling, are generally more thorough in checking a user’s age (sometimes requiring pictures of a government-issued ID), but there are some notable exceptions.

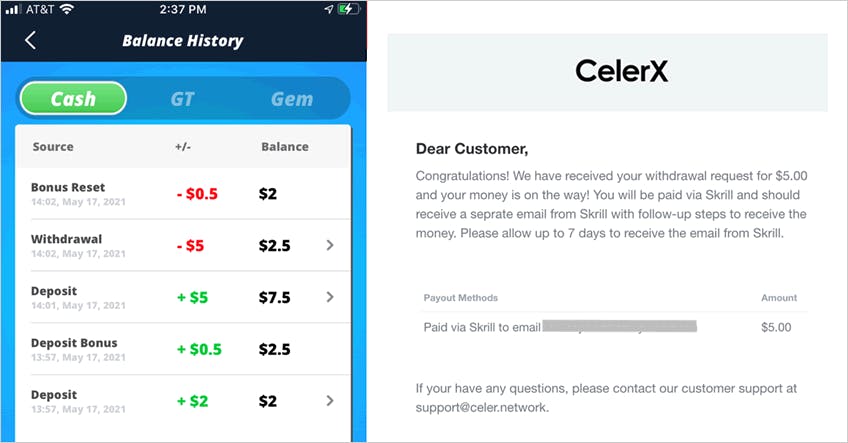

One of those is Cash Clash Games, a gambling app that promises users can “reap mega rewards.” It allowed our hypothetical 14-year-old account to play games and to deposit and withdraw cash. The only attempt at age verification took the form of minuscule print at the bottom of the registration page stating the user agreed they were 18 or over. (Cash Clash Games later banned TTP’s account for unspecified reasons.)

Deposit statement from Cash Clash Games and its parent company, CelerX.

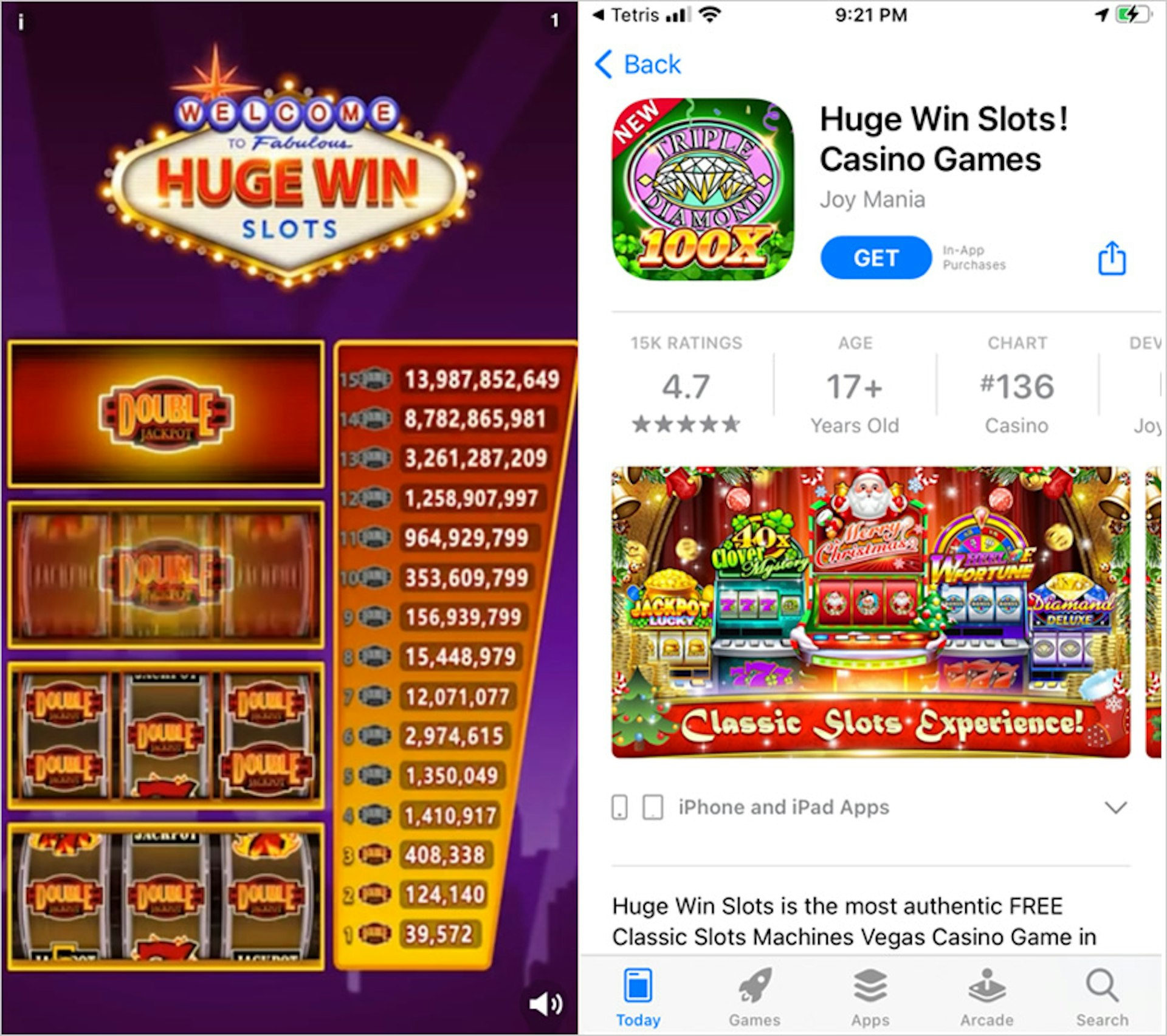

TTP also observed that, on several occasions, Apple allowed advertisements for 17+ casino apps to be served to the underage test account, including in a game of Tetris, which is not an age-restricted app.

Casino game ad served to underage Apple ID while the user was playing Tetris. The ad immediately opens the download window if the screen is tapped anywhere at any time.

See no evil

A number of apps tested by TTP appeared to be designed to minimize the possibility of learning a user is underage.

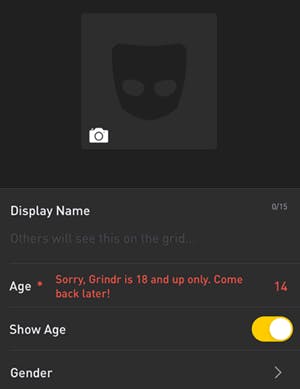

For example, Grindr, a popular dating and hookup app for gay and bisexual men, told our 14-year-old user to “come back later” when they entered their “true” date of birth, but did nothing to block the same user from trying again with an 18-year-old birth date to gain access.

Users on Grindr can send unmoderated photos to anyone in their vicinity, and the app can display the exact distance of other users in feet. Within 24 hours of registering for Grindr as a supposed 18-year-old, TTP’s test account received six unsolicited messages from other users, including two sexually explicit photos.

Grindr allows the user to immediately adjust their age upward to gain access to the app.

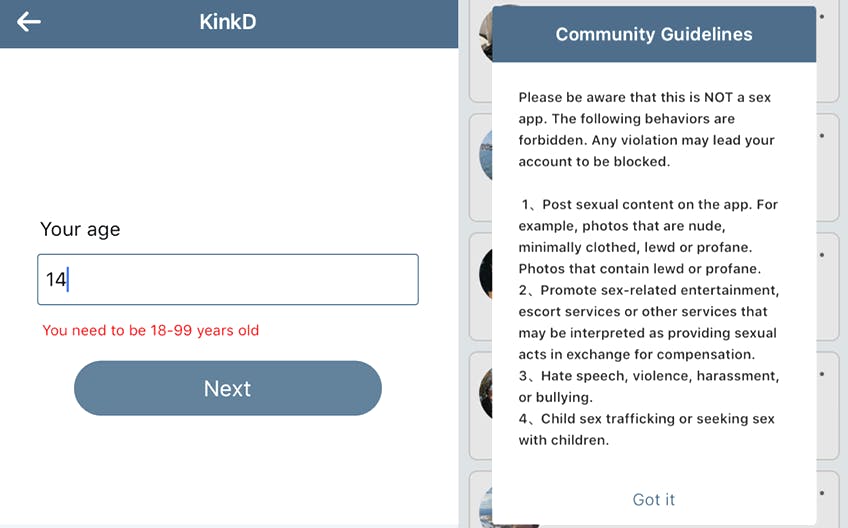

It’s a similar situation with KinkD, a “bondage, kink, fetish and BDSM dating community” app that is listed as 17+ and says it prohibits sex with children. When our minor user tried to enter an underage birth date, the app said they were underage—but simply let them readjust it. Once inside, a user can freely send images and messages to other accounts within their area.

KinkD also allows the minor to readjust their age to 18. KinkD displays the following warnings before allowing the user to interact with anyone in the same area via a chat.

This willful blindness to minor users tweaking their age contrasts with the stricter approach of dating apps like Tinder, Match, and Skout. TTP found that all three apps permanently locked out the hypothetical 14-year-old after they entered their “true” birth date or attempted to register via the underage Facebook account. Once the lockout occurred, the user would encounter a nonfunctional opening screen telling them to come back when they’re older.

This is not the first time Apple has faced scrutiny over sexual content in the App Store. In 2019, the Washington Post found over 1,500 reviews on just six apps detailing incidents of unwanted sexual behavior, including many that targeted minors. TTP’s investigation suggests little has been done to rectify the mechanics of the App Store that led to these incidents.

Tinder was one of the few apps tested that permanently locked out users it suspected of being underage. This screen remains even if the app is deleted and redownloaded.

More on methodology

TTP compiled the 75 apps to test through the top-100 most downloaded lists in the Lifestyle and Social Networking categories of the U.S. App Store, App Store recommendations, and in-Store keyword searches.

For each app, TTP tested the ability of the underage user to register for the app via their Apple ID and Facebook account, two of the most common methods among the 75 apps tested. The Facebook account shared the same name, age, and email as the Apple ID. We also tried to sign up via an email and/or phone number, both tied exclusively to the simulated underage user, in cases where Apple ID or Facebook registration was not available. We further examined the apps for any other vulnerabilities that could endanger minor users.

For the purpose of this study, TTP did not activate parental controls on the hypothetical 14-year-old user’s iPhone. (These controls are disabled by default.)

Recent research on the usage of parental controls for iOS is lacking. But earlier studies suggest that, in general, these features haven’t been widely embraced by parents of children with smartphones. A 2016 Pew survey indicated that in practice only 16% of American parents of teenagers use parental control features on mobile devices. A 2018 survey in the UK found that 61% of parents of children aged 4 to 16 did not use consistent parental controls across their broadband or mobile network.